Your Review: The Astral Codex Ten Commentariat (“Why Do We Suck?”)

Finalist #5 in the Review Contest

[This is one of the finalists in the 2025 review contest, written by an ACX reader who will remain anonymous until after voting is done. I’ll be posting about one of these a week for several months. When you’ve read them all, I’ll ask you to vote for a favorite, so remember which ones you liked]

Introduction

The Astral Codex Ten (ACX) Commentariat is defined as the 24,485 individuals other than Scott who have contributed to the corpus of work of Scott’s blog posts, chiefly by leaving comments at the bottom of those posts. It is well understood (by the Commentariat themselves) that they are the best comments section anywhere on the internet, and have been for some time. This review takes it as a given that the ACX Commentariat outclasses all of its pale imitators across the web, so I won’t compare the ACX Commentariat to e.g. reddit. The real question is whether our glory days are behind us – specifically whether the ACX Commentariat of today has lost its edge compared to the SSC Commentariat of pre-2021.

A couple of years ago Scott asked, Why Do I Suck?. This was a largely tongue-in-cheek springboard to discuss a substantive criticism he regularly received - that his earlier writing was better than his writing now. How far back do we need to go before his writing was ‘good’? Accounts seemed to differ; Scott said that the feedback he got was of two sorts:

“I loved your articles from about 2013 - 2016 so much! Why don’t you write articles like that any more?”, which dates the decline to 2016

“Do you feel like you’ve shifted to less ambitious forms of writing with the new Substack?”, which dates the decline to 2021

Quite a few people responded in the comments that Scott’s writing hadn’t changed, but it was the experience of being a commentor which had worsened. For example, David Friedman, a prolific commentor on the blog in the SSC-era, writes:

A lot of what I liked about SSC was the commenting community, and I find the comments here less interesting than they were on SSC, fewer interesting arguments, which is probably why I spend more time on [an alternative forum] than on ACX.

Similarly, kfix, who seems to be a long-time lurker (from as early as 2016) and has become more active in the ACX-era, writes:

I would definitely agree that the commenting community here is 'worse' than at SSC along the lines you describe, along with the also unwelcome hurt feelings post whenever Scott makes an offhand joke about a political/cultural topic.

And of course, this position wasn’t unanimous. Verbamundi Consulting is a true lurker who has only ever made one post on the blog – this one:

Ok, I've been lurking for a while, but I have to say: I don't think you suck… You have a good variety of topics, your commenting community remains excellent, and you're one of the few bloggers I continue to follow.

The ACX Commentariat is somewhat unique in that it styles itself as a major reason to come and read Scott’s writing – Scott offers up some insights on an issue, and then the comments section engages in unusually open and unusually respectful discussion of the theme, and the total becomes greater than the sum of the parts. Therefore, if the Commentariat has declined in quality it may disproportionately affect people’s experience of Scott’s posts. The joint value of each Scott-plus-Commentariat offering declines if the Commentariat are not pulling their weight, even if Scott himself remains just as good as ever. In Why Do I Suck? Scott suggests that there is weak to no evidence of a decline in his writing quality, so I propose this review as something of a companion piece; is the (alleged) problem with the blog, in fact, staring at us in the mirror?

My personal view aligns with Verbamundi Consulting and many other commentors - I’ve enjoyed participating in both the SSC and ACX comments, and I haven’t noticed any decline in Commentariat quality. So, I was extremely surprised to find the data totally contradicted my anecdotal experience, and indicated a very clear dropoff in a number of markers of quality at almost exactly the points Scott mentioned in Why Do I Suck? – one in mid-2016 and one in early 2021 during the switch from SSC to ACX.

Setting Out the Case for Decline

There’s a pretty basic question that needs to be answered before we compare the Commentariat today to that of yesteryear. That question is - does ‘the Commentariat’ actually exist?

It is easy to understand what it means for Scott’s writing to have got better or worse over time, or to track the evolution of a specific commentor’s engagement with the blog. But in order to review ‘the Commentariat’ as a whole we would have to treat it as a single entity with discernible patterns and tendencies. I believe this approach is justified; the Commentariat has a distinct culture, voice and its own unique animal spirits that react to both Scott’s interests and the interests of the external world. Since it is not just generating random noise, it is possible to explore the Commentariat over time to build a case that its overall quality is declining (or not).

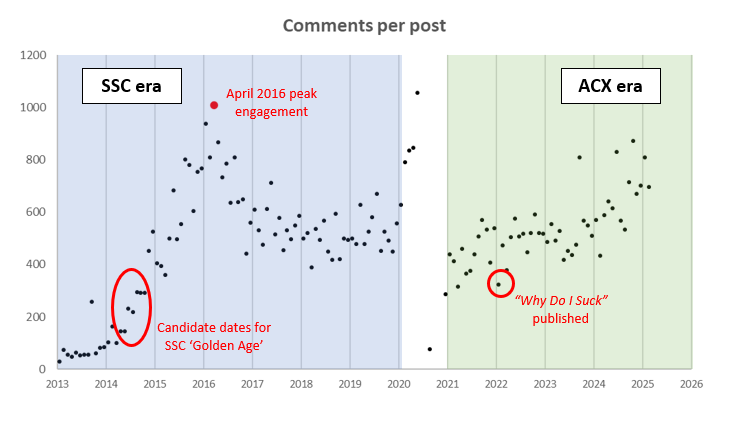

To demonstrate this, I have displayed below a graph of comments per post across the lifetime of the blogs. It may not be quite fair to say that ‘engagement’ is the same thing as ‘quality’, but I certainly think it raises a question that needs to be answered; something massively affects comment engagement in 2016 and then again in 2021.

In this graph, each datapoint represents a month that Scott has been blogging. A typical month will have between 15-20 posts, of which around half will be authored by Scott and half will be ‘authored’ in some way by the Commentariat, which are mostly Open Threads. I’ve averaged by month because certain types of post get much less engagement than others, and so looking at individual posts ended up too noisy to make attractive graphs (the true goal of any honest statistician).
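As a rough illustration of the monthly averaging described above (a sketch only, not the code actually used for this review), the function below groups posts by calendar month and averages their comment counts. The `(date, comment count)` input format and the sample numbers are assumptions made up for the example.

```python
from collections import defaultdict
from statistics import mean


def comments_per_post_by_month(posts):
    """Average comments per post for each calendar month.

    `posts` is an iterable of (iso_date, n_comments) pairs, one per post.
    This input shape is hypothetical; the real scrape may look different.
    """
    by_month = defaultdict(list)
    for iso_date, n_comments in posts:
        # "2016-04-15" -> "2016-04": bucket each post by year and month.
        by_month[iso_date[:7]].append(n_comments)
    return {month: mean(counts) for month, counts in sorted(by_month.items())}


# Invented sample data: two posts in one month, one post in the next.
sample_posts = [
    ("2016-04-02", 900),
    ("2016-04-15", 1100),
    ("2016-05-01", 400),
]
monthly = comments_per_post_by_month(sample_posts)
```

Averaging within a month smooths over the gap between, say, an Open Thread and a short links post, at the cost of hiding which individual posts drove the total.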

The SSC-era is highlighted in blue. You can see that it shows something a bit like a classic sigmoidal adoption curve (but wearing a top hat). Post engagement starts low, before rapidly shooting up in 2014-15. It peaks in April 2016 – which is highlighted in red in this and all subsequent graphs so you can track peak engagement - before dropping back to a steady level of around 400-600 comments per post for the next three years. Notably, the run of posts that most people regard as being the ‘Golden Age’ for Scott’s writing happens much earlier than peak engagement with the comments section. People disagree about where this run of exceptionally good posts in quick succession starts and ends, but I think you could safely say it has definitely begun by the time of The Control Group is Out of Control (although I would date it a little earlier, personally) and ends with either The Toxoplasma of Rage or Untitled – basically 2014 has a high density of ‘important’ posts.

There’s then a white band representing the NYT unpleasantness where the blog was briefly on a hiatus, and the posts in that period were very weird (statistically speaking). I won’t say much about this period in my review.

The ACX-era begins in 2021 and is highlighted in green. You can see engagement starts lower than the SSC steady-state of 400-600 comments per post (maybe more like 300-400 per post) but increases over time to at least that level by 2024, getting close to the peak engagement era. In one of life’s small ironies, Scott wrote Why Do I Suck? at close to the lowest period of engagement the blog had experienced for nearly a decade.

My key conclusion is that someone who says they preferred what the comments section used to be like is not (necessarily) just being curmudgeonly – something really did happen between pre-2016 SSC and post-2016 SSC, and then again between SSC as a whole and ACX as a whole, which caused a lot of people to disengage from the comments section. Furthermore, we would expect engagement to track quality quite closely (because people don’t want to engage with a bad comment section), and so a very strong hypothesis for an otherwise unexplained drop in comment engagement is a corresponding drop in Commentariat quality.

Interestingly, after a few years of lower engagement than steady-state SSC, engagement with ACX is trending upwards at the moment. If you were optimistic, you might even say that the early signs are that 2025 is showing the first bit of the fast-growth section of a sigmoidal adoption curve. If this initial trend continues, the ACX Commentariat will surpass the peak of SSC Commentariat around lunchtime on the 27th July this year, so mark that in your calendars.

Commentariat Quality – A Deep Dive

‘Professional’ reviewers – a thousand curses heaped upon their name – often rely on vague and idiosyncratic measures of quality. This may be appropriate for trivialities like literature and music, but when it comes to important things like the ACX Commentariat I’d prefer to follow good Commentariat norms and use clearly defined objective criteria in my review. I’ve therefore broken down comment quality into four key factors that, in my view, define the Commentariat’s unique character:

Depth of engagement with a topic – When the comment section is good, it is characterised by people taking time to uncover each other’s views and identify genuine disagreement, rather than just rehearsing tribally-coded talking points or making incendiary ‘drive-by’ comments and disappearing.

Freedom of intellectual engagement – A feature which many people appreciate about the SSC/ACX comments section is the freedom to discuss socially or professionally sensitive ideas (i.e. ideas which could get you sacked from a University if you expressed them publicly). If the Commentariat are censored or self-censoring, they lose this unique feature, which is part of what makes ACX better than other blogs.

Politeness – Perhaps more than any other blog, the Commentariat considers itself to be a ‘polite’ place, where people are afforded a fair opportunity to discuss ideas. There are strong community norms towards politeness, even when engaging with very emotive topics. Other websites have free speech norms (such as 4Chan or early-days reddit), but ACX is unique in having strong norms both for free speech and politeness.

Complexity of thought – Perhaps the most important feature distinguishing the ACX Commentariat from other, lesser, blogs is that some really smart people comment here and give novel and well-nuanced takes on a topic. If this ever disappeared it would not matter about any of the other three features, because the Commentariat would effectively be dead anyway.

To me, these broad categories represent the unique and positive features of the SSC/ACX Commentariat, and the extent to which they are present is a reasonable indicator of comment section quality, especially if they are all present at the same timepoint and that timepoint happens to line up with peak engagement in 2016 (this is foreshadowing).

To generate data on the ACX Commentariat, I scraped the comments section of every post Scott has made since 2013. The Old Ones whisper of a blog that existed before even Slate Star Codex, but since I’m not 100% certain we’re encouraged to talk about the older blog (and nobody dates the golden era of Scott’s writing to pre-2013 anyway) I kept my scraping to just the two websites we’re definitely allowed to talk about; Slate Star Codex (SSC) and Astral Codex Ten (ACX). The main points of failure with my scraping were Subscriber-only threads (which my algorithm virtuously refused to read as it wasn’t a subscriber) and battling with the Substack UI to get all the comments to load for me simultaneously on larger threads. Nevertheless, between my incompetent code and the jaunty Substack UI I only dropped a few comments on even very long threads, so I figured the data scrape would be adequate for the use-case I had for it. I then used a bunch more janky code (some written by me, some written by ChatGPT) to try and quantify the levels of depth, freedom, politeness and complexity of each comment.

I captured 2460 individual posts, and approximately 1.8m comments. Of the 24,486 unique comment authors, around 40% have made only one comment to the blog. The most prolific poster is the irrepressible Deiseach, at 20,685 contributions. Deiseach is also the only commentor to have made a comment on both the first post in my sample and the last, so has been with the blog a very long time! Only one other commentor has made more contributions than Scott (11,249), and this is John Schilling (11,607). The quality of data on individual users is not great for the ACX era (Substack seems to record missing author data in a few different ways, and sometimes swallow data for no reason) but I’m happy to give the rank ordering of anyone else who cares to know their specific level of clout in this niche community - I myself am the 799th most prolific contributor to the comments section (225 comments).

I’m also delighted to share my raw data with anyone interested – the summary statistics per post are here. The scraped comments themselves are about 2GB so I don’t know where I can host them but if anyone has any ideas (and Scott doesn’t mind) I’ll share them too. I know that some of the post titles seem to have turned into hieroglyphics, but as far as I can tell it is cosmetic only and won’t affect any of the actual data – it is a symptom of a cool hidden feature of Microsoft Excel where it opens UTF-8 encoded CSVs in a way that garbles special characters for no particular reason.

Considering each of these factors in turn:

Depth of engagement with a topic

Depth of engagement matters for several reasons, but the most important is simply that it shows people are getting enough out of a discussion to keep participating - a strong marker of a high-quality Commentariat. This is especially relevant in the ACX context, where many commentors don’t fit neatly into predefined categories like ‘Democrat’ or ‘Republican.’ As a result, discussions often require more time to clarify positions and establish common ground before real debate can begin. In that sense, depth also serves as a proxy for the number of interesting and non-standard voices present, which is itself a sign of a healthy and valuable comment section.

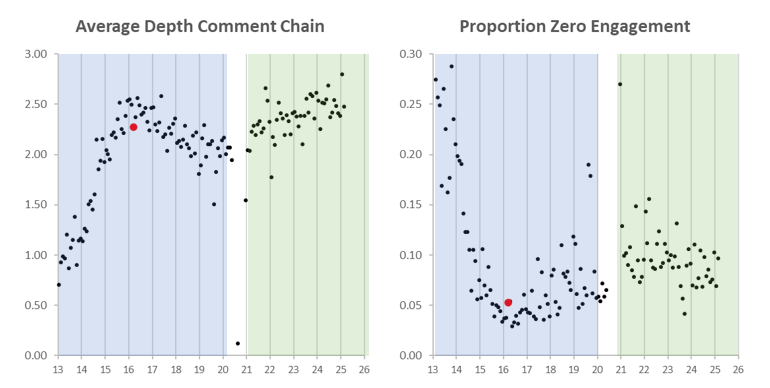

To operationalise this idea, I looked at the average depth of a comment chain (that is, suppose you took a random comment from anywhere within the comment section of a particular month – how many parent comments would that comment have?). Apparently professional data scientists sniff at this measure because it over-weights very deep back-and-forth between two people, vs many shallow engagements with a top-level post (which I guess is optimal for brand engagement or something) but in an SSC/ACX context deep discussion between two people is actually desirable, so I kept the simple approach. I have also considered the number of top-level comments which get no responses as a marker of ‘drive-by’ posting – commentors who just fling low-effort comments off into the void with no expectation of adding constructively to the discussion.
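To make the two measures concrete, here is a minimal sketch, assuming each thread can be represented as a mapping from comment ID to parent ID (with `None` for top-level comments). That representation, the function names and the toy thread are all hypothetical; this is not the scraper's real data format. It computes the average number of parent comments per comment, the summed depth of the thread, and the share of top-level comments that received no reply.

```python
def comment_depth(comment_id, parent_of):
    """Number of ancestors a comment has (0 for a top-level comment)."""
    depth = 0
    parent = parent_of[comment_id]
    while parent is not None:
        depth += 1
        parent = parent_of[parent]
    return depth


def thread_stats(parent_of):
    """Depth and 'drive-by' statistics for one thread.

    `parent_of` maps comment id -> parent id, or None for top-level comments.
    """
    depths = [comment_depth(c, parent_of) for c in parent_of]
    # Any comment that appears as someone's parent has at least one reply.
    replied_to = {p for p in parent_of.values() if p is not None}
    top_level = [c for c, p in parent_of.items() if p is None]
    unanswered = [c for c in top_level if c not in replied_to]
    return {
        "mean_depth": sum(depths) / len(depths),
        "total_depth": sum(depths),
        "zero_reply_share": len(unanswered) / len(top_level),
    }


# Toy thread: a -> b -> c is a three-comment chain; d and e get no replies.
toy_thread = {"a": None, "b": "a", "c": "b", "d": None, "e": None}
stats = thread_stats(toy_thread)
```

Note that the zero-reply share here is taken over top-level comments only; taking it over all comments instead would be an equally defensible choice and would give different numbers.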

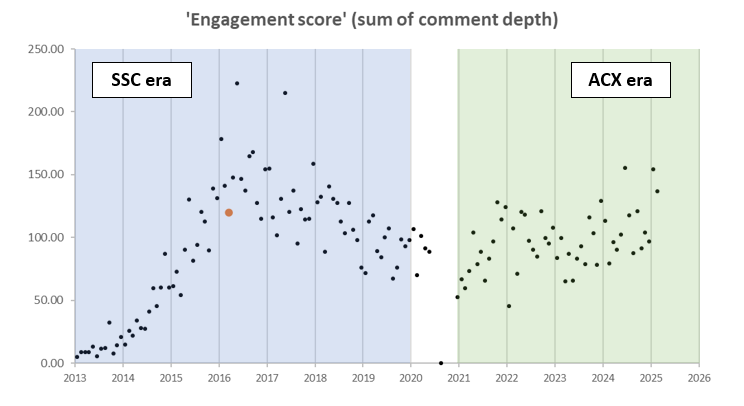

The average depth of a comment chain is actually highest now, in 2025. However, the proportion of comments with zero replies was lowest during 2016, and has been creeping up steadily since – meaning that the proportion of commentors who find themselves just screaming into the void with no response has increased since 2016. I don’t precisely know how to weight ‘discussions are good when they get going’ vs ‘discussions are easy to get going’, but we could try and capture some of the intuition here with a compound ‘engagement score’ (for example below I show the sum of all comment depth in a thread). Regardless of exactly how you operationalise it, it is reasonable to say 2016 was a strong period for depth of engagement, and that engagement markers have been trending upwards since the start of ACX, reversing a decline seen in the later SSC-period.

I treat more engagement as an unalloyed good, but this might actually not be true. A recurring discussion which occurs in the Commentariat is whether it has become too big to have a reasonable conversation.

For example, benwave writes:

One thing I do think has seriously gone downhill for me personally is the participation aspect, and that's just because the comments section has just gotten tooooo biiiiiig. Getting your comment noticed is hard, keeping up with the others is hard and lately I've just given up trying. The comments here used to feel a lot like an epistemic little league, and I adored that.

I raise this because it is easy to see how too much free speech could be polarising, or too much politeness stifling – but I wanted to flag that a good comment section seems to lie in a Goldilocks zone of pretty much every dimension. I also love the idea of an ‘epistemic little league’ happening below the primetime event of Scott’s posts.

Freedom of intellectual engagement

Freedom of intellectual engagement matters because people describe the SSC/ACX forum as one of the only places they can go to get honest critique of prevailing intellectual orthodoxy. Respectful discussion of highly emotive topics is a unique feature of the Commentariat which is not replicated in heavily censored spaces (especially in meatspaces where you can suffer very real harm for expressing a view which is seen as locally unacceptable).

For example, bruce writes:

I don't think Scott's quality has changed much, but the comments section used to be a lot more right-left confrontational. If that comes back the place will probably be purged.

This captures two main ideas very neatly (thank you, bruce) – that the ACX Commentariat was good in the past because it was honest and confrontational about major political cleavages in the Anglosphere world, and is not so good now because it has to be heavily censored to avoid cancel culture.

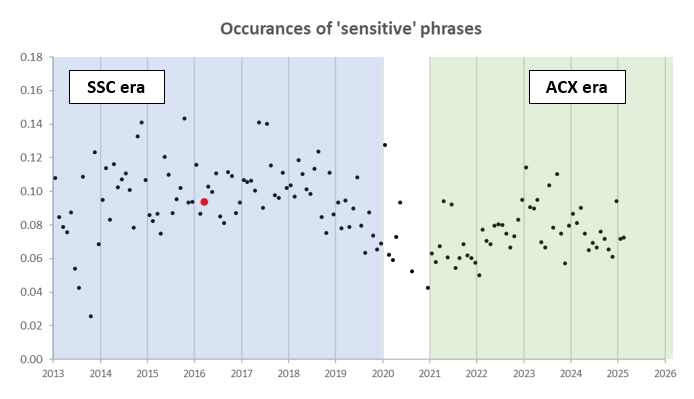

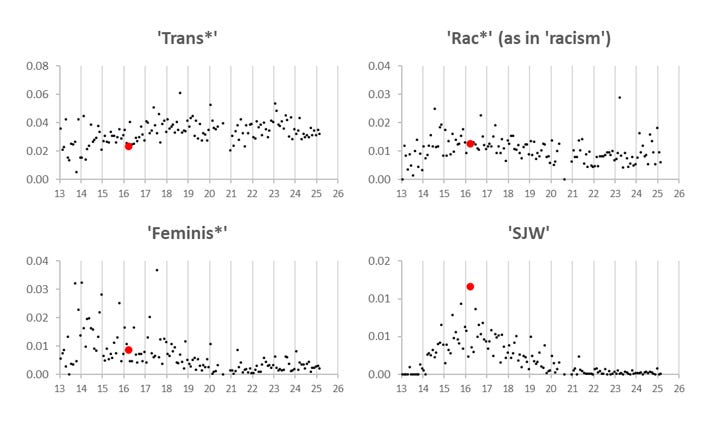

To operationalise a test of whether this was true, I built a dictionary of phrases which I will euphemistically describe as ‘socially or professionally sensitive’. I then searched the comments I had scraped for occurrences of any word in my dictionary, and counted the proportion of comments which contained a ‘sensitive’ token. To give a sample of some of the words in my dictionary, around half of all ‘sensitive’ tokens in the comments ended up being one of either ‘trans*’, ‘feminis*’, ‘immigra*’, ‘race’ / ‘racis*’ or ‘climate change’ (the * means I didn’t care about what followed that set of letters, so for example ‘transgender’ and ‘transsexual’ are both covered but, annoyingly, so are ‘transparent’ and ‘transport’, which I only spotted just now). The graph of my output is below.
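A minimal sketch of this kind of dictionary search is shown below. It is an illustration under assumptions rather than the actual code: only the handful of patterns quoted above are included (the full dictionary is not reproduced in this review), the regex-based matching is one possible implementation, and the toy comments are invented. It also reproduces the ‘transparent’ false positive.

```python
import re

# Only the sample patterns quoted in the text; the real dictionary is larger.
SENSITIVE_PATTERNS = ["trans*", "feminis*", "immigra*", "race", "racis*", "climate change"]


def compile_dictionary(patterns):
    """Turn 'stem*' patterns into one case-insensitive regex."""
    parts = []
    for pattern in patterns:
        if pattern.endswith("*"):
            # 'trans*' -> 'trans' followed by any run of word characters.
            parts.append(re.escape(pattern[:-1]) + r"\w*")
        else:
            parts.append(re.escape(pattern))
    return re.compile(r"\b(?:" + "|".join(parts) + r")\b", re.IGNORECASE)


def share_with_sensitive_token(comments, regex):
    """Proportion of comments containing at least one dictionary match."""
    hits = sum(1 for text in comments if regex.search(text))
    return hits / len(comments)


regex = compile_dictionary(SENSITIVE_PATTERNS)
toy_comments = [
    "Immigration policy is hard to discuss calmly.",
    "The budget process should be more transparent.",  # false positive
    "I liked the book review.",
    "This is a point about climate change.",
]
share = share_with_sensitive_token(toy_comments, regex)
```

A stricter variant could list the intended expansions explicitly (‘transgender’, ‘transsexual’ and so on) instead of using a wildcard, trading some recall for fewer false positives.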

This graph shows that around 9% of comments will contain at least one token indicating the comment is discussing a sensitive topic, with a range of about 6% to 14%, disregarding the very early years where small sample size made the data more variable. There wasn’t any one ‘sensitive’ token in particular which correlated exceptionally well with the rise and fall of this 6% to 14%, which implies to me that we have correctly identified a general factor of ‘willingness to discuss sensitive topics’ (or possibly that the peaks and troughs correspond to peaks and troughs in the external landscape – i.e. specific touchpoints and lulls in the Culture War – which would also be fine for the purpose we’re putting it to).

This is an imperfect measure because it only tracks if someone is using a sensitive phrase and not whether they are using it in a heretical way (cf. ‘fifty Stalins’ here). However, I thought in the context of ACX posts the approach was probably reasonable – sensitive phrases are only likely to appear if they are being discussed a lot, and we know from the previous section that discussion depth is high both now and during the 2016 peak engagement period. It isn’t necessarily true that deep discussion implies spirited debate - some political discussions on reddit can go into the thousands of comments without anyone ever actually expressing a counter-orthodoxy view – but I think in the specific context of ACX it is reasonable, because we don’t generally have norms of expressing substanceless agreement. Hopefully, therefore, the changing ratio of socially or professionally sensitive phrases to phrases not included in my dictionary would tell us something about the willingness of the comment section to engage in potentially emotive discussions at any point in time.

The relationship of occurrence of these tokens to engagement with the comment section is hard to draw clear conclusions from – although the peak does indeed look to be about 2016 or 2017 the data are noisy, and strongly affected by the choice of words to include in my dictionary. I picked the dictionary before I saw the data, but perhaps a different set of words would have given a different result, especially if I had a better way of identifying sensitive discussions around COVID (‘ivermectin’ was the only COVID-related word I could think of that became politicised in the same way ‘microaggression’ or ‘misgender’ did). Nevertheless, I would say this gives some weak support to the idea that 2016 was a turning point in SSC Commentariat free speech norms (and strong support to the idea that the start of ACX was a low point for discussion of sensitive topics).

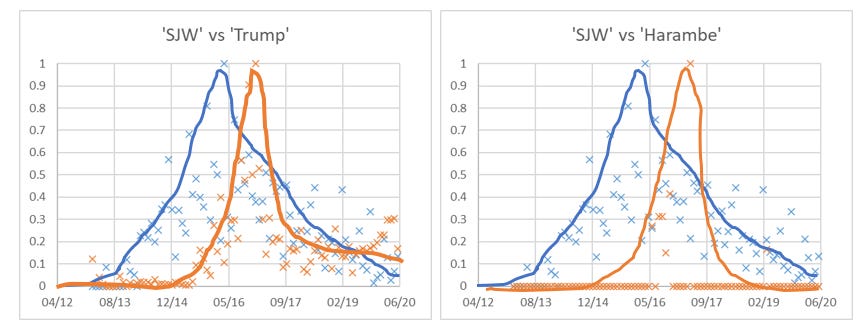

I include below a few specific sensitive phrases which I thought were interesting. Do note the different scales on each graph. Of particular interest to me is the ‘SJW’ graph, which has a really clear peak at exactly the high point of Commentariat engagement. I will return to this graph later in the review.

Politeness

One of the most appealing aspects of the ACX Commentariat, to me, is that ideas respectfully presented are respectfully engaged with – even when mainstream cultural commentors use this as a stick to beat ACX with. Strong community norms for niceness, community and civilisation are very rare in online spaces, so the ACX Commentariat may be especially sensitive to fluctuating levels of politeness.

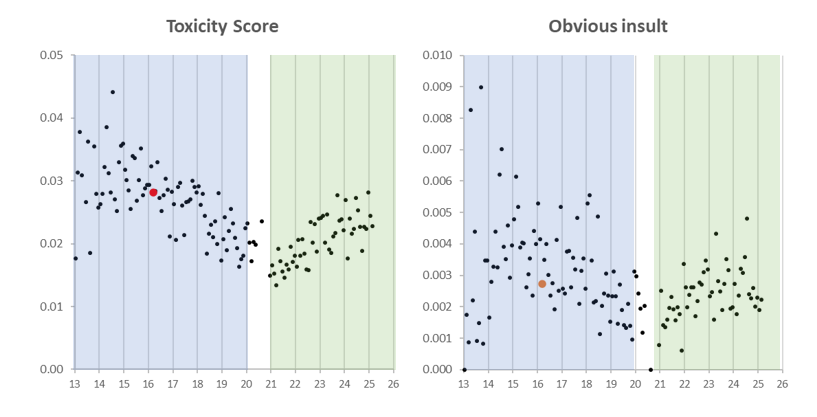

To operationalise this, I used a pretrained neural network built to identify ‘toxicity’ in comment sections. The model, produced by the online safety company Unitary, is named ‘toxic-bert’ and identifies potentially impolite comments along a few axes of inappropriateness, for example general toxicity, profanity and threats. I wasn’t quite sure if some of the routine discussions SSC/ACX has on socially or professionally sensitive ideas might trip the ‘toxicity’ filter even when they were respectful and polite, so to test for this, I included a sense check of occurrences of some words which are very rarely uttered in constructive contexts – specifically ‘dumbass’, ‘fuck you’, ‘fucking’ and ‘retard’. I’ve called these ‘obvious insults’ even though in hindsight that’s a bit strong, and they have all been used in non-toxic contexts at some point or the other by the Commentariat. For example, here is an entirely non-toxic comment by Paperclip Minimiser using the word ‘retard’ in the sense of ‘to slow down progress’ (toxicity score < 0.001) and here is a comment by nydwracu which uses the word ‘retarded’ as a slur but which is nevertheless so insightful that it was awarded ‘Comment of the Week’ status by Scott, suggesting that a little bit of toxicity as a literary device can sometimes be overlooked by both toxic-bert and Scott (toxicity score = 0.01).

The graph above shows the output of these two approaches. This is a really weird result, which defies easy explanation. Toxicity goes down over the whole SSC era, then starts ticking back up again from the ACX era. If you allow for a bit more variability in the simpler measure, the fancy neural network closely tracks the number of times we call each other ‘retards’ or ‘dumbasses’, which you would expect to track overall toxicity quite closely. This suggests the neural network is keying in on actual toxicity, rather than polite discussions which happen to involve contested or sensitive concepts.

One caveat is that the ACX Commentariat is not very toxic to begin with, so the ‘toxicity’ metric may not be sensitive enough to capture the sort of politeness which the Commentariat values. In 2013, at peak toxicity, the toxicity score maxed out at 0.04 (the spike in October 2013 seems to be related to attracting some external neo-reactionaries (very roughly the precursor ideology to the modern alt-right) to comment on this post). In 2021, the lowest toxicity ever was reached at around 0.01. This means that a typical comment would be around 4% likely to be perceived as toxic by a human reader in 2013, but by 2021 this has fallen to 1%. Here is a snippet of a comment which is rated as having a 1% chance of being perceived as toxic by a human, written by John Schilling:

The purpose of war is, roughly speaking, to settle the question of whose police get to enforce which laws in a region, and since Catalonia isn’t going to do anything more than say, “We’re going to make you look like Evil Meanies on TV and Youtube if you don’t pull back your policemen and let us have our own”, that point is moot. [Link]

By contrast, if you promise to draw your fainting couch nearby, here is a snippet of a comment which is rated as having a 4% chance of being perceived as toxic by a human, written by Maximum Limelihood:

Being fired means nothing about the speed you’re learning at. It means that the employer overestimated how much you *already* knew. …. Unfortunately, it doesn’t matter how great you’ll be at coding in a year when you’re costing me time and training effort today [Link]

The most toxic the comments section has ever got (beyond the very early days) was on the post Gupta on Enlightenment. I feel like the comments section on this post should be part of the ACX main canon because it is so cosmically hilarious. It concerns a man named Vinay Gupta (founder of a blockchain-based dating website) and his claims to have reached enlightenment. Some people in the comments are sceptical that Vinay Gupta is indeed an enlightened being, citing that enlightened people don’t typically found blockchain-based dating websites. A new forum poster with the handle ‘Vinay Gupta’, claiming to be Vinay Gupta and writing in a very similar style to the actual Vinay Gupta, turns up and starts arguing with everyone in an extremely toxic way (in the objective sense that his comments score very highly on the toxic-bert scoring system), which provokes more merriment that a self-described enlightened being would deploy such classic internet tough-guy approaches as ‘I don’t think much of a four-on-one face off against untrained opponents’ (link) and ‘this board is filled with self-satisfied assholes who feel free to hold forth on whatever subject crosses their minds, with the absolute certainty that they’re the smartest people in the room’ (link, no further comment…). More prosaically, this is a great example of what I was discussing earlier – the comment section is usually so civilised that a single individual turning up and acting out of the Commentariat norms is enough to make it the most toxic discussion which has ever taken place.

Of Scott’s classic posts, the most toxic the comment section has ever become was on Radicalising the Romanceless. The least toxic the comments section has ever been are the posts on Scott’s conworld, Raikoth (technically the Raikoth post on history and religion specifically, but the whole series is so good I’ve linked to the index).

Complexity of thought

Complexity of thought is important because it indicates the effort being spent on each comment. Effort being spent on comments indicates that the Commentariat is treating each other with respect and thoughtfulness (or at least that’s my hypothesis).

This is a very hard one to rigorously quantify, but so help me we’re going to hold hands and give it our best shot together. Defining the complexity of thought is something which has defied philosophers for millennia, so instead I will look at the complexity of language, assuming this is a proxy for the care and attention (not to mention intellectual calibre) being put into a comment. I have looked at a few features of language which I thought might capture this idea.

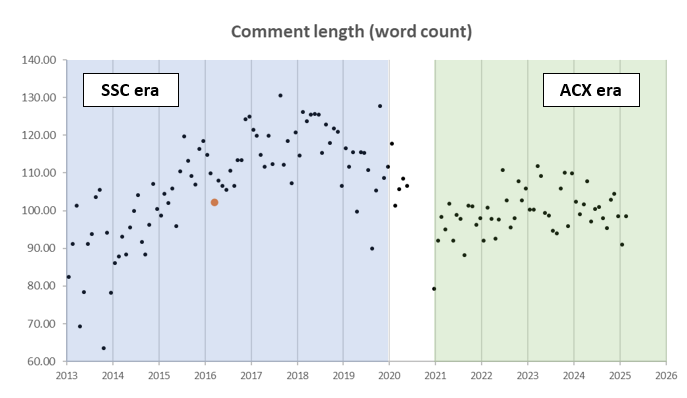

Complexity Approach 1 – Length of comment

First and most importantly, I looked at how long a typical comment was. A long comment, I reasoned, was a good sign someone had spent some time thinking about what they wanted to say and took some time to actually say it. It is a running joke that Scott is extremely wordy, but this is also true of the Commentariat, for the most part – a typical comment is around 110 words, or slightly longer than this paragraph. In the graph below, we do see a clear peak, but the peak occurs in 2017 (so about a year later than the period of maximum engagement).

Complexity Approach 2 – Occurrence of complex words

Second, I looked at the occurrence of complex words – both via a test of individual word length and through the use of the ‘SMOG Index’ metric (detail), which basically tracks multisyllabic words – it is a Simple Measure of Gobbledygook. I figured both of these would show more complex comments, which required the use of jargon and compound phrases to express properly. This shows a longstanding trend towards shorter words over time (the effect is slight, but it seems to be speeding up since moving to ACX) and a clear peak in multisyllabic word usage at around 2017 – basically exactly the same time as comments reached their maximum length.

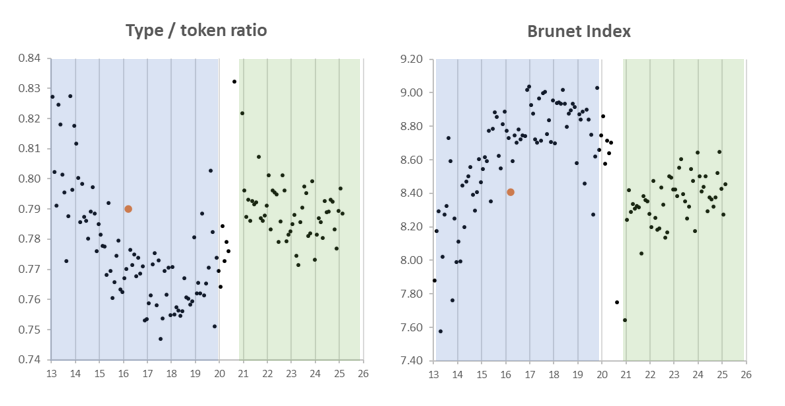

Complexity Approach 3 – Lexical diversity

Third, I looked at sentence construction. The type-token ratio is a simple measure of vocabulary richness, calculated by dividing the number of unique words (types) by the total number of words (tokens) in a text. This measure has a very specific problem in the context of the Commentariat in that it becomes less useful as comments become longer (because the chance of repeating a word increases). Therefore, I’ve also used the ‘Brunet Index’, which I know nothing about but which Google tells me fixes this problem. The two measures have an inverse relationship to each other – type/token ratio measures lexical variety, Brunet Index measures lexical staleness. Weirdly, we do see a clear trend for a peak occurring in 2017 like the other complexity measures, but this trend is in the opposite direction to what we might expect – the time of maximum comment length / word complexity was also the time of maximally stale vocabulary.
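For readers who want to see the two measures side by side, here is a small sketch. It assumes that ‘Brunet Index’ refers to Brunet's W, usually written as N ** (V ** -a), where N is the token count, V the type count and `a` a constant commonly quoted as 0.165 or 0.172; the review does not say which implementation or constant was used, so treat both as assumptions. The tokenizer is deliberately crude and the two sample texts are invented.

```python
import re


def tokenize(text):
    """Very rough tokenizer: lowercase runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def type_token_ratio(tokens):
    """Unique words divided by total words; higher means more varied."""
    return len(set(tokens)) / len(tokens)


def brunet_index(tokens, a=0.165):
    """Brunet's W = N ** (V ** -a); higher means a staler vocabulary.

    The default constant is an assumption, not taken from the review.
    """
    n = len(tokens)
    v = len(set(tokens))
    return n ** (v ** -a)


# Two invented nine-word texts: one with no repeats, one with many.
varied = tokenize("the quick brown fox jumps over a lazy dog")
repetitive = tokenize("finite or infinite finite or infinite finite or infinite")

ttr_varied, ttr_repetitive = type_token_ratio(varied), type_token_ratio(repetitive)
w_varied, w_repetitive = brunet_index(varied), brunet_index(repetitive)
```

With equal lengths, the repetitive text scores lower on type-token ratio and higher on Brunet's W, which is the inverse relationship described above.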

I think, in hindsight, that this is reflecting a unique characteristic of the ACX Commentariat, which is that it is unusually likely to develop an idea or conceptual schema rather than just asserting something and moving on. For example, here’s a Comment of the Week where Anatoly spends a very long time explaining the different meanings of ‘infinite’ and ‘finite’ in the context of explaining why P=NP is a difficult problem. It has a Brunet Index of 14.3 (so a little less than double the local average) because it repeats the words ‘infinite’, ‘finite’ and ‘algorithm’ many times. But I agree with the responses that this is a great comment, and exactly the sort of thing which only the ACX Commentariat seems to produce with any regularity. For a more recent example, here’s another comment of the week by Benjamin Jolley which adds some details to Scott’s post The Compounding Loophole, and is also clearly a great post which fits very well into the Commentariat corpus. So my conclusion here is that documentation for these tests assumes that stale vocabulary is always bad, because it expects you to be using the tests on – for example - novels. However, stale vocabulary isn’t inherently good or bad, and in this case it serves as a marker for something the Commentariat like or value. Anecdotally, it looks like what the Commentariat value is something like ‘well defined terms’. Even if this doesn’t map cleanly to something we can point to, there’s no accounting for taste - if the Commentariat just happen to prefer lengthy stale sentences there’s nothing actually wrong with that. Therefore, this measure is consistent with the other measures of complexity even though it very clearly shows the opposite relationship than I expected.

Just for fun, I thought I would show the most repetitive comment ever written. This was actually slightly difficult as there are a lot of things which are both comments and repetitive but which would be uninteresting to count (spam, code snippets, pasted text from early LLMs where the model hangs and repeats the same text to infinity). The most repetitive non-spam comment which I reckon was generated by humans alone is this comment by Deiseach, which quotes extensively from an early Irish law book (Brunet = 16.9). The most repetitive non-spam comment which I reckon has a single human author is this comment by Fahundo (Brunet = 16.5), giving the answer to a logic problem in ROT13 (so it possibly breaks the rule about not using a computer in the writing, but not in the way I meant!).

Complexity Approach 4 – Reading age

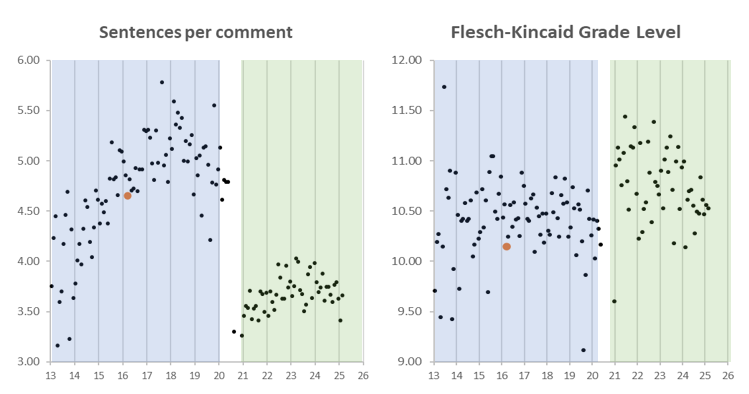

Finally, I looked at reading age, although this approach was largely unsuccessful. ‘Reading age’ is an approximate composite measure of the complexity of language and sentence construction in a piece of text. There are quite a few different measures of reading age, which all show roughly the same outcome in my data. The one I have depicted below is the Flesch-Kincaid Grade Level, which roughly tracks how many years of continuous schooling you would theoretically need to read and understand the text. The Commentariat is largely a very intellectual bunch and so a typical reading age of around 10.5 is unsurprising (a typical SSC/ACX comment is just barely less complex than an academic article in terms of vocabulary and construction, and the most complex comments significantly exceed this). The graph shows that comment complexity jumps by approximately half a grade level when SSC becomes ACX, but I’m a bit sceptical this is a ‘real’ effect. Most reading age formulae track sentence length very closely, and for some reason sentence length also changes significantly around this time. I could genuinely believe that sentence length changes on the switch to ACX, but I don’t think measures of reading age are designed to be valid if sentence length is changing for reasons unrelated to the complexity of text, so I don’t think you can confidently conclude the ACX comments are more sophisticated from this measure alone.
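To make the sentence-length worry concrete, here is a small sketch using the standard published Flesch-Kincaid Grade Level formula. The word, sentence and syllable counts are invented for illustration and are not taken from my data; counting syllables properly is its own problem, so the function simply takes the counts as inputs.

```python
def flesch_kincaid_grade(words, sentences, syllables):
    """Flesch-Kincaid Grade Level from raw counts.

    grade = 0.39 * (words per sentence) + 11.8 * (syllables per word) - 15.59
    """
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


if __name__ == "__main__":
    # Same hypothetical 100 words and 150 syllables, punctuated two ways.
    as_five_sentences = flesch_kincaid_grade(words=100, sentences=5, syllables=150)
    as_four_sentences = flesch_kincaid_grade(words=100, sentences=4, syllables=150)
    print(as_five_sentences, as_four_sentences)
```

The vocabulary is identical in both calls, yet the grade level rises purely because the same words are split into fewer sentences. That is why a shift in how people punctuate could move the reading age without anyone's comments becoming more sophisticated.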

Complexity - Conclusions

Overall, it is appropriate to discover that my measure of ‘complexity of thought’ is itself complex. We do see very clear peaks in the SSC era, but not actually quite in the place we expected to see them. Similarly, we don’t always see the peak in the direction we expect (sentences are long and stale in the peak SSC years, which doesn’t seem like a recipe to promote engagement). Finally, we have a puzzle about how the Substack UI/UX causes significantly fewer sentences per comment.

My conclusion here is that these data are completely consistent with a Commentariat who have a particular thing that they like, which peaked in 2017. This thing quantitatively looks like long stale sentences, but actually might qualitatively feel different – like for example careful definitions of words which are then used repeatedly. As for why the peak sentence length is after peak engagement, my best guess is that people didn’t stop engaging at random; the people with the strongest commitment to the Commentariat stuck around longest, and these are also the people with the most respect for SSC cultural norms (leave long, thoughtful comments) and willingness to dedicate time to commenting. I have heard this described as ‘evaporative cooling’ before. This group of ‘fanatics’ hung around for a bit longer than everyone else, but eventually either they mostly left too or their influence on discussion norms was not strong enough to prevent a reversion towards the comment section mean (which tends towards shorter and less rigorous comments).

What happened in 2016?

From the data I extracted, it is clear something happened to the Commentariat in 2016(ish) and again in 2021 with the switch to ACX. Of the four measures I presented:

Depth of engagement shows two clear directional reversals in 2016 and 2021

Freedom of expression shows a scruffy directional reversal in 2016 and a clearer one in 2021, and also looking at individual kinds of expression reveals very sharp peaks in 2016 for ‘SJW’ and related phrases

Toxicity scores show a clear directional reversal in 2021 (but nothing in 2016)

Complexity of thought measures show clear directional reversals on every measure except average word length (which has been steadily declining) in both 2017 and 2021. This would be great confirmation for the theory that quality declined in 2016 except you’ll notice that 2017 is a bit too late to explain that!

Overall, I’d say that all four of these measures point to a change which occurred when the Commentariat moved to Substack, and two-and-a-half point to a change which occurred in 2016.

To me, the ACX change is somewhat understandable – Substack has a different userbase, different UI and Scott started blogging there after a hiatus of nearly a year so he lost some of the momentum and norms established from SSC. The start of ACX also coincided with another wave of COVID cases, which in some countries at least will have significantly altered the ‘online-ness’ of the general population. So, I don’t think we need to look especially hard for why ACX comments are a bit different to SSC comments. I also don’t think we need to look especially hard for why the ACX comments seem to be gradually moving more towards looking like peak-SSC; it took three years for SSC to reach peak quality, so we could tentatively propose that there is some sort of inherent ‘bedding in’ time for new comment sections to feel out and formalise the norms they want to establish. Speculatively, perhaps Substack has a different mechanism for attracting readers than WordPress so the beginning of ACX featured a mix of SSC old guard and Substack newcomers, and it is taking some time for the community norms of the SSC old guard to assert themselves onto ACX.

The Commentariat seems capable of self-diagnosing the many ways in which the ACX change might have contributed to a decline in quality. For example, Moon Moth writes:

I would posit that, for all of Substack's good qualities, the commenting experience is worse here. Which may be coloring commenters' overall impressions. [Expanding on this in another comment they write] Substack comments take too long to load, especially on mobile. And on mobile, they reload and lose my place whenever I switch tabs or apps … Which makes me reluctant to do anything but skim on mobile.

And teddytruther writes:

I also expect that this selection effect took a huge bump from the NYT controversy, which drew people primarily interested in Woke War Punditry and not a long series of guest posts on Georgist land taxes.

The change which occurred in 2016 (and very specifically April 2016) is much less understandable to me. After some thought, I’ve come up with three possible hypotheses:

Scott’s writing got worse in April 2016, causing mass disengagement, which changed the makeup of the comments section

The user experience of the blog unrelated to Scott’s writing changed in April 2016, which changed the way in which the Commentariat engaged with the comments section (in basically an exact parallel to the ACX switch)

The ACX Commentariat has a very specific set of hyperfixations, and world events around that time meant they weren’t able to satisfy that hyperfixation through commenting, so their commenting got lazier / worse and never really recovered.

Considering each in turn:

Scott’s writing got worse

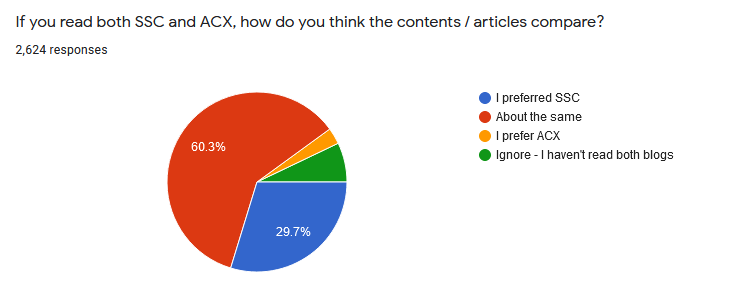

I kicked off this essay by referencing Why Do I Suck?, in which Scott presents some evidence that his writing has not got worse, replicated below:

However, this pie chart only considers ACX vs SSC, not pre-2016 SSC vs post-2016-SSC. It is therefore still maybe consistent with Scott’s writing getting worse in April 2016 and never recovering. This could straightforwardly explain the drop in Commentariat quality in 2016 (but not 2021), but the evidence for a decline in writing quality centred on this period is anyway very mixed.

April 2016 has some great posts (including the ‘classic’ The Ideology is Not the Movement), but there were a lot of good posts around that time - the very start of May 2016 includes another ‘classic’ in the form of Be Nice, At Least Until you can Coordinate Meanness. Nor can it be that readers somehow intuit that Scott has nothing more valuable to say on any topic going forward, because 2017 contains classics like Guided by the Beauty of our Weapons, or my personal favourite SSC-era post, Considerations on Cost Disease. Not to mention, of course, there are some cracking ACX-era posts which are nearly a decade away at this point.

In my head, the cleanest story is that a bunch of people became regular readers of the blog because they read Meditations on Moloch or another of the universally-loved posts that were linked everywhere and then left when they realised the median post was ‘merely’ as good as The Ideology is Not the Movement, but this story doesn’t make sense – you could certainly argue the toss about when ‘peak’ SSC was, but if you believe it exists you’d surely have to put it centred somewhere around 2014. This would mean that the group of people who are disappointed by Scott’s output would have to get interested in the blog in 2014, stick around through the whole of 2015, and then leave en masse in April 2016 despite 2016 (in my subjective opinion) being better than 2015 for ‘important’ posts.

Another point to consider is that the ‘Scott’s writing sucks now’ hypothesis needs to explain not only why engagement fell off in 2016, but also why multisyllabic words and type/token ratio peaked around that time. I think you can maybe tell a story where Scott’s writing gets worse in 2016 so people engage less with the comments (producing less comment depth and more zero-length comment chains) but it is very difficult to imagine how Scott’s writing getting worse produces more multisyllabic words. If Scott’s writing drives the disengagement, you have to start loading up the ‘evaporative cooling’ hypothesis with a lot of weird epicycles in order for everything to make sense at once.

In summary, I’m agnostic on the question of whether Scott’s writing has got worse. I personally don’t think it has (although the frequency of ‘hits’ was remarkable in 2014) but perhaps it has changed a bit over time. However, I’m reasonably certain that nothing Scott writes is the reason for the dropoff in engagement around 2016, because there’s no coherent story you can tell that fits that hypothesis. I think this is an unproductive sidetrack to consider in a review of the Commentariat specifically.

The user experience of the blog got worse

If Scott’s writing did not get worse, perhaps some other element of the blog did, which led to significant disengagement. For example, perhaps the moderation of SSC got more restrictive around this time or an incredibly obnoxious autoplay advert was introduced to the sidebar. We actually know that the Commentariat are quite sensitive to user experience changes, because Scott notes that Open Threads with an announcement get between 2-4x less engagement than those without. This theory seems very strong to me – the UX was one of the major complaints about early-days ACX, and it seems like you can explain the initial Commentariat stagnation and then flourishing for ACX with reference to the UX specifically.

For example, Vladimir writes:

My personal theory is that the ACX website itself is less enjoyable. SSC had it's personal charms: I had to pinch and zoom slightly to read the small text better on my phone, and the blue decorations were comforting, and the comments felt like early internet forums for some reason. Now, everything feels more bland.

And DinoNerd writes:

Personally, I find the ACX experience less good than that of SSC, but I can't disentangle how much of that is the substandard user interface.

However, there are no corresponding comments for some massive change that occurred in April 2016, and I know because I read thousands of comments from that period trying to find one to quote here. SSC has always had a fairly loose moderation policy (notwithstanding the occasional ‘reign of terror’ when Scott clamped down on bad commentors) and has worked hard to keep adverts unobtrusive. I remember the only major change to SSC UX was the highlighting of comments you hadn’t read yet (which was an excellent change but I think came later than 2016).

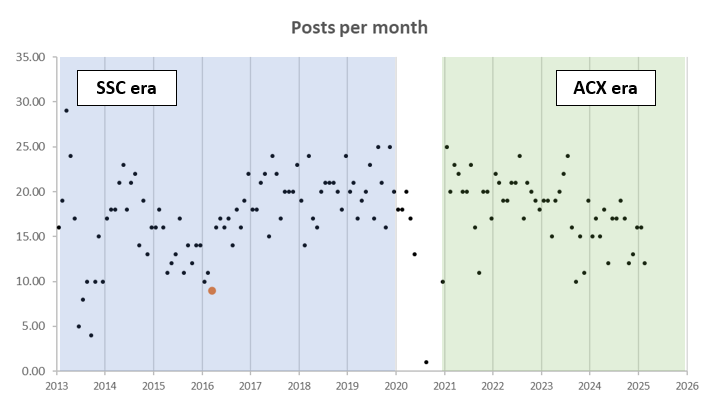

The best explanation I have found for a massive change in UX which occurred in April 2016 is that April 2016 had the lowest ever posts per month, probably driven by Scott travelling during this period and so having less time to post (link)

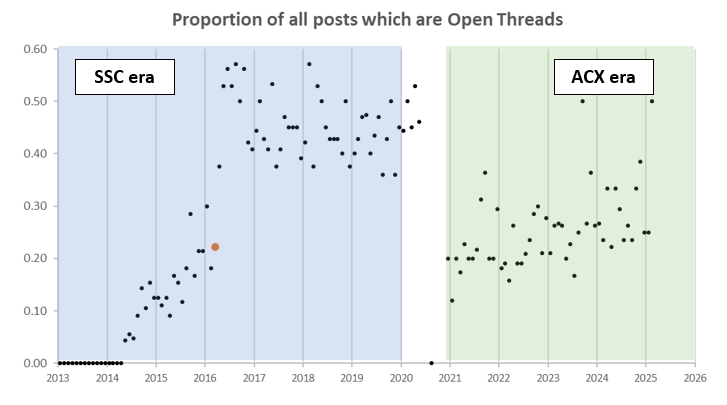

Following April 2016, posts per month spike sharply because Scott changes the frequency of Open Threads from biweekly to biweekly (or fortnightly to twice-weekly if you are going to insist on spoiling my joke). You can see an immediate impact on the proportion of each month’s posts which are Open Threads in the graph below. Note that the actual proportion of Open Threads in the ACX era is probably more like the later SSC era, it is just that my scraping algorithm didn’t catch the paid Subscriber-only Open Threads.

So, on this theory, the Commentariat have an approximately fixed amount of time they want to devote to interacting with the comments section each week. The more posts there are, the more thinly they spread themselves across those posts – even if the posts are not core Scott-generated blogposts but rather Open Threads. Perhaps this also explains the changing complexity of language too – quantity and quality of engagement are somewhat substitutes for each other, so in a world where there are more posts than time available to engage with them, the response is to cut back a little on both quantity and quality of engagement. This process could be self-reinforcing; if the community norms become for lower-quality engagement, then this could lead to people trading off quality for quantity even further.

This leads to quite an interesting corollary, which is that, if true, then to some extent Scott’s posts are irrelevant to the Commentariat’s enjoyment of Scott’s writing. The value of Scott’s writing is to act as a sort of butterfly net to catch the sort of people interested in Scott’s writing, and then once captured those people interact with each other in the comments section a basically fixed amount, regardless of how often Scott posts. I don’t know how fully I endorse this theory, but it is interesting to think about how long the Commentariat would remain good even if Scott’s writing genuinely did drop in quality.

The real world intruded on the Commentariat’s hyperfixations

In The Rise and Fall of Online Culture Wars, Scott notes that online feminism was absolutely everywhere from around 2014-16 and then just sort of… disappeared one day. This has some parallels (down to the timing) for engagement with the SSC Comments section – from 2014-16 engagement with the comments section seems to be on an unstoppable upward trajectory and then in April 2016 it just sort of… reverses.

I have already mentioned that April 2016 marked an extreme high-water mark for usage of the term ‘SJW’. From what I can see, there’s no particular reason for this specific to SSC – April 2016 has two threads with significant usage of the token, but they are completely random threads – OT47 and Links 4/16 (Links 4/16 does have a link about social justice warriors so that makes some sense, but OT47 doesn’t, so my conclusion is that there is just something that was in the water around that time). This theory says that the Commentariat really liked talking about SJWs, and when they were prevented from talking about SJWs they just stopped engaging with the blog altogether.

The problem with this theory is that there is nobody really preventing the Commentariat from talking about SJWs to their heart’s content after April 2016. In February 2016, Scott requested that all Culture Wars topics be quarantined to a single Culture Wars thread on the r/slatestarcodex subreddit (link). This seems like the most common-sense explanation for the observation that the comment section changes dramatically around this time - of course engagement and usage of the term ‘SJW’ falls off when usage of the term ‘SJW’ is quarantined to a single thread in an offsite forum. However, the major problem with this explanation is that it doesn’t fit the data – comment section engagement increases throughout February – April 2016 and only starts dropping in May, when as far as I can see there are no specific events occurring in the r/slatestarcodex subreddit to explain it. Also, in February 2019 the Culture Wars Thread was euthanised (link) but there is no corresponding uptick in comment section engagement as people migrated back from the Culture Wars thread to the SSC comments section.

I thought perhaps discussion of SJWs might have been drowned out by discussions of something else, such that it became passé to be discussing SJWs when there was some other Culture Wars issue at stake. This would mirror what happened to online feminism, where it became passé to discuss women specifically and more trendy to discuss intersectionality / race issues from about 2016 onwards. The obvious candidate for this switch is Trump and the rise of the MAGA movement. March 2016 was probably the last period where you could kind of convince yourself Trump wasn’t going to win the Republican Primary. In March 2016 it was just about possible Cruz could have won, but by April 2016 Trump was winning every Primary with decisive majorities. If you are slightly younger you may not have been online during that period, but I can attest that it was completely crazy commenting in political spaces around that time; I’d argue a strong candidate for the most toxic comments section ever is You Are Still Crying Wolf, where Scott offers some extremely guarded non-criticism of Trump, arguing that he was not unusually racist by American Presidential standards. This didn’t make my database because Scott nuked the comments for being too toxic, so we will never know mathematically how bad the comments were, but anecdotally they were pretty standout – closer to 4Chan than ACX in places.

The evidence for this hypothesis is kind of mixed – if you abandon all sense of statistical appropriateness you can freehand draw a line which kind of looks like the decline in ‘SJW’ tokens is mirrored by a rise in ‘Trump’ tokens when you normalise the two terms, but you can also do that with any other word that was trending in April 2016, like ‘Snowden’ or ‘Wikileaks’ (or ‘Harambe’ as per the graph below). Looking just at the data it isn’t really a very impressive correlation to draw.
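As a rough illustration of what ‘normalising’ does here, the sketch below rescales each term’s monthly share of comments so that its own peak equals 1. The monthly figures are made up for the example (they are not my scraped data), and dividing by the peak is only one of several reasonable ways to normalise.

```python
def normalise_to_peak(series):
    """Rescale a list of monthly shares so that the largest value becomes 1.0."""
    peak = max(series)
    return [value / peak for value in series]


if __name__ == "__main__":
    # Hypothetical monthly shares of comments mentioning each term (percent).
    big_topic = [2.0, 4.0, 8.0, 6.0]
    small_topic = [0.05, 0.1, 0.2, 0.15]

    print(normalise_to_peak(big_topic))
    print(normalise_to_peak(small_topic))
```

Once rescaled, the two invented series trace the same shape even though one is forty times larger than the other. Normalised curves can therefore say something about timing, but nothing about how much of the conversation a term actually occupied.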

I appreciate it is so boring to conclude that Trump is the Great Satan for the millionth time. However, I do think if you add in contextual factors there is reason to be cautiously supportive of a ‘Donald Trump killed the ACX Comments Section’ theory:

The volume of ‘Trump’ comments is absolutely massive - around 11% of all comments were about Trump in January 2017, which is greater than comments about Russia during their invasion of Ukraine (10%) and comments about COVID during the first few months of the pandemic (7%). Even a topic like SJWs, which the Commentariat really liked talking about, could only manage a peak of around 1.2% (although eg ‘gender’ peaks at 5.5% and ‘feminis*’ peaks at 3.7%). Concepts like ‘Harambe’ and ‘Wikileaks’ barely register on this scale, at 0.3% and 0.5% peaks respectively. So even though the shape of the two curves looks similar when you normalise them, it is reasonable to believe Trump could have had a significant enough impact on the comments section to dislodge forum norms, in a way Harambe did not.

Looking at the data for related terms makes it clear that a massive shift in discourse occurred around the time of Trump’s election – terms which were somewhat common before (like SJW) died out basically overnight, whereas terms which arose in the alt-right ecosystem spring up basically at the same time. Also of importance, there is no clear term that replaces ‘SJW’ until early 2017 (with ‘antifa’), and no equivalent term that sticks until ‘woke’ enters common parlance.

So this theory says something like: the Commentariat was uniquely well suited to discussing Culture War issues in 2016. These largely revolved around gender debates – men vs women, creeps and niceguys etc. The Commentariat developed very virtuous norms around Culture War issues, which was self-reinforcing as SSC developed a reputation as a place you could come to have interesting and nuanced discussion about Culture Wars topics. This virtuous Commentariat was fuelled by Scott, who would write thoughtful takes on concepts like trigger warnings, rape culture, harassment, etc, and who set the standard for what this sort of debate could look like.

Trump’s success fundamentally changed things; first, Culture Wars arguments became a lot more mainstream, because the President of the United States had made Culture Wars arguments a major part of his policy platform. So you didn’t have to come to SSC to see arguments about Culture Wars, you could just turn on the news. Second, a bunch of people who wanted to discuss gender norms specifically found their arguments hijacked to be about Trump (“How can you talk about X when Trump is doing Y?!”). Scott also blogged less about Trump (because he was travelling, and because I guess Trump didn’t interest him so much) so there was less guidance on what norms around Trump should look like, although admittedly not none [EDIT: This was true at the time I wrote it, but Scott has recently been blogging about Trump / MAGA movement a bit more. So we’ll have to see how that shakes out]. Therefore, it became harder to adopt virtuous discussion norms around Trump (which – parenthetically - seems to be a general effect Trump has on people, both pro and anti). However, as these virtuous norms were something people actually liked, losing them hurt the Commentariat.

Of the three arguments I have considered, I am mostly taken with a story where the change to Open Thread frequency led to a significant shift in engagement, and during the period Trump caused a massive external shock to the ecosystem containing SSC (something like ‘longform political discussion forums’). Both of these factors individually shifted commenting norms in a way people didn’t like, but both of these factors together meant that the norms didn’t recover as they might have done in the past – influential posters who might have previously guided community norms through an emotive period were burning out on the Open Thread frequency, while newer commentors were excited to use all the airtime available to them in Open Threads to discuss Trump and his platform to the exclusion of the rest of the Commentariat’s hyperfixations. After the discourse moved on from Trump about a year later (September 2017-ish) the Commentariat that remained had become stuck in a situation of ‘shoot from the hip’ commenting, where politeness was preserved by accepting less complex or divisive discussion, on average.

If true, this would be a very optimistic story to tell, because you don’t really see similar markers in 2024 when Trump II was elected, suggesting the Commentariat has worked out how to have mature discussions about Trump without swamping other topics they want to discuss. Similarly, Scott made no major changes to the comment section during that period, so it was able to adjust to post-Trump norms more naturally. Together, that suggests that the green shoots of improving comment quality we see in 2024 and early 2025 have no natural reason to reverse, and we could easily be seeing a Commentariat renaissance in progress.

Conclusions

I want to end with two concluding thoughts.

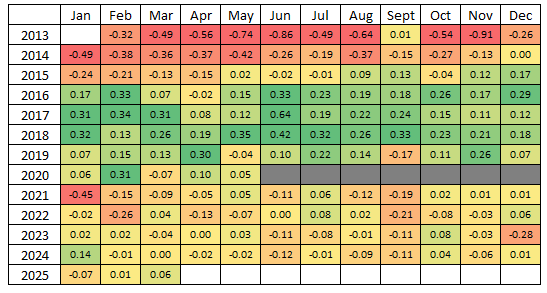

First, although I have meandered at times, this is supposed to be a review – I therefore reckon I need to come down on one side of the fence or the other on the question of whether the Commentariat now is better or worse than the Commentariat of 2016. I have constructed a composite measure of ‘Commentariat Quality’ from the list of four measures I described earlier – each measure given equal weighting - and applied them to every comment section.

If we believe that I’ve captured the four essential characteristics of the Commentariat well, then the best comment section there has ever been is on an ACC entry from 2018, Should Transgender Children Transition? (the best comment section on a Scott-authored post is also on trans issues, interestingly). The best individual comment which has ever been made is apparently this comment by neonwattagelimit. There’s nothing really wrong with this comment, and I can see why it scored highly on my algorithm - however I think it shows the limits of defining quality algorithmically, because I wouldn’t say it has the indefinable features that would make it a good candidate for (for example) Comment of the Week status.

The output of this scoring algorithm is recorded below. A score of zero means a perfectly average month, and positive scores indicate higher Commentariat Quality as defined by my algorithm. The cells are colour-coded so that stronger months are greener and weaker months are redder (grey cells are the blog’s hiatus, and don’t count towards the averages).
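For the curious, an equal-weighted composite of this kind can be sketched as follows. This is an illustration under stated assumptions rather than my exact pipeline: it assumes each measure is standardised as a z-score across months, that every measure has already been oriented so that higher is better (toxicity, for instance, would need its sign flipped first), and the measure names and numbers are placeholders.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardise values to mean 0 and (population) standard deviation 1.

    Assumes the values are not all identical, otherwise the deviation is zero.
    """
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]


def composite_quality(measures):
    """Average the z-scores of each measure, month by month, with equal weights.

    `measures` maps a measure name to a list of monthly values; all lists
    must cover the same months in the same order.
    """
    standardised = [z_scores(values) for values in measures.values()]
    return [mean(month) for month in zip(*standardised)]


if __name__ == "__main__":
    # Placeholder monthly values for two hypothetical measures.
    example = {
        "depth_of_engagement": [1.0, 2.0, 3.0],
        "complexity": [10.0, 20.0, 30.0],
    }
    print(composite_quality(example))
```

Averaging z-scores means each measure contributes equally regardless of its original units, and the composite averages out to zero across all months, which is what lets a score of zero be read as a perfectly average month.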

My review of the overall Commentariat is that there was indeed a ‘Golden Age’ of comments from around 2016-2018, which occurs slightly later than the period of peak engagement (April 2016). We are currently slightly above average in terms of quality, which I believe means we would rate a solid B, or perhaps even a B+. I’ve decided we deserve the B+ because signs are that the Commentariat is improving on most measures of quality so the trend is in the right direction, plus I love the Commentariat so I’m biased towards higher scores.

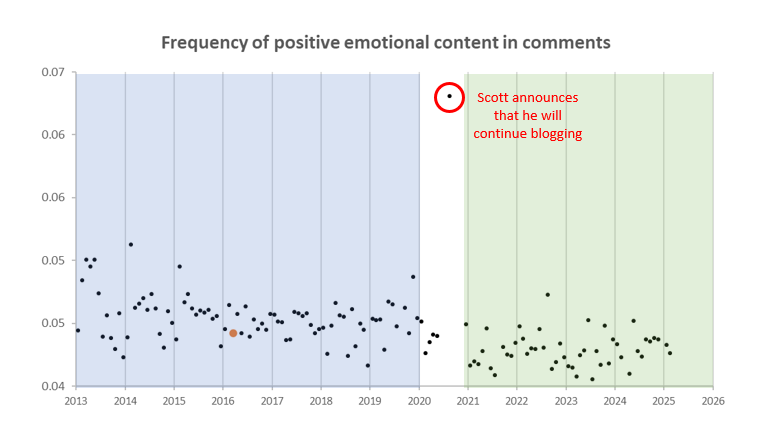

Second, in preparing the data for this essay I did some rudimentary sentiment analysis as a precursor to looking at ‘toxicity’ as its own thing. The results are not especially interesting (all expressions of emotion have been dropping in a linear fashion since 2013) but I came across a very interesting outlier I thought I would share. The graph below shows the frequency of comments which display positive emotional content – joy, excitement, anticipation etc. The massive outlier during the COVID era is this post where Scott announces he will begin blogging again after a several-month stretch where that did not look at all certain. For all that the Commentariat may have had some teething problems with the switch to ACX, I think this datapoint does more than the entire preceding essay at expressing how much I value the ACX Commentariat (and Scott himself) as providing a unique culture of lively, frank and polite discussion. I am looking forward to another decade of following the Commentariat, wherever they happen to be hosted.