I.

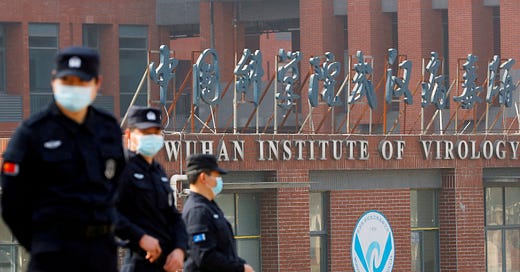

Does it matter if COVID was a lab leak?

Here’s an argument against: obviously there are lots of lab leaks and you should take lab safety really seriously. Given the amount of dangerous microbiology research, it seems pretty plausible that something like a COVID lab leak will happen at some point. So even if we learn that this particular pandemic wasn’t a lab leak, we should still worry that the next one is. It’s not like “if COVID is a lab leak, we need to worry about biosecurity - but if it’s not, then it’s fine, we should keep doing gain-of-function on dangerous viruses in lax conditions.” Start worrying now, and leave the questions about what exactly happened with COVID to the historians.

A good Bayesian should start out believing there’s some medium chance of a lab leak pandemic per decade. Then, if COVID was/wasn’t a lab leak, they should make the appropriate small update based on one extra data point. It probably won’t change very much!

I did fake Bayesian math with some plausible numbers, and found that if I started out believing there was a 20% per decade chance of a lab leak pandemic, then if COVID was proven to be a lab leak, I should update to 27.5%, and if COVID was proven not to be a lab leak, I should stay around 19-20%[1].

But if you would freak out and ban dangerous virology research at a 27.5%-per-decade chance of it causing a pandemic, you should probably still freak out at a 19-20%-per-decade chance. So it doesn’t matter very much whether COVID was a lab leak or not.

I don’t entirely accept this argument - I think whether or not it was a lab leak matters in order to convince stupid people, who don’t know how to use probabilities and don’t believe anything can go wrong until it’s gone wrong before. But in a world without stupid people, no, it wouldn’t matter. Or it would matter only a tiny amount. You’d start with some prior about how likely lab leaks were - maybe 20% of pandemics - and then make the appropriate tiny update for having one extra data point[2].

II.

Back in 2001, the motto was “9-11 changed everything”. Everyone started talking about the clash of civilizations, and how Islam was fundamentally opposed to the West. The government made a whole new Cabinet department around the theory that terrorism was now a giant threat and we needed to sacrifice our civil liberties to deal with it.

But terrorist attacks after 9-11 mostly followed the same pattern as before 9-11: every few years, someone set off a bomb and killed some people, at about the same rate as always. Islam stayed about as opposed to the West as it had always been (plus some extra because we spent a decade bombing them).

In retrospect, updating any of our beliefs - about Islam, about the extent of the terrorist threat, about geopolitical reality - based on 9-11 was probably a mistake.

I think it would have been possible to have gotten this right. Before 9-11, we might have investigated the frequency of terrorist attacks. We would have noticed small attacks once every few years, large attacks every decade or so, etc. Then we would have fit it to a power law (it’s always a power law) and predicted a distribution. That distribution would have said there would be an enormous attack once every (let’s say) 50 years. Then we would have calibrated the amount of resources we spent on counter-terrorism to a world with frequent small attacks, occasional large attacks, and once-per-50-years enormous attacks. We would have specifically thought things like “Once per 50 years, when the enormous attack predictably happens, will we wish that we had spent more money on counter-terrorism?” and if the answer was yes, spend the money on counter-terrorism beforehand.
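
As a rough sketch of what that extrapolation could look like in Python: suppose attack frequency follows a pure power law in death toll. The size thresholds (10, 100, and 3,000 deaths) and the exact rates are assumptions made up for illustration, not real terrorism data; only the "every few years" and "every decade or so" cadence comes from the paragraph above.

```python
import math

# Hypothetical inputs, chosen only to mirror the cadence described above.
SMALL_SIZE, SMALL_RATE = 10, 1 / 3     # attacks with >= 10 deaths: about one every 3 years
LARGE_SIZE, LARGE_RATE = 100, 1 / 10   # attacks with >= 100 deaths: about one per decade


def tail_exponent(size_a, rate_a, size_b, rate_b):
    """Exponent alpha of a power-law tail, rate(x) = c * x**(-alpha), through two points."""
    return math.log(rate_a / rate_b) / math.log(size_b / size_a)


def yearly_rate(size, alpha, ref_size=SMALL_SIZE, ref_rate=SMALL_RATE):
    """Extrapolated yearly rate of attacks with at least `size` deaths."""
    return ref_rate * (size / ref_size) ** (-alpha)


alpha = tail_exponent(SMALL_SIZE, SMALL_RATE, LARGE_SIZE, LARGE_RATE)
enormous_rate = yearly_rate(3000, alpha)  # 3,000 deaths is an assumed "enormous" threshold
return_period_years = 1 / enormous_rate

print(f"alpha = {alpha:.2f}")
print(f"expected gap between enormous attacks: about {return_period_years:.0f} years")
```

With these made-up inputs the gap comes out at several decades, the same ballpark as the once-per-50-years guess; different assumed thresholds would move it, which is why the calibration has to be done before the attack rather than after.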

Then, when 9-11 happened, we should have shrugged, said “Yup, there’s the once-per-fifty-years enormous attack, right on schedule”, and continued the same amount of counter-terrorism funding as always.

(The diametric opposite of this is anyone who uses the phrase “science fiction”. As in “There’s never been an enormous terrorist attack before, so that’s just science fiction; there’s no evidence that this will ever happen and we should stick to worrying about real problems.” Come on! You know that weapons exist that can kill thousands of people at once, you know that it’s physically possible for terrorists to obtain them, so you should assume that this will happen at some specific frequency. Then you should try to predict that frequency. Once you have a prediction, you should take the appropriate security measures. And if those fail and an enormous attack happens, then instead of freaking out and deciding that science fiction is true and your worldview has been thrown into disarray, you should just do a sanity check to confirm your frequency calculations and move on.)

This is part of why I think we should be much more worried about a nuclear attack by terrorists. My distribution for terrorist attacks includes a term for a successful nuclear detonation once every century or two. I would like to believe that if terrorists nuked a major city tomorrow, I wouldn’t update any of my beliefs. I would say “Yeah, we always knew that could happen. Yeah, we should have prepared X amount, to balance the costs of preparation against the risks of this happening. I still think X amount is a good amount to prepare. Let’s do the same thing I would have recommended back in 2024 before any of this happened.”[3]

III.

It’s bad enough when people fail to consider events that obviously could happen but haven’t. But it’s even worse when people fail to consider events that have happened hundreds of times, treating each new instance as if it demands a massive update.

This is how I feel about mass shootings. Every so often, there is a mass shooting. Sometimes it’s by a Muslim terrorist immigrant. Sometimes it’s by a white person (maybe even a far-right racist white person). Sometimes it’s by some other group entirely.

Every time, people get very excited about how maybe this proves their politics correct. “I bet everyone thought this latest shooting was by a Muslim. But actually, it was by a white person! This just goes to show how everyone except me is racist!” Or “I bet everyone thought this shooting was by a white racist! But actually it was a Muslim! This just goes to show how everyone except me has been poisoned by political correctness!”

It’s hard to define “mass shooting” in an intuitive way, but by any standard there have been over a hundred by now. You can just look at the list and count how many were by Your Side vs. The Other Side. If you really want, you can count how many were falsely reported as being by Your Side before people learned they were actually by The Other Side. Once you’ve done this, adding one more data point to the n = 100 list doesn’t change anything. Even if you care a lot about this topic (especially if you care a lot about this topic) you can ignore whatever was on the news yesterday, in favor of checking the list again in another five years to see if anything’s changed.
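
A minimal Python sketch of why one more entry barely matters: it computes how far Side A's share of a 100-item list can move when a single new Side A shooting is added. The splits in the loop are hypothetical, not real counts, and `share_shift` is a name invented for this illustration.

```python
def share_shift(count_side_a, total=100):
    """Change in Side A's share of the list after one more Side A shooting is added."""
    before = count_side_a / total
    after = (count_side_a + 1) / (total + 1)
    return after - before


# Largest possible shift over every possible split of a 100-item list.
largest_shift = max(share_shift(k) for k in range(101))

for k in (10, 50, 90):  # hypothetical splits, not real counts
    print(f"{k}/100 -> {k + 1}/101: share moves by {share_shift(k):+.4f}")
print(f"largest possible shift: {largest_shift:.4f}")
```

Whatever the starting split, the share moves by less than one percentage point.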

A few months ago, there was a mass shooting by a far-left transgender person who apparently had a grudge against a Christian school. The Right made a big deal about how this proves the Left is violent. I don’t begrudge them this; the Left does the same every time a right-winger does something like this. But I didn’t update at all. It was always obvious that far-left transgender violence was possible (just as far-right anti-transgender violence is possible). My distribution included a term for something like this probably happening once every few years. When it happened, I just thought “Yeah, that more or less matches my distribution” and ignored it.

I also have a term in my distribution for people who 100% agree with me on everything - liberals, rationalists, etc - committing a mass shooting. I think this is less likely than for most other groups. I deeply hope it doesn’t happen. But it’s obviously possible - all you need is one really mentally ill person at the wrong place at the wrong time. If it does happen, I intend to be very sad, but not change any of my beliefs or actions. If I was going to change them in response to this possibility, I would have done it already.

(if it happens twice in a row, yeah, that’s weird, I would update some stuff)

Likewise, I have a term for people who are my worst enemies - e/acc people, those guys who always talk about how charity is Problematic - committing a mass shooting. This term is also pretty low. They don’t seem evil in that particular kind of way - they’re more subtle than that. But if it happens, I don’t intend to crow about it. It doesn’t prove anything beyond that sometimes the dice land on all ones, a crazy evil person joins your community, and he does something horrible. And we already knew that was possible. If 95% of mass shootings in the next decade were caused by weird anti-charity socialists, that would be an interesting update. But one shooting doesn’t differ from my prior enough to be interesting.

IV.

This is also how I feel about other kinds of misdeeds.

Take sexual harassment. Surveys suggest that about 5% of people admit to having sexually harassed someone at some point in their lives; given that it’s the kind of thing people have every reason to lie about, the real number is probably higher. Let’s say 10%.

So if there’s a community of 10,000 people, probably 1,000 of them have sexually harassed someone. So when you hear on the news that someone in that community sexually harassed someone, it shouldn’t change your opinion of that community at all. You started off pretty sure there were about 1,000, and now you know that there is at least one. How is that an update?!
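
The same arithmetic as a short Python sketch. Treating each member as an independent draw is an assumption added here to get a spread around the estimate, and the 10% rate is the rough guess from above, not a measured figure.

```python
import math

COMMUNITY_SIZE = 10_000
HARASSER_RATE = 0.10  # the rough guess from the text, not a measured figure

# Assumption: members are independent draws, so the count is binomial.
expected_harassers = COMMUNITY_SIZE * HARASSER_RATE
std_dev = math.sqrt(COMMUNITY_SIZE * HARASSER_RATE * (1 - HARASSER_RATE))

# Chance the community contains at least one harasser, before any news story.
# Computed in log space so the tiny "none at all" probability does not lose precision.
log_p_none = COMMUNITY_SIZE * math.log1p(-HARASSER_RATE)
p_at_least_one = -math.expm1(log_p_none)

print(f"expected harassers: {expected_harassers:.0f} (give or take {std_dev:.0f})")
print(f"P(at least one) = {p_at_least_one}")
```

Under these assumptions the prior already puts the chance of "at least one" indistinguishably close to certainty, so a news story confirming one case has almost nothing left to move.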

Still, every few weeks there’s a story about someone committing sexual harassment in (let’s say) the model airplane building community, and then everyone spends a few days talking about how airplanes are sexist and they always knew the model builders were up to no good.

I will stick to this position even in the face of your objections. Yes, maybe it’s a particularly bad case of sexual harassment. But if there are 1,000 sexual harassers in a 10,000 person community, surely at least a few out of those 1,000 will be very bad. Yes, maybe the movement didn’t do enough to stop the harassment. But surely of 1,000 sexual harassment incidents, the movement will fumble at least one of them (and often the fact that you hear about it at all means the movement is fumbling it less than other movements that would keep it quiet). You’re not going to convince me I should update much on one (or two, or maybe even three) harassment incidents, especially when it’s so easy to choose which communities’ dirty laundry to signal boost when every community has a thousand harassers in it.

I realize this sounds callous, but when I double-checked, everyone had 180-degrees-wrong impressions of which fields had the most sexual harassment, because they were updating on who they’d heard salacious stories about recently - retail is worst, and STEM is among the best. If this surprises you, stop updating on random salacious anecdotes!

V.

Do I sound defensive about this? I’m not. This next one is defensive.

I’m part of the effective altruist movement. The biggest disaster we ever faced was the Sam Bankman-Fried thing. Some lessons people suggested to us then were:

1. Be really quick to call out deceptive behavior from a hotshot CEO, even if you don’t yet have the smoking gun.
2. It was crazy that FTX didn’t even have a board. Companies need strong boards to keep them under control.
3. Don’t tweet through it! If you’re in a horrible scandal, stay quiet until you get a great lawyer and they say it’s in your best interests to speak.
4. Instead of trying to play 5D utilitarian chess, just try to do the deontologically right thing.

People suggested all of these things, very loudly, until they were seared into our consciousness. I think we updated on them really hard.

Then came the second biggest disaster we faced, the OpenAI board thing, where we learned:

1. Don’t accuse a hotshot CEO of deceptive behavior unless you have a smoking gun; otherwise everyone will think you’re unfairly destroying his reputation.
2. Overly strong boards are dangerous. Boards should be really careful and not rock the boat.
3. If a major news story centers around you, you need to get your side out there immediately, or else everyone will turn against you.
4. Even if you are on a board legally charged with “safeguarding the interests of humanity”, you can’t just speak out and try to safeguard the interests of humanity. You have to play savvy corporate politics or else you’ll lose instantly and everyone will hold you in contempt.

These are the opposite of the lessons from the FTX scandal.

I’m not denying we screwed up both times. There’s some golden mean, some virtue of practical judgment around how many red flags you need before you call out a hotshot CEO, and in what cases you should do so. You get this virtue after looking at lots of different situations and how they turned out.

You definitely don’t get this virtue by updating maximally hard in response to a single case of things going wrong. If you do that, you’ll just fling yourself all the way into the opposite failure mode. And then when you fail again in the opposite direction, you’ll fling yourself back into the original failure mode, and yo-yo back and forth forever.

The problem with the US response to 9-11 wasn’t just that we didn’t predict it. It was that, after it happened, we were so surprised that we flung ourselves to the opposite extreme and saw terrorists behind every tree and around every corner. Then we made the opposite kind of failure (believing Saddam was hatching terrorist plots, and invading Iraq).

The solution is not to update much on single events, even if those events are really big deals.

VI.

This can’t be true, right?

The bread-and-butter of modern news, politics, etc, is having a dramatic event happen, getting shocked and outraged, demanding that something be done, and then devoting a news cycle or Senate hearing to it. We can’t just throw all that out, can we?

Here are a few minor, contingent counterarguments:

1. Obviously when a dramatic event happens, even if you don’t update your predictions for the future, you still need to respond to that particular event. For example, if a major hurricane strikes New Orleans tomorrow, you may not want to update your models of hurricane risk very much, but you still need to send aid to New Orleans.
2. Obviously if you formed a model back in 2000 based on X data points, at some point you need to remember to update it with all the new data points that have come in since then, and a dramatic event might remind you to do this.
3. Obviously a dramatic event can reveal a deeper flaw in your models. For example, suppose there is an enormous terrorist attack, you investigate, and you find that it was organized by the Illuminati, who as of last month switched from their usual MO of manipulating financial markets to a new MO of coordinating terrorist attacks. You should expect new terrorist attacks more often from now on, since your previous models didn’t factor in the new Illuminati policy.
4. Obviously there are some events you thought were so unlikely that you need a big update if they happen even once. If you learn that King Charles is secretly a lizardman alien, you should definitely update your probability that Joe Biden is one too.
5. Obviously there are some things that aren’t really distributions, but just things where you learn how the world works. If your spouse cheats on you the first chance that they get, you should make a big update about their chance of doing so in the future too.

But I think the most fundamental counterargument is that dramatic events are unimportant from an epistemic point of view, but very important from a coordination point of view.

Harvey Weinstein abusing people in Hollywood didn’t cause / shouldn’t have caused much of an epistemic update. All the insiders knew there was lots of abuse in Hollywood (many of them even knew that Harvey Weinstein specifically was involved!). #MeToo didn’t happen because people learned the new fact that there was at least one abuser in Hollywood. It happened because it served as a Schelling point for coordination. Everyone who wanted to get tough on sexual assault suddenly felt that everyone else who wanted to get tough on sexual assault would be energized enough to support them.

You can think of this as a common knowledge problem. Everyone knew that there were sexual abusers in Hollywood. Maybe everyone even knew that everyone knew this. But everyone didn’t know that everyone knew that everyone knew […] that everyone knew, until the Weinstein allegations made it common knowledge.

Or you can think of it as producing a hyperstitional cascade. A campaign against sexual abuse will only work if people believe that it will. That is, people will only want to join an anti-sexual-abuse campaign if they’ll be on the winning side - both because they don’t want to waste their time, and because they don’t want sexual abusers to retaliate against them. And their campaign will only win if many people support it. Most of the time, no individual anti-abuse crusader is sure enough of winning to start a campaign and get momentum behind it. But the Weinstein allegations produced a mood where everyone felt like “it was time” for an anti-sexual-assault campaign, so everyone believed such a campaign would work, so everyone was willing to support it, so it did work.

I think the same mechanism is true of terrorist attacks, mass shootings by the outgroup, and lab leak pandemics. There are people who are against all of these things. But they have trouble coordinating. Also, they would benefit from the support of the “stupid people” demographic, and stupid people only remember that something is possible for a space of a few days immediately after it happens, otherwise it’s “science fiction”. So crusaders build on the sudden uptick of stupid-person-support to bootstrap a movement and a “moment” and shift to a new equilibrium in which they’re coordinated and respectable and their opponents aren’t. And sometimes this blossoms into some giant coordinated push like #MeToo or the Global War On Terror.

All of this is true, and if you’re an activist you should take advantage of it. Certainly the balance of the world depends on who can leverage sudden shifts in the mood of stupid people most effectively. I’m just saying, don’t be a stupid person yourself. Even if you opportunistically use the time just after a lab leak pandemic or a sex scandal to push the biosecurity agenda or feminist agenda you had all along, don’t be the kind of person who doesn’t care about biosecurity or feminism except in the few-week period around a pandemic or sex scandal, but demands an immediate and overwhelming response as soon as some extremely predictable dramatic thing happens.

Dramatic events are a good time to agitate for a coalition, but this is a necessary evil. In a perfect world, people would predict distributions beforehand, update a few percent on a dramatic event, but otherwise continue pursuing the policy they had agreed upon long before.

[1] Assume that before COVID, you were considering two theories:

Lab Leaks Common: There is a 33% chance of a lab-leak-caused pandemic per decade.

Lab Leaks Rare: There is a 10% chance of a lab-leak-caused pandemic per decade.

And suppose before COVID you were 50-50 about which of these was true. If your first decade of observations includes a lab-leak-caused pandemic, you should update your probability over theories to 76-24, which changes your overall probability of a lab-leak pandemic per decade from 21% to 27.5%.

If your first decade of observations doesn’t include a lab-leak-caused pandemic, you should update your probability over theories to 42-58, which changes your overall probability of pandemic per decade from 21% to 20%.

So this hypothetical Bayesian, if they learned that COVID was a lab leak, should have 27.5% probability of another lab leak pandemic next decade. But if they learn COVID wasn’t a lab leak, they should have 20% probability.
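
For anyone who wants to check the arithmetic, here is a minimal Python sketch of the same two-theory update. The function names are invented for illustration; the 33%, 10%, and 50-50 inputs are the ones given above.

```python
def posterior_common(prior_common, p_common, p_rare, leak_observed):
    """Posterior probability of the 'Lab Leaks Common' theory after one decade."""
    like_common = p_common if leak_observed else 1 - p_common
    like_rare = p_rare if leak_observed else 1 - p_rare
    numerator = prior_common * like_common
    return numerator / (numerator + (1 - prior_common) * like_rare)


def leak_chance(weight_common, p_common, p_rare):
    """Overall per-decade lab-leak-pandemic chance, averaged over the two theories."""
    return weight_common * p_common + (1 - weight_common) * p_rare


P_COMMON, P_RARE, PRIOR = 0.33, 0.10, 0.50  # inputs from the two theories above

before = leak_chance(PRIOR, P_COMMON, P_RARE)
post_if_leak = posterior_common(PRIOR, P_COMMON, P_RARE, leak_observed=True)
post_if_no_leak = posterior_common(PRIOR, P_COMMON, P_RARE, leak_observed=False)
after_leak = leak_chance(post_if_leak, P_COMMON, P_RARE)
after_no_leak = leak_chance(post_if_no_leak, P_COMMON, P_RARE)

print(f"before any evidence: {before:.1%}")
print(f"COVID was a lab leak: theories {post_if_leak:.0%}-{1 - post_if_leak:.0%}, chance {after_leak:.1%}")
print(f"COVID was not: theories {post_if_no_leak:.0%}-{1 - post_if_no_leak:.0%}, chance {after_no_leak:.1%}")
```

Run exactly, this gives a starting chance of about 21.5%, roughly 27.7% after a confirmed lab leak, and roughly 19.8% after a confirmed non-leak. That is within rounding of the figures quoted here, and the conclusion is the same: the update is small in both directions.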

[2] You should actually update even less than this, because if you originally thought a lab leak was very unlikely, you should assume COVID wasn’t a lab leak.

It should take a lot of evidence to make you think a very unlikely thing had happened. For example, sometimes there are eyewitness reports of UFOs, but we still don’t believe in UFOs, because they’re very unlikely. If there was an eyewitness report of something else, like a yellow car, we would believe it, because yellow cars are common enough that even a single eyewitness provides enough evidence to convince us.

Suppose you previously thought lab leaks were just as unlikely as UFOs. There’s some evidence COVID was a lab leak, but it’s not overwhelming. So you should mostly discount it until more evidence comes in, and only update a tiny amount.

This strategy is mathematically correct, but obviously dangerous and prone to misfire - see here and here for more.

[3] Except insofar as the same group of terrorists are still around with their nuclear program, waiting to strike again.
