Intelligence seems to correlate with total number of neurons in the brain.

Different animals’ intelligence levels track the number of neurons in their cerebral cortices (cerebellum etc don’t count). Neuron number predicts animal intelligence better than most other variables like brain size, brain size divided by body size, “encephalization quotient”, etc. This is most obvious in certain bird species that have tiny brains full of tiny neurons and are very smart (eg crows, parrots).

Humans with bigger brains have on average higher IQ. AFAIK nobody has done the obvious next step and seen whether people with higher IQ have more neurons. This could be because the neuron-counting process involves dissolving the brain into a “soup”, and maybe this is too mad-science-y for the fun-hating spoilsports who run IRBs. But common sense suggests bigger brains increase IQ because they have more neurons in humans too.

Finally, AIs with more neurons (sometimes described as the related quantity “more parameters”) seem common-sensically smarter and perform better on benchmarks. This is part of what people mean by “scaling”, ie the reason GoogBookZon is spending $500 billion building a data center the size of the moon.

All of this suggests that intelligence heavily depends on number of neurons, and most scientists think something like this is true.

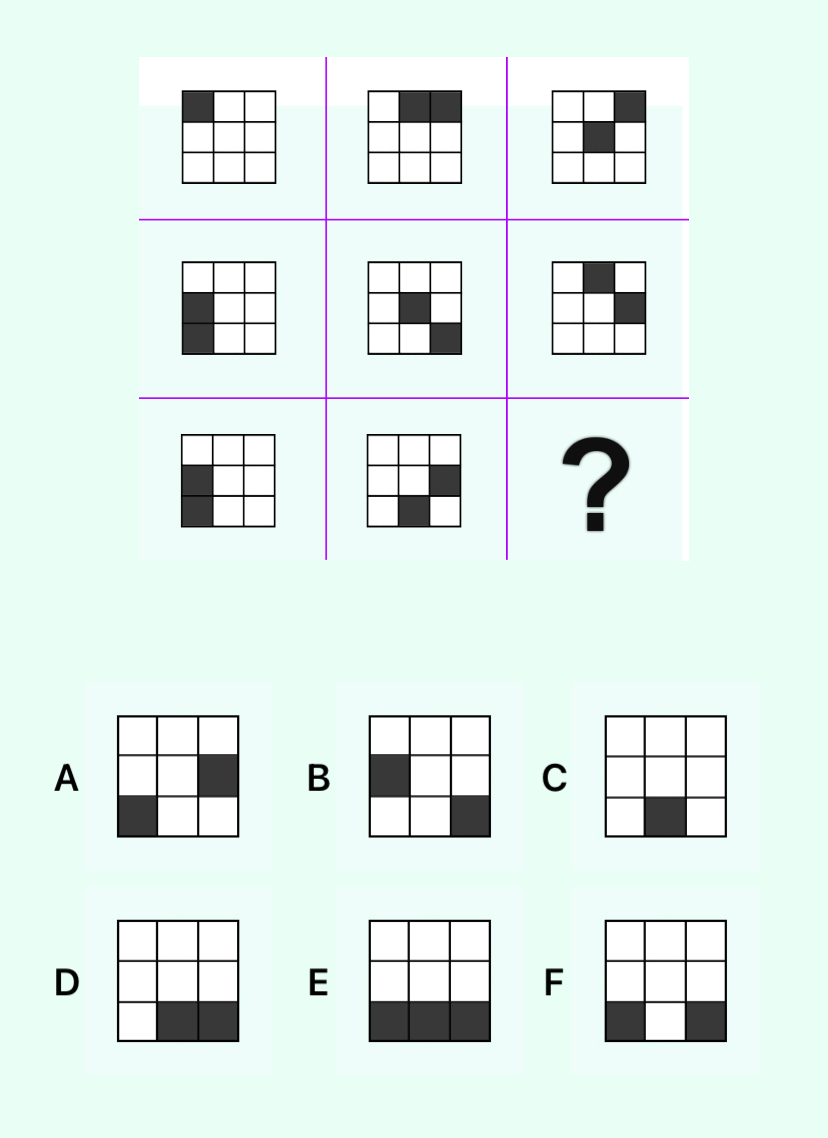

But how can this be? You might expect people with more neurons to have more “storage” to remember more facts. But a typical IQ test question looks like this:

…and you have to solve it in one minute or less. How does having (let’s say) 150 billion neurons instead of 100 billion help with this?

One possibility is that you have some kind of “pattern matching region” taking up some very specific percent of your brain, and the bigger the brain ,the bigger the “pattern matching region”. But this is just passing the buck. Why should a big pattern-matching region be good?

If we focus on the AI example, we might be tempted to come up with a theory that you store some huge number of past patterns in your brain. If you can exactly match a new pattern to one of those, great, problem solved. If not, you still try your best to find the one that it’s most like and try to extend it a little bit. Then the number of neurons determines the number of patterns you can store. But this doesn’t really seem right - endless practice on thousands of Raven’s style patterns helps a little, but a true genius will still beat you. But by the end of your practice, you will have far more patterns stored than the genius does. So what do they have that you don’t?

Maybe it’s not just patterns stored in the sense of “math problems you’ve seen before”, but in the sense where the text of Paradise Lost is a “pattern”, and somehow by truly absorbing the text of Paradise Lost, you learn impossibly deep patterns that you could never describe in so many words, but which help with all other patterns, including those on IQ tests? And the genius is only a genius because they have a natural skill for absorbing and storing very many of these deep patterns, more than you will ever match? I like how mystical this one is, but I just don’t think the connection between Paradise Lost and math puzzles is that deep.

The best real answer I can come up with is polysemanticity and superposition. Everyone has more concepts they want stored than neurons to store them, so they cram multiple concepts into the same neuron through a complicated algorithm that involves some loss of . . . fidelity? Usability? Precision? If you have too few neurons, the neurons have to become massively polysemantic, and it becomes harder to do anything in particular with them.

I think that brings us back to all those other things - speed, accuracy, number of connections. When you try to solve that problem, you’re trying to explore/test a very large solution space before the brain-wave-shape of the problem dissipates into random noise and you have to start all over again. Maybe if your neurons are more monosemantic, then you can get more accuracy in your search process and the problem-shape dissipates more slowly.

I asked a friend who thinks about these topics more than I do; here’s their answer:

pretty much everybody who asks "why do neural nets work at so many things?" comes up with the same answer. it didn't have to be neural nets. other things like genetic algorithms and cellular automata have the same capability. it's just the ability to represent a rich set of different functions, which is what you get when you have a sufficiently large set of modifiable items that can represent phenomena at different scales, and mechanisms of variation and selection so you can make a bunch of funky functions and then choose only the ones that work on the training data. and then "nature is kind" and to some large degree past patterns tend to generalize to future ones. but you need to have a big enough model, with enough wiggly bits, that you can represent arbitrary functions!

coming at this from the mathematical point of view, you can approximate any continuous function (on an interval) with a weighted sum of sines and cosines at different frequencies, or with polynomials, or with piecewise linear functions, or a bunch of things. but the better an approximation you want, the more "elements" and the finer/wigglier elements you need to build your function out of (higher-frequency sines/cosines, higher-order polynomials, shorter line segments)

or, like, going away from math for a moment, you can say more complex things in a language with more words. maybe only up to a point; maybe there's no real difference in expressivity between, say, English and Chinese, because they both have "enough" words, but you'd be really screwed in a "language" with five words.

more neurons in the brain -> there are more possible configurations of firing -> it's a "richer" language. one consequence of this is that you need less polysemanticity, as you said.

https://en.wikipedia.org/wiki/Overcompleteness is a good concept here. to be "complete" is to have "enough" components to represent the function you want. to be "overcomplete" is to have more than enough. which is, in practice, good for faster learning, especially in noisy conditions. if you're "overcomplete", you don't have to hit upon the unique combination of elements that exactly represents your function, there are lots of ways to do it, so you'll come across one by chance more quickly

if you look carefully at the famous Neel Nanda mech interp paper from 2023 https://arxiv.org/pdf/2301.05217 you'll see that the model "learns" a trigonometric identity; how? because it tries a superposition of lots of functions at once, and its training loss tells it to minimize the difference between its "guess" and the correct answer, so the "guess" gradually goes from a flat distribution over "all" functions to a sharper and sharper peak at the right one. it actually picks a weird-ass linear combination of sines and cosines that no human would think to write down, but which is approximately close to the right answer.

in other words, there actually isn't a single right answer that the model "magically" found, like a needle in a haystack. even in a seemingly "rigid" question like a math problem with one right answer. there's a nice smooth basin of nearly-right answers that any sufficiently complex model will stumble into fairly soon, and a consistent "incentive" to go from nearly-right to more-nearly-right.

so, speculatively: brains and neural nets "learn" a generalizable principle/pattern by considering a "superposition of many guesses" and then gradually putting more and more "weight" on the best guesses. if the brain/model is way too small then you can't represent the right "guess" at all. If the brain/model is at the minimum viable size to represent the right "guess", it's still very polysemantically represented, so the "right guess" is indistinguishable from overlapping wrong ones, and it's hard to isolate it from alternatives? you learn one "pattern" for solving one problem but then it also constrains how you solve some other problem, and so doing better at problem #1 makes you worse at problem #2.

Share this post