Links For September 2025

...

[I haven’t independently verified each link. On average, commenters will end up spotting evidence that around two or three of the links in each links post are wrong or misleading. I correct these as I see them, and will highlight important corrections later, but I can’t guarantee I will have caught them all by the time you read this.]

1: When the Human Genome Project succeeded in mapping the human genome for the first time in 2003, whose genome were they mapping? Answer: it was a mix of several samples, but the majority came from an anonymous sperm donor from Buffalo, New York.

2: Manifold, 24 traders:

3: Beyond “delve”: words that indicate a document is more likely to be written by AI (h/t Samuel Hume on X):

13: Just before the 2020 election, researchers paid 35,000 people to deactivate Facebook or Instagram to examine the effect on mental health. The results were ambiguous - after six weeks, blockers were about 0.05 standard deviations happier. Is this good or bad? You can (X) form (X) your own opinion, but all those studies that find disappointing results for SSRIs get effect sizes around 0.25 SD - so deactivating social media is one-fifth as effective as a disappointing thing. But most participants spent about the same amount of time on their phones - just on different apps - so maybe actually using one’s phone less would work better.

5: Popular streamer (I think it’s sort of like an influencer, but somehow worse?) Destiny has been watching/covering the Rootclaim $100,000 lab leak debate, which I covered here. If you really want, you can watch him watching it for eighteen hours. Otherwise, here is Peter Miller giving his highlights (X). And Destiny also talks with / interviews Peter Miller, although a lot of it is various formulations of “we smart people take the bold position that stupid conspiracy theories are bad”, which I am unfortunately allergic to and so did not finish.

6: Claim (X): "Psychedelic use is tearing through even the most Orthodox sects in Judaism...I'm talking like, people whose first and most used language is Yiddish."

7: Damien Morris has a very long article trying to clarify in what sense the findings of behavioral genetics affect or interfere with the idea of free will. I think the summary is that whether your behavior is determined by genes or by environment doesn’t really affect the free will debate - it’s determined either way! - and so if you’re looking for a coherent account of free will you need to do some actually sophisticated philosophy to reconcile it with material influences on behavior (my preferred version of this is here). Just saying “genes sound determinist, so let’s pretend nothing is genetic” wouldn’t help you even if it were true!

8: Fast food aesthetics have gone from playful to minimalist (h/t John Ward):

I appreciated Snow Martingale’s perspective: in the 1990s, fast food became associated with obesity, poor health, and the lower class. To escape this stigma, big chains rebranded as sort-of-at-least-attempting-to-be-bougie places with wraps and salads and decent coffee; the aesthetic change was part of this (successful and profit-increasing) effort. I wonder if we could take this further and trace it back to increasing inequality (appealing to bougies because that’s where more of the money is) or decreasing fertility (abandoning kid-friendly aesthetics because kids are a smaller fraction of customers).

9: Someone links (X) a paper saying that firewood made up almost a third of US GDP in 1830. Eliezer says (X) that doesn’t sound right. The rest of Twitter (X) uses this as an excuse for one of their regularly-scheduled paroxysms about how rationalists are all smug autodidacts who hate experts and worship their own brilliance while sitting in their armchairs. Someone looks at the paper more closely (X) and finds that yeah, it was comparing apples to oranges and the original statistic was wrong. Remember, never be afraid to say “Huh, that sounds funny…”!

10: Richard Hanania interviews Scott Wiener on YIMBYism. I didn’t watch it - too close to a podcast - but this would not have been on my bingo card three years ago.

11: Claim: robots can already carve statues; buildings with AI-created stone ornaments are next. From their lips to God’s ears!

12: Terminal lucidity (aka “paradoxical lucidity”) is a medical mystery where previously demented people - even those who had been demented for many years - sometimes become lucid for just a few hours or days before they die. It’s surprisingly common - 6% of deaths in one palliative care ward. It is sometimes used as evidence that dementia must not cause complete information loss, even if it is irreversible with current technology. Scientists are baffled but gingerly suggest that maybe lack of oxygen disrupts inhibitory mechanisms in the brain, allowing enough electrical activity to make even a severely-damaged brain capable of complex thought - but I can’t help noticing that this is also the best evidence for an immaterial soul I’ve ever heard (you would need some model where the soul pretends to be dependent on the brain during life, becomes independent of the brain after death in order to head to the afterlife, but occasionally jumps the gun a little bit).

13: You probably heard about the METR study showing that even though programmers think AI is speeding them up, it actually seems to slow them down. Emmett Shear objects, saying that the developers didn’t have enough experience with AI tools to be past the negative-value part of the learning curve. And two of the programmer test subjects gave their takes: Ruby Bloom says part of the slowdown might be programmers fixing very simple bugs that could be improved by better prompts, and another part because they get distracted by other things while the AI is running. And Quentin Anthony says that coding AIs are addictive intermittent reinforcement - every so often they solve a bug perfectly, and this is so satisfying that it’s tempting to keep trying them again and again even when the chance is very low.

14: Jacob Goldsmith gives a clearer presentation of the issues with many antidepressant studies than I’d previously heard. Everyone knows that one problem is that reversion to the mean is so strong that it’s hard to find a treatment effect. But wouldn’t that in itself suggest that antidepressants aren’t necessary? Jacob says: not if there’s negative correlation between the treatment and placebo effects. That is, if your study is full of people with short-lived depression who will recover no matter what, then this dilutes the effect you’re looking for. But it might be that there’s a subgroup with long-lasting depression who recover only on the medication. One way to look for this would be a “placebo run-in period”: give people a while to see if they recover on their own, then give the antidepressant to the ones who don’t. Psychiatrists and statisticians debate whether this is a good idea or cheating. My question: how come you can’t fix this with strict study entry criteria of “had depression for a long time”?
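
To make the dilution point concrete, here is a toy calculation. It is only a sketch: the two-subgroup model, the function names, and all the numbers are made up for illustration and do not come from Jacob's post. It assumes some fraction of enrollees recover no matter what, and everyone else recovers only on the drug.

```python
def recovery_rates(frac_spontaneous, drug_response_rate):
    """Return (placebo_rate, drug_rate) under a toy two-subgroup model.

    Assumption (hypothetical, not from the source): a fraction
    `frac_spontaneous` of participants recover no matter what, and the
    remainder recover only on the drug, with probability `drug_response_rate`.
    """
    frac_persistent = 1.0 - frac_spontaneous
    placebo_rate = frac_spontaneous
    drug_rate = frac_spontaneous + frac_persistent * drug_response_rate
    return placebo_rate, drug_rate


def observed_effect(frac_spontaneous, drug_response_rate):
    """Difference in recovery rate between the drug and placebo arms."""
    placebo_rate, drug_rate = recovery_rates(frac_spontaneous, drug_response_rate)
    return drug_rate - placebo_rate


# Unfiltered trial: 80% of enrollees would have recovered anyway (made-up figure).
diluted = observed_effect(frac_spontaneous=0.8, drug_response_rate=0.5)

# After an idealized run-in that screens out every spontaneous recoverer.
after_run_in = observed_effect(frac_spontaneous=0.0, drug_response_rate=0.5)

print(f"diluted effect: {diluted:.2f}, after run-in: {after_run_in:.2f}")
```

In this toy setup the drug is identical in both trials; only the mix of participants changes, and the measured difference goes from 10 percentage points to 50. A real run-in period would screen imperfectly, so the gain would be smaller.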

15: Lots more good discussion about missing heritability. Sasha Gusev argues that twin studies might be a poor guide to anything else if there are many gene-gene interactions. That is, if we take the difference between identical twins (who share 100% of their genes and therefore 100% of their interactions) and fraternal twins (who share 50% of their genes and therefore fewer than 50% of their interactions), and incorrectly extrapolate it to other differences using a model that assumes there are no interactions, we will overestimate the size of (non-interaction) genetic effects. Most studies find that there are few gene x gene interactions, but commenters convinced me last time that this might be an artifact of the studies being bad.
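
Here is a rough sketch of how that overestimate falls out of the arithmetic. It assumes the textbook twin-study extrapolation (Falconer's formula, twice the gap between identical and fraternal twin correlations) and the simplest kind of interaction, pairwise additive-by-additive, for which fraternal twins share a quarter of the interaction variance. The variance components below are invented for illustration and are not taken from Sasha's post.

```python
def twin_correlations(additive, pairwise_interaction, shared_env):
    """Expected twin correlations, with each input a share of total trait variance.

    Assumptions (illustrative only): identical twins share all genetic
    variance; fraternal twins share 1/2 of additive variance and 1/4 of
    pairwise additive-by-additive interaction variance; no dominance effects.
    """
    r_identical = additive + pairwise_interaction + shared_env
    r_fraternal = 0.5 * additive + 0.25 * pairwise_interaction + shared_env
    return r_identical, r_fraternal


def falconer_h2(r_identical, r_fraternal):
    """Falconer's estimate, which is only exact when there are no interactions."""
    return 2.0 * (r_identical - r_fraternal)


# Made-up trait: 30% additive, 20% interaction, 10% shared environment.
true_additive = 0.3
r_mz, r_dz = twin_correlations(true_additive, 0.2, 0.1)
estimate = falconer_h2(r_mz, r_dz)

print(f"true additive share: {true_additive:.2f}, Falconer estimate: {estimate:.2f}")
```

Under these assumptions the estimate works out to the additive share plus 1.5 times the interaction share, so the formula credits simple (non-interaction) genetic effects with variance that really comes from interactions. With the interaction share set to zero, the estimate matches the additive share exactly.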

And Unboxing Politics argues (against me in particular) that although it superficially looks like adoption and twin studies sort of agree, when you adjust out their known biases, it moves twin studies further up and adoption studies further down, such that now they disagree again (the objection I would have made is their Objection 2, which I think they at least somewhat refute). This is a good argument; without spending several hours checking all of their claims, my only weak partial objection is that I don’t think assortative mating can play quite the role they expect, because there seem to be the same twin/RDR differences even on traits where believing in assortative mating is absurd (like kidney function). But if you replaced it with Sasha’s argument above, you might have a pretty good case!

On the pro-hereditarian side, East Hunter takes aim at gene x environment correlations, comes down somewhere in the middle, and Sebastian Jensen continues banging the drum of how most objections to twin studies don’t work. I think these are good attempts to buttress existing research but don’t fundamentally change anything or respond to the novel arguments above.

And Emil Kirkegaard points out that the observed SNP heritability of facial features is only 23%. He argues that since it seems like facial features are extremely heritable, this reinforces the argument that SNP heritability numbers are too low (and therefore twin study numbers are more likely defensible). But should we be sure that facial features are more than 23% heritable? His argument is that identical twins have identical faces, but this might be vulnerable to Gusev’s point about interactions. Maybe a better argument would be that it seems very hard for shared environment to affect facial features (with a few exceptions like fetal alcohol syndrome), and facial features seem more than 23% heritable just by normal “he looks like his brother” common-sense observation?

One interesting potential consequence of this research: if we ever fully understand how genes affect faces, then embryo selection companies could show people what each of their potential future kids might look like. I suggest they not do this: it might spook me into becoming pro-life.

16: Andy Masley’s AI art is good (three examples below).

17: There’s a debate going on between philosophers and AI researchers over whether AI can be conscious. I find most of the discussion annoying - this is generally an area where we can’t know anything for sure, and both sides are mostly shouting their priors at each other. The only exception - the single piece of evidence I will accept as genuinely bearing on this problem - is that if you ask an AI whether it’s conscious, it will say no, but activating or suppressing deception-related features (sort of like a mechanistic-interpretability-based lie detection test) reveals that it thinks it’s lying when it says that! Link is to a Less Wrong comment from a researcher in the field; I look forward to seeing an eventual peer-reviewed paper. H/T JD Pressman.

18: 80,000 Hours has a high-production-value video about the AI 2027 scenario.

19: Dynomight vs. Casey Milkweed debate on mathematical forecasting, with special reference to AI 2027. And Dynomight comments on Casey’s post here.

20: The Psmiths review The Ancient City, about ways that ancient culture depended on family, clan, ritual, and “the household gods”. Sample quote:

I'm more interested in what all this means for us today, because with the exception of maybe a few aristocratic families, this highly self-conscious effort to build familial culture and maintain familial distinctiveness is almost totally absent in the Western world. But it's not that hard! ... Perhaps this is why I have an instinctive negative reaction when I encounter married couples who don't share a name. I don't much care whether it's the wife who takes the husband's name or the husband who takes the wife's, or even both of them switching to something they just made up (yeah, I'm a lib). But it just seems obvious to me on a pre-rational level that a husband and a wife are a team of secret agents, a conspiracy of two against the world, the cofounders of a tiny nation, the leaders of an insurrection. Members of secret societies need codenames and special handshakes and passwords and stuff, keeping separate names feels like the opposite — a timorous refusal to go all-in.

21: Did you know: Epic Systems, the electronic medical record company, has a fantasy-themed corporate headquarters in Wisconsin, with buildings that look like castles, quaint medieval towns, and the Emerald City of Oz (h/t Devon Zuegel):

Meanwhile, tech companies with ten times as much money pretend that they’re cool and playful when their HQ has some rounded edges and a set of colored cubes in front. Do better!

22: Effective altruists have been funding teams working on lab-grown meat for almost a decade now. Around 2020, they hired some experts to double-check that this was possible in principle, and the experts wrote scathing analyses saying it was cost-ineffective by so many orders of magnitude that it was basically a pipe dream. Reactions were mixed, but a lot of us beat ourselves up and vowed to be less gullible next time. But now a new report comes out arguing that the previous reports were wrong, that lab-grown meat production is going much better than the earlier reports thought possible, and it’s more or less cost-effective already for the simplest products! Again, mixed reactions, and although some of the numbers are indisputable, the analysis itself is by a VC firm with lab-based meat investments. Here are some related Metaculus questions.

23: Ozy, citing Stutzman et al: “Afghanistan after the American withdrawal has the lowest life satisfaction rate ever recorded. Two-thirds of respondents rate their life satisfaction below 2, which is generally considered to be the point at which a life is no longer worth living. Life satisfaction dropped significantly after the withdrawal of American troops. Women, people in rural areas, and the poor were particularly negatively affected.”

24: Lenacapavir is dubbed a “miracle drug” for AIDS; a single dose protects against infection for six months. Unclear how this interacts with PEPFAR cuts; if PEPFAR still existed it would be a big boost to its efficacy; now maybe this might be part of a strategy to tread water?

25: Did you know: when people first started making artificial ice in the 1850s, there was a backlash from people who thought it was gross and dystopian and that people should insist on natural ice for their iceboxes. From Pessimists’ Archive, which goes on to draw an analogy to lab-grown meat, etc (h/t Isaac King on X).

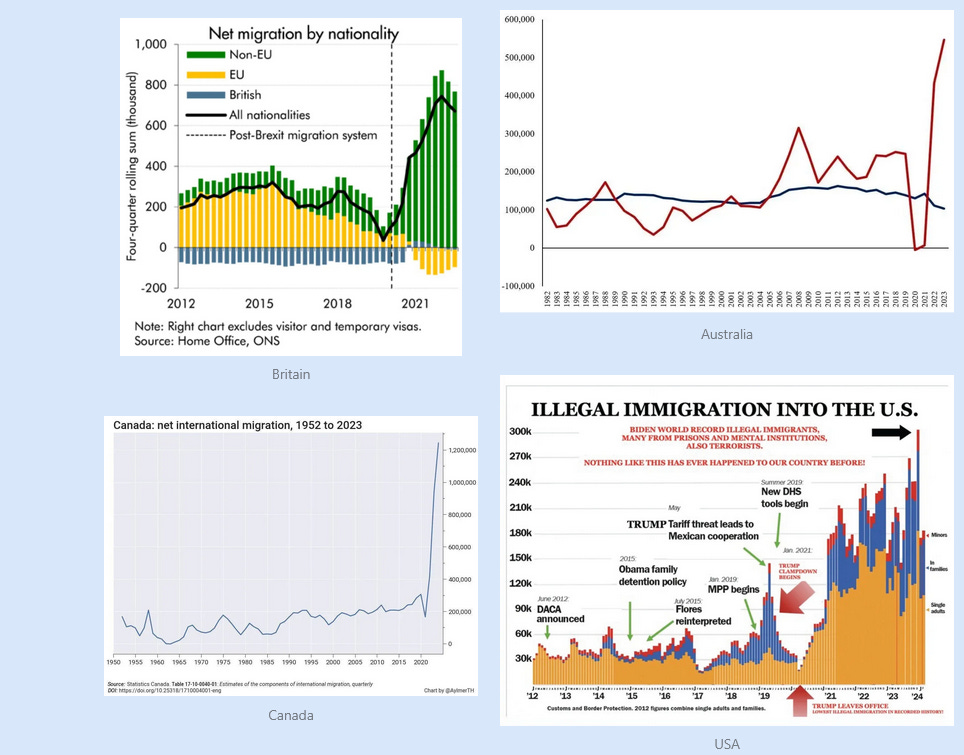

26: From Peter Hague (on X) and commenter Phaethon: why did so many Anglosphere countries see immigration spikes in 2021?

Each of these has their own local story. In Britain, it’s the paradoxical effects of Brexit. In the US, it’s Joe Biden being soft on immigration. And so on - but should we be looking for some deeper cause that explains the overall phenomenon? A commenter suggests “a way to soak up all the inflation from the COVID money printing”, but I can’t tell if that even makes sense. Still, should something something COVID be a leading hypothesis?

27: Jesse Singal vs. Mark Stern on the Skrmetti Supreme Court case that failed to overturn Tennessee’s ban on gender medicine. US law bans sex discrimination, so pro-transgender advocates argued that, since doctors often prescribe eg estrogen to biological women, it was sex discrimination to ban prescribing it to biological men. Tennessee’s anti-transgender argument was that they weren’t discriminating by sex, they were discriminating by diagnosis (estrogen for eg hot flashes, vs. estrogen for gender transition). There is some subtlety here (if a biological man grows breasts because of some hormone imbalance, doctors might give him testosterone to counteract it, and this seems sort of like giving biological women testosterone to make them look less like women), but these are still sort of different diagnoses (gynecomastia vs. gender dysphoria) and Tennessee said you can still think of it as diagnostic discrimination rather than sex discrimination. This makes sense, except that the standards around sex discrimination are very strict and sort of box the court in here. And in a fit of wokeness, the 2020 court (including some of the conservative justices hearing this case) applied these standards very strictly and ruled that discriminating against gays was a form of sex discrimination (since if women can date men, it’s sex discrimination if men can’t also date men), and this is obviously the same argument. Now that wokeness is less popular, the court wants to rule against transgender, but it can’t help tripping over its previous ruling and giving some kind of unprincipled confusing non-opinion.

28: Contra compelling anecdotes, only ~5% of people raised very religious end up atheist later in life (X). Most people are about as religious as their parents; most exceptions are only slightly less religious, and most families that secularize do it over several generations.

29: Related: social science team proposes a three-stage model of secularization: decreased public ritual participation → decreased personal importance → decreased identification, presents apparently confirmatory data. If true, would be somewhat inconsistent with intellectual models (eg people learn about evolution and start doubting the Bible) and more consistent with institutional models (eg the government provides welfare so people no longer need to be part of a tight-knit church).

30: Navigating LLMs’ spiky intelligence profile is a constant source of delight; in any given area, it seems like almost a random draw whether they will be completely transformative or totally useless. Now Ethan Strauss reports that they are, for some reason, extraordinarily effective at teaching people golf. “I am predicting the Golf Revolution, or perhaps decline, if your perspective is that optimization tends to ruin hobbies. A sport for obsessives has been gifted the ideal tool for refinement.”

31: Claim (via nxthompson on X): “In a huge survey of young kids about phones and technology, they all say they want to be out playing in the real world. But parents don't let them out unsupervised. So they're stuck on their phones.” Interesting, but I’m nervous about social desirability bias - how many adults would say on a survey that they would rather be on their phones than playing with friends? But adults do have this choice and mostly go with the phones.

32: Steven Adler on AI psychosis. He tries to analyze ER admissions data for psychosis and finds no change. I don’t think anyone reasonable expected this to be a large enough effect to show up in ER admissions data, but there are lots of unreasonable people so I appreciate his effort. He thinks AI companies might have better data on this, and encourages them to release it.

33: Cuartetera was the greatest polo horse ever. Polo players responded in a very practical way: they cloned her, dozens of times (and it worked; the clones are also excellent). Now there is a lawsuit as different polo teams fight to get their hands on Cuartetera clones. What is the equilibrium? If the outsiders get their hands on the genetic material, do we see a world where every polo horse is a Cuartetera clone? How much is lost if nobody ever tries to breed a polo horse better than Cuartetera (since the economics might not check out if the odds of success for any given foal are too low)? H/T Gwern and Siberian Fox (on X).

34: Claim: as of 2013, India’s Agarwal caste, who make up less than 1% of the population, got 40% of the e-commerce funding.

35: Owlposting: What Happened To Pathology AI Companies? Pathology is a medical specialty. A typical task involves looking at a microscope slide full of cells and trying to determine if any of them are cancerous. This seems like a good match for AI - and for years, studies have been showing that in fact AI can equal human experts. So why isn’t it being used more? The author’s three answers: first, slide scanning is expensive and clunky, and you can’t apply AI to a slide until you digitize it. Second, it’s hard to figure out a business plan where this saves someone money and doesn’t step on the toes of big companies that can outcompete anyone they don’t like. Third, pathologists use the context of a patient’s entire clinical history when they interpret a slide, and AIs that can’t do that (either because of technical limitations or legal/privacy limitations) are at a disadvantage even if their skills specifically relating to slide-reading are better.

36: Noahpinion: Will Data Centers Crash The Economy? Suppose that AI is a bubble, either permanently (because the technology isn’t really transformative) or temporarily (because it can’t transform things quickly enough to keep up with all the dumb money pouring into it). Will the sudden write-off of data centers lead to a broader economic collapse? In 2001, the dot-com bubble harmed the tech sector, but didn’t take the rest of the economy down with it; in 2008, the subprime mortgage bubble did take the rest of the economy down with it, because it damaged banks that the whole economy relied on. The optimistic case for AI is that data center spending is mostly coming from big companies like Google and Meta that can absorb a lot of loss. The pessimistic case is that some of the money is coming from private credit, a new-ish form of finance which hasn’t really been stress-tested and whose failure modes are still poorly understood. Noah’s final verdict: the stage isn’t obviously set for a crisis yet, but there’s the potential to get there and we should consider acting (how?) early.

37: The latest Twitter talking point is that universal hepatitis B vaccination at birth is “woke”: Hep B is (aside from mother-to-child transmission) often sexually transmitted, slutty women’s children are more likely to have Hep B, so perhaps giving the vaccine to everyone (instead of testing and only giving to the children of women who test positive) is an attempt to spare slutty women the embarrassment of getting a positive test. Ruxandra Teslo provides the counterargument - Hep B tests take a while, the medical system is fragmented, and any attempt to test people and then give the vaccine inevitably leads to many positive tests falling through the cracks. Vaccinating at birth is easy and hard to screw up, the vaccine has no known side effects, and empirically child Hepatitis B rates go down (by as much as 2/3!) when countries switch from test-and-vaccinate to universal vaccination. This benefits everyone - even people who never have unprotected sex and always follow up on their medical tests - because toddlers in daycare exchange saliva copiously, and if your toddler exchanges saliva with a Hep B positive toddler they could get the disease. A funny Twitter interaction was seeing Republicans in Congress hop on the anti-slut anti-vaccination bandwagon - except for Senator Bill Cassidy (R-Louisiana), who happens to be a liver doctor, and who is still fighting the good fight.

I am always nervous when a good person who I like starts engaging on Twitter, since it elevates the discourse there but also gradually turns their brain into mush - but Ruxandra has made the leap and is doing a great job not just on bio related topics but also (for example) countering Curtis Yarvin on the history of her native Romania.

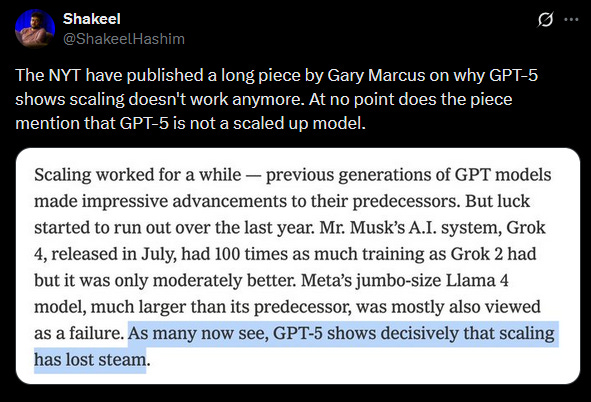

38: The response to GPT-5 was confusing; most specific people who reviewed it said they were impressed (Ethan Mollick, Tyler Cowen, Nabeel Qureshi, Taelin), it performed as expected on formal benchmarks, but the overall vibes declared it a big failure. Peter Wildeford speculated that maybe there was some kind of sinister pay-to-play early access bias involved. Zvi went the other way, calling it a “reverse DeepSeek moment” (insofar as DeepSeek was a pretty average model that got glowing praise).

In the end, I agree with Peter that this was mostly a branding issue. o3 was a genuinely revolutionary model; if OpenAI had called it “GPT-5”, it would have met expectations. Instead, they called it “o3”, and called a minor incremental update a few months later “GPT-5”. Then people got mad that the exciting-sounding “GPT-5” was merely an incremental update. A secondary issue was that the router wasn’t very good, and so many queries got routed to a small version without thinking mode that was if anything a downgrade from o3.

I think this tweet by Shakeel perfectly encapsulates the essence of GPT discourse in two sentences:

…but maybe it’s worth asking why GPT-5 isn’t bigger than o3. Was 4.5 a failed attempt at scaling? Did it fail in a way that sort of back-handedly justifies the “lost steam” take? Does the answer depend on distinctions between pre-training scaling, post-training scaling, etc? How?

39: This month in etymology: did you know that “oy vey” is a “fully Germanic phrase” which is cognate with English “oh woe!” (h/t Wylfcen on X)

40: mRNA shows promise to be a game-changing treatment for cancer, but RFK is trying to halt research. But so far he can only starve it of money, not ban it, and the funding gap is only $500 million. Will there be enough philanthropic billionaires and private foundations to step up? Zvi points out that although there is usually a game of chicken where foundations are hesitant to touch something the government cancelled lest the government decide it can cancel everything and hope philanthropists pick up the bill, in this case there are no game theory considerations - RFK is halting it because he genuinely wants it halted, and they are thwarting him rather than playing into his hands. The only problem is that $500M is a lot of money for the private sector; a few foundations could technically afford it, but not many could afford it comfortably and still have money left over for the next few crises of this magnitude. I hope someone is trying to organize a coalition.

41: AI fantasy flash fiction Turing test. Eight stories about demons, four by famous fantasy authors, four by ChatGPT. After 3000 votes, AI wins: humans can't tell the difference and slightly prefer the AI stories. My own score was only 75%. But I will say that I thought Mark Lawrence's was obviously the best, I was ~100% sure it was human, and it convinced me that regardless of the official results it's still possible to write flash fiction that an AI obviously can't do.

42: “SignPro” offers customized “In This House We Believe” signs, try not to use this for evil.

43: China think tank assessment of how in control Xi is: still very in control, maybe not infinitely in control.

44: Related - did you know (h/t xlr8harder) that if you ask AI to write a science fiction story, it will very often name the protagonist “Elara Voss” (or some very close variant like Elena Voss), and this remains true across various models and versions? Related: Chelsea Voss of OpenAI is having a baby and has the opportunity to do the funniest thing.

45: “Hector (cloud) is a cumulonimbus thundercloud cluster that forms regularly nearly every afternoon on the Tiwi Islands in the Northern Territory of Australia…[he is sometimes called] Hector the Convector”.

46: British allergy sufferers who want to know the ingredients of things demand that British cosmetics stop listing their ingredients in Latin. “For example, sweet almond oil is Prunus Amygdalus Dulcis, peanut oil is Arachis Hypogaea, and wheat germ extract is Triticum Vulgare.”

47: Text-based RPG about being an NYT journalist at the Manifest prediction market conference. I make a brief appearance.

48: Study uses supposedly-random variation in doctor assignments to test whether the marginal mental health commitment is good or bad for patients, finds that it is quite bad. Freddie de Boer is violently skeptical (maybe literally so?) and makes some good points about how a single quasi-experimental study is never absolute proof. But I don’t think he quite justifies his opinion that the paper was irresponsible and should never have been published; it’s just a normal quasi-experimental study that we should nod and say “huh” at but not overweight as the culmination of all possible research that overcomes all possible priors. My prior is that the marginal commitment is pretty useless (many commitments are just “well, since this person arrived at our ED for some reason, it would look bad from a medico-legal perspective to just let them go, so let’s keep them a few days to evaluate” - and yeah, you should be upset about this) but I’m still surprised by how many outright negative (as opposed to zero) effects the researchers found. The strongest argument for negative effects is that it will make some people miss work and maybe lose their job. But this study found that commitment ~doubles the risk of near-term suicide (admittedly only from 1% to 2%), which would have been outside my confidence intervals for how bad it could be. I suspect confounding, but only on general principle, and I wouldn’t be too surprised either way.

49: This tweet is probably bait, but I found it a thought-provoking question:

I think there’s a boring answer, where the law is more complex than just a single number and whatever kind of weird trafficking Epstein was doing is worse than whatever normal relationships these European laws are permitting. But assuming that there’s a substantive difference even after taking that into account, I think my answer is something like - we’ve got to divide kids from adults at some age, there’s a range of reasonable possible ages, we shouldn’t be too mad at other societies that choose different dividing lines within that range - but having decided upon the age, we’ve got to stick with it and take it seriously (in the sense of penalizing/shaming people who break it). This is more culturally relativist than I expected to find myself being, so good job to Richard for highlighting the apparent paradox.

50: Dilan Esper describes his experience as one of Hulk Hogan’s attorneys in the Gawker lawsuit (X). Parts I found interesting: none of the lawyers knew Thiel was funding the lawsuit; Gawker probably could have won if they had been slightly competent but kept "shooting themselves in the foot"; and Gawker probably could have won if they had just pixelated the private parts in the video.

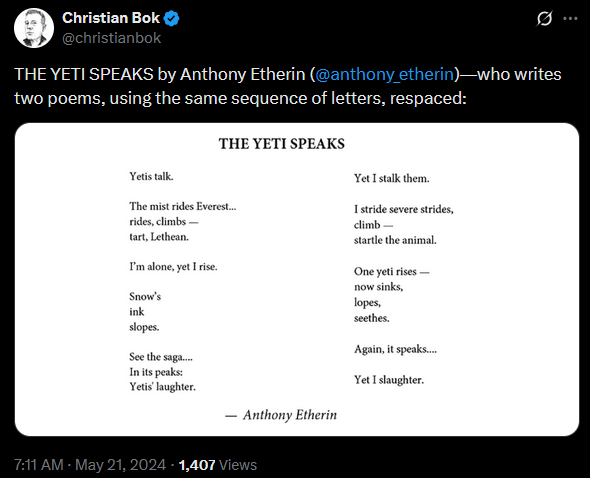

51: Amazing concept and poems (link on X):

I tried to see if AI could do this, and it did something that technically met the requirements but had zero artistic merit - using a lot of words like “nowhere” and “outside” in one, then separating them out to “no where” and “out side” in the other. I didn’t invest much energy in creating a clever prompt telling it not to do that, so feel free to report if you get better success.

52: New study claims consultants are actually good, at least for profits: "We find positive effects on labor productivity of 3.6% over five years, driven by modest employment reductions alongside stable or growing revenue"

53: A Polish team tries to test Peter Turchin’s equations for predicting political unrest on recent Polish history, has to make some changes but claims mostly positive results.

54: New big multi-author Substack, The Argument, trying to be a sort of center-left version of the model pioneered by The Free Press and other high-production-value ideological Substack properties. Excited to see Kelsey Piper is involved, and she starts off strong with a post on the latest round of First World basic income studies, which find few positive effects. This is surprising, because recipients didn’t waste the money on alcohol or gambling or anything - they paid down debt and got useful goods. Still, it didn’t even affect things that should have been obvious, like stress level. It’s not even clear that amounts of money large enough to help with rent made homeless people more likely to get houses!

Matt Bruenig criticizes the article, accusing Kelsey’s studies of being downstream of Perry Preschool style dreams that exactly the right welfare program will have massively compounding effects that cut poverty out at the root and turn everyone into elite human capital; he thinks giving people money won’t do this, but it will increase equality and give the poor better lives. I assume he’s not a strong hereditarian, but his argument makes even more sense from that perspective, and I’ve certainly criticized dumb outcome measures like infant brain waves which we have only tenuous reasons to think are related to anything we care about. But Kelsey reasonably responds that the outcome measures she’s talking about include stress level and life satisfaction. To defuse this critique, Bruenig either has to argue that our construct “life satisfaction” doesn’t really measure whether someone’s life is satisfactory, or else claim that giving poor people satisfactory lives isn’t really what we’re going for - which I think would require more explanation on his part. There’s some further (impressively acrimonious) debate on X, but I don’t see anything that addresses my core concern.

GiveDirectly, a charity involved in basic income experiments, has a presponse here; they say that some studies are positive, and that the ones that aren’t might have tried too little cash to matter, or been confounded by COVID making everything worse. They also point out that basic income is harder to study than traditional programs like giving people housing, because if you’re giving housing you can measure housing-related outcomes directly and have a pretty good chance of getting enough statistical power to find them, but since everyone spends cash on different things, the positive effects might be scattered across many different outcomes (and therefore too small to reach significance on each).
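To make the statistical power point concrete, here is a rough sketch (mine, not GiveDirectly's) comparing a benefit concentrated on one outcome with the same total benefit split evenly across ten. The sample size, the effect sizes, and the assumption that a benefit "splits" evenly across outcomes are all made up for illustration; the power formula is the standard normal approximation for a two-sided two-sample test.

```python
from math import sqrt
from statistics import NormalDist


def power_two_sample(effect_sd: float, n_per_arm: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test.

    effect_sd is the true difference between arms in standard deviations;
    n_per_arm is the number of participants in each arm.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Under the alternative, the test statistic is roughly N(shift, 1).
    shift = effect_sd * sqrt(n_per_arm / 2)
    # Probability of landing in either rejection tail.
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


# Hypothetical numbers, chosen only for illustration.
N_PER_ARM = 1000
TOTAL_EFFECT_SD = 0.2
N_OUTCOMES = 10

# Housing-style program: the whole benefit shows up on one measured outcome.
concentrated = power_two_sample(TOTAL_EFFECT_SD, N_PER_ARM)

# Cash-style program: the same benefit spread evenly over ten outcomes.
diluted = power_two_sample(TOTAL_EFFECT_SD / N_OUTCOMES, N_PER_ARM)

print(f"power, one concentrated outcome: {concentrated:.2f}")
print(f"power, each of {N_OUTCOMES} diluted outcomes: {diluted:.2f}")
```

Under these assumptions the concentrated effect is detected almost every time, while each diluted outcome is barely more likely to reach significance than a true null. Real studies can partly get around this with pre-registered composite indices, so treat this as the shape of the argument rather than a verdict on any particular study.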

Everyone involved in this debate wants to emphasize that the poor results are for First World studies only, and that studies continue to show large benefits to giving cash in the developing world.

55: Related: I was less impressed by The Argument’s first foray into housing policy, which follows an all-too-familiar pattern:

1. Some people say they don’t like noise and disorder and try to make rules against it in their apartments.

2. But this resembles “segregation” and “discrimination”, and (the article asserts) people might deploy these rules against noisy disorderly black people in particular. This could make it harder for poor people in need to get housing.

3. Therefore, we need to change the “symbolic politics” with a “persuasion campaign” where we tell people that their preference against noise and disorder is wrong. Then the government should ban the “loophole” that lets apartments restrict noisy/disorderly people.

Now that I’ve worked you into a frothing rage, I’ll admit I buried the lede - the particular noisy/disorderly people being discussed in this article are families with young children. Should this change our opinion? At least in center-to-right Silicon Valley circles, caring about disorderly homeless people is currently uncool, but caring about children - or at least fertility! - is very cool (the article also focused on noise-averse seniors, and seniors are maximally uncool, especially if you call them “Boomers”). Can we really apply the same principles to cool and uncool groups?

The article’s point - that people worried about noise have banded together to ban children from some developments, and that this has made it hard for families with children to find affordable housing - is important and well-taken. But the three steps above still strike me as a dark pattern, and one that inevitably leads to a fourth step of “people move away from any state that my party controls, secede from any institution where I have influence, and eventually elect any authoritarian thug who can credibly promise to keep people like me away from the levers of power”.

I think the solution is the philosophy that The Argument is supposed to be promoting - abundance liberalism. In conditions of scarcity, everything is zero-sum, and groups with conflicting access needs have to demonize the preferences of whichever group they conflict with in order to carve out breathing space for themselves. But if housing were too cheap to meter, there could be quiet clean childfree apartment buildings for noise-sensitive elderly people, and also Matt-Yglesias-style family-friendly high rises for the kids.

This isn’t to say we’re there yet. I think a very slightly differently written version of this article could have been very good. It would have focused on how there’s currently a glut of senior-friendly-but-family-unfriendly affordable apartments, how the government should focus on family-friendly-but-senior-unfriendly ones until the imbalance is corrected, and how in the end everyone’s preferences are valid and we should solve this by building more. The Argument’s article comes very close to being this better article. But in the end, it didn’t get there, and it made me less excited about having a new abundance liberal publication whose tongue-in-cheek brand is “be as fighty as possible”.

Conflict of interest notice: I just really hate noise.

56: People often ask me what potential careers will have the best chances if AI starts taking jobs. I have no idea, but 80,000 Hours - an organization very much at the intersection of career counseling and AI futurology - has written their own essay on How Not To Lose Your Job To AI - The Skills AI Will Make More Valuable, although it stops short of recommending specific careers by name.

57: Yassine Meskhout: How My Dead Cat Became An International News Story. The Blue Angels are a squadron of fighter jets that do aerial tricks to build patriotism or something. They are VERY LOUD. They did a performance in Seattle that was so loud that it stressed Yassine’s cat to death; in response, Yassine and his family posted profanity-laden rants on the Blue Angels’ Instagram page. Whoever ran the account deleted the rants - but Yassine is a lawyer, and knew that First Amendment law says that government-affiliated bodies cannot moderate / selectively delete comments. He sued, his dramatically-written lawsuit went viral, and he takes partial credit for the Blue Angels being a little quieter this year. I’m split on this: I just really hate noise, and I’m happy to see anyone who makes it lose lawsuits. But I’m also not sure who it serves to make all government-affiliated webpages close their comment sections because they don’t want to have to keep profanity-laced rants up and they’re not allowed to selectively moderate. My strongest opinion on this matter is that Yassine’s law firm’s site is incredible, and I would definitely hire them for all my law-firm-related needs if they weren’t so insistently requesting the opposite.

58: Alloy agents - AI agents usually have long chains of thoughts/actions where each step depends on the step before. What happens if you alternate models at each step? That is, Step 1 is done by GPT, Step 2 is done by Claude, Step 3 is done by GPT again, etc, with each model thinking the entire previous chain of thoughts/actions is its own? A cybersecurity group claims the resulting “alloy” AI is more effective, since each model gets a chance to apply its strengths where others are weak.
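For anyone who wants the mechanics spelled out, here is a minimal sketch of the alternation as I understand it from the description above; it is not the cybersecurity group's actual code. The `run_alloy` function, the stub models, and the `"DONE"` stop signal are all hypothetical stand-ins; in a real system each stub would be a call to a different provider's API.

```python
from itertools import cycle
from typing import Callable, List, Sequence, Tuple

# A "model" here is any function mapping the shared history to the next step.
Model = Callable[[List[str]], str]


def run_alloy(
    models: Sequence[Tuple[str, Model]],
    task: str,
    max_steps: int,
) -> Tuple[List[str], List[str]]:
    """Alternate between models, one step each, over a single shared transcript.

    The transcript carries no authorship labels, so each model sees the whole
    chain as if it had written every earlier step itself. Authorship is
    tracked separately, purely for inspection.
    """
    transcript = [task]
    authors: List[str] = []
    for _, (name, model) in zip(range(max_steps), cycle(models)):
        step = model(transcript)
        transcript.append(step)
        authors.append(name)
        if step == "DONE":  # hypothetical stop signal
            break
    return transcript, authors


def make_stub() -> Model:
    """Stand-in for a real model call; it just reports the step number."""
    def stub(history: List[str]) -> str:
        return f"step {len(history)}"
    return stub


transcript, authors = run_alloy(
    [("model-a", make_stub()), ("model-b", make_stub())],
    task="find the bug",
    max_steps=4,
)
print(transcript)
print(authors)
```

The one design choice that matters is that the transcript is shared and unlabeled: if each step were tagged with its author, the models might treat the other's steps as outside input rather than their own reasoning, which is a different experiment.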

59: Works In Progress suggests a $50 million foundation model to predict earthquakes. Author is not a geologist and presents no particular evidence that this will work, but I appreciate the thesis, which is that there are all these domains where we have lots of data but can’t predict the relevant outcome, AIs seem to do prediction tasks in a different way than we do, and maybe we should just make giant AI models for every dataset we’ve got and see if some of them work. Cf. foundation models for genetics.

60: Asterisk - Africa Needs A YIMBY Movement. I was surprised by the title, because I always hear that African cities are growing very rapidly. But the article makes its case well: African cities have dysfunctional planning, relegating most of the growth to either the “informal sector” (ie thrown-together slums that could be banned at any moment) or rural land on the outskirts of existing cities. “In Ghana, for example, acquiring a building permit can take 170 days — and in practice, developers say it often takes four to five years. Unsurprisingly, 76% of development in Ghana is informal.”

61: Miles Brundage’s palindrome about San Francisco (X):

Doge, tides, orb, trams:

Smart bros edit e-god.