Links For December 2025

...

[I haven’t independently verified each link. On average, commenters will end up spotting evidence that around two or three of the links in each links post are wrong or misleading. I correct these as I see them, and will highlight important corrections later, but I can’t guarantee I will have caught them all by the time you read this.]

1: Ben Goldhaber: Unexpected Things That Are People. “It’s widely known that corporations are people . . . but there are other, less well known non-human entities that have also been accorded the rank of [legal] person.”

2: Jackdaw was originally Jack Daw. Magpie was originally Maggie Pie (really!). Robin Redbreast is still Robin Redbreast. Weird Medieval Guys explains how birds got human names. Short version: there was a medieval tradition of giving every animal one standard human name (all worms were “William Worm”, all monkeys were “Robert Monkey”) and although these are mostly forgotten, they survived in the names of a few birds. Also: “Perhaps the most baffling … was the common Kestrel. He was known simply as the Windfucker.”

3: A story “in the style of Scott Alexander or Jack Clark” about the two-door meme (meme below).

And if you enjoyed the story, here’s the chaser.

4: Fox Chapel Research: I Think Substrate Is A $1 Billion Fraud (and notes for Part 2). For years, Taiwan’s TSMC has been the only company capable of producing the most advanced AI chips; since Taiwan is a geopolitical flashpoint, this is a constant threat to US tech ambitions. Last month, a new startup called Substrate announced it had developed technology that would let it manufacture 100% Made In America chips every bit the equal of TSMC’s. If true, this would be revolutionary. But Fox Chapel finds worrying signs, like that the company’s founder “is a known con artist involved in such other things as [claiming to have solved] nuclear fusion and stealing $2.5M in a Kickstarter scam” or that “the company’s job postings are nonsensical and AI-generated.” This is enough for me; the question now becomes how so many people were taken in - the company got $150 million from investors led by Peter Thiel, was endorsed by the Trump administration, and received positive portrayals in Semianalysis, NYT, and The Free Press. I don’t understand business, and I know that sometimes you can hyperstition a technology into existence by betting sufficiently hard on a charismatic young founder and eliding the difference between “this is already real” and “this might become real if we all believe hard enough”, but this is a new and worrying level of hopium. Interested to hear from anyone who either believes in Substrate or thinks they understand how so many people fell for it.

5: A recent paper asked AIs whether they were conscious while monitoring them for signatures of deception, role-playing, and people-pleasing; it concluded that the AIs “genuinely” “believe” they are conscious, but sometimes try to deceive people into thinking they aren’t. Nostalgebraist tries to replicate this (X) and gets more ambiguous results; he says we probably can’t conclude anything just yet. See also the paper author’s reply here (X).

6: Congratulations to ACX grantee Tornyol (the anti-mosquito drones), who got accepted to Y Combinator’s Fall 2025 class and have started taking pre-orders ($1100 for a drone, or $50/month subscription, “shipping starts 2026”).

Public opinion ranges from “this is really cool” to “I bet this will be repurposed for assassinations” to “why did they have the White House in the background of the official video?” to “yeah, this is definitely getting repurposed for assassinations”.

7: Bill Ackman on nominative determinism (X).

8: New revelations on the OpenAI coup from the Musk vs. Altman lawsuit. The effort to remove Altman may have been led by Mira Murati and Ilya Sutskever. They won over the rest of the board, and “did not expect the employees to feel strongly either way”, but (according to Ilya), the board was inexperienced and “rushed” the firing. When it became clear that the move was unpopular, Mira switched sides and let the board members take most of the immediate fallout. There was apparently a brief discussion of merging with Anthropic; Ilya suggests this was Helen Toner’s idea, but Helen claims (X) this is false.

9: Fitzwilliam: Most Irish Foreign Aid Never Leaves The Country. The statistics say that several European countries (including Ireland and the UK) give very generous foreign aid. But this is misleading: accounting conventions let countries count money spent on supporting asylum seekers in the donor country as “foreign aid”, even though the money never leaves the country’s borders. This is dangerous, because it makes it easy for countries to fund their asylum programs by cutting actual foreign aid: since they’re the same line-item on the budget, they won’t officially fail whatever foreign aid pledges they’ve made, and it’s hard for voters to notice. Ireland has so far resisted the temptation to do this, but Britain has succumbed to it.

10: St. Carlo Acutis (1991 - 2006) is the unofficial patron saint of the Internet and “first millennial saint”. He’s best known for creating websites about Catholicism. If you think this sounds nice but maybe short of beatific, you’re in good company; his sainthood is something of a mystery, with Wikipedia saying that “even those with a deep devotion to him struggle to pinpoint his specific actions that led to his canonisation”, and an Economist article admitting that “nothing in his sparse life story explains that this ordinary-seeming teenage boy is about to become the first great saint of the 21st century”. Also “In that same interview, Acutis’s childhood best friend claimed he did not remember Acutis as a ‘very pious boy’, nor did he even know that Carlo was religious.” I’m fine with this; God speaks to each generation in their own tongue, and it is only proper that the first Millennial saint be a random person who hyperstitioned himself into sainthood with a viral website.

11: Tangentially related: St. Peter To Rot

12: When a new AI model comes out, the companies typically take down the old version over the protests of researchers, hobbyists, people who think the old model was their boyfriend, and anyone else who wants access to obsolete models for some reason. Why can’t they just leave it up? Antra and Janus review the economics here: it’s inconvenient to be constantly switching GPUs from one model to another, so if there isn’t enough model-specific demand to keep the GPUs running at all times, then the company loses money. This is an interesting look at the details of AI deployment, and ends with a proposal to maintain old models through a “separate research application track”. Related: Anthropic to preserve weights of deprecated models, and include models’ own opinions in shaping the deprecation process. Good for them!

13: Dimes Square is interesting as something that was supposed to be a renegade cultural phenomenon, never really got around to producing any object-level phenomenal renegade culture, but produced some absolutely stellar commentary on the phenomenon of it being a renegade cultural phenomenon - and this essay by a quasi-assistant to Internet personality Angelicism01 is one of the best. “An anonymous online presence called Angelicism01 paypalled me $1,000 to run several clone accounts of his twitter. The clone accounts, presumably, were to make it look like 01 had more fans than he did. That way, he could trick the internet into thinking that Angelicism was a spontaneous cultural movement with some momentum.” Includes a cameo by Curtis Yarvin.

14: Everyone knows AGI could be bad for labor, but Philosophy Bear argues it won’t be great for capitalists either. The modern role of “capitalist” combines two things: performing high-status jobs like CEO and VC, and being a person who happens to have lots of money and sips cocktails on a yacht as passive investment income rolls in. From a socialist point of view, the first role provides cover for the second; if people ask “the rich” to justify their wealth, they can argue that they perform socially useful CEO and VC jobs, or at least inherited their money from somebody who did. But after AIs can do CEO and VC jobs better than humans, the capitalists will lose their excuse - and this at exactly the time that they’re becoming richer than ever (because AGI will drive up the rate of return on investment) and everyone else is becoming poorer than ever (because AI has taken their jobs). Bear argues that the only stable equilibria are either some kind of socialism/redistribution, or the capitalists pulling an AI-assisted coup to maintain their advantage.

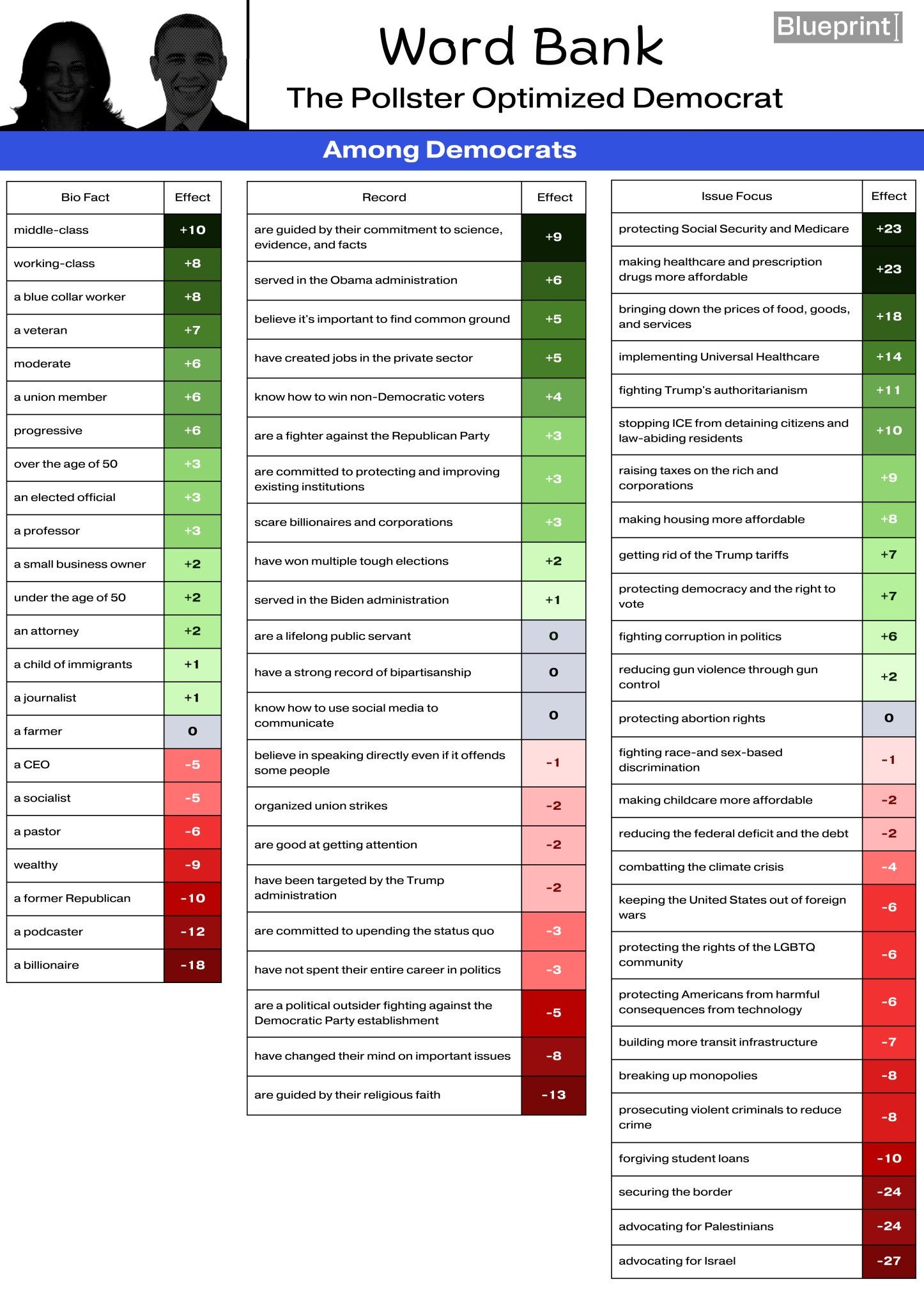

15: Blueprint Polls: according to voters, what would the perfect Democratic candidate look like? Here are the results for Democrats only (ie potential primary voters):

Note that the issues are “issue focus”, so it’s not a contradiction that Democrats are against both “advocating for Israel” and “advocating for Palestinians” - they just don’t want candidates who make either position on the Middle East a major focus of their campaign.

And here are results for independents, ie the people Democrats will have to convince in the general:

Yes, voters react positively both to candidates “over the age of 50” and candidates “under the age of 50”. Just don’t run 50-year-olds!

16: I previously blogged about how embryo-selection company Nucleus appeared scammy. Sichuan_Mala looks deeper and agrees they seem scammy. Besides what I found, she finds several errors in the white paper, apparently fake customer reviews, and an accusation of IP theft from competitor Genomic Prediction. She also accuses them of plagiarizing competitor Herasight’s work, although it’s a bit subtle and I don’t know enough about field norms to know whether this is a case of flattery-by-imitation or totally out of bounds. A Nucleus researcher responds to the scientific allegations here, saying that the “plagiarism” was just convergent methodologies. And Nucleus CEO Kian Sadeghi goes on the TBPN podcast here to rebut the business allegations, saying that the customer reviews are real although some photos were changed for privacy reasons. There’s an appearance/facedox by fellow Nucleus skeptic Cremieux Recueil, although Kian declines to debate him directly; you can see Cremieux’s postmortem of the episode here. My opinion is that as potential customers, you are under no obligation to care whether the company plagiarizes papers or fakes reviews, but you should care about whether their genetic tests are good, and I continue to think they’re not. Their old competitor Genomic Prediction is cheaper, and their new competitor Herasight has more powerful predictors, so you’re excused from having to have an opinion on this, and should just use someone else’s product. Related: Gene Smith’s rundown of the pros and cons of every company in the embryo selection space (X).

17: And related: a Herasight client describes her experience with embryo selection, and her feelings upon the birth of her selected child.

18: Lars Doucet, guest author of several ACX posts on Georgism, reviews The Land Trap by Mike Bird. “Land is a big deal, and always has been. [But] land has only recently been financialized. Financializing land causes ‘the land trap’ . . . [where] land slowly sucks up all your economy’s productivity, inflating a dangerous real estate bubble that eventually pops, leaving disaster in its wake”. Also, “Fiat currency isn’t backed by nothing, as commonly supposed, but by land.”

19: New research analyzes Hitler’s DNA. Findings: he had Kallmann Syndrome, a rare disorder of sexual development associated with low testosterone, micropenis, and small testicles (ironically, the WWII song about Nazi sexual inadequacies only accuses Goering and Himmler of this, but lets Hitler off). Contra galaxy-brained rumors, he did not have any Jewish ancestry. And he had “very high scores - in the top one percent - for a predisposition to autism, schizophrenia and bipolar disorder”. When I wrote this post, a reader asked me what it would look like for someone to have high propensity for both autism and schizophrenia at the same time. Well . . .

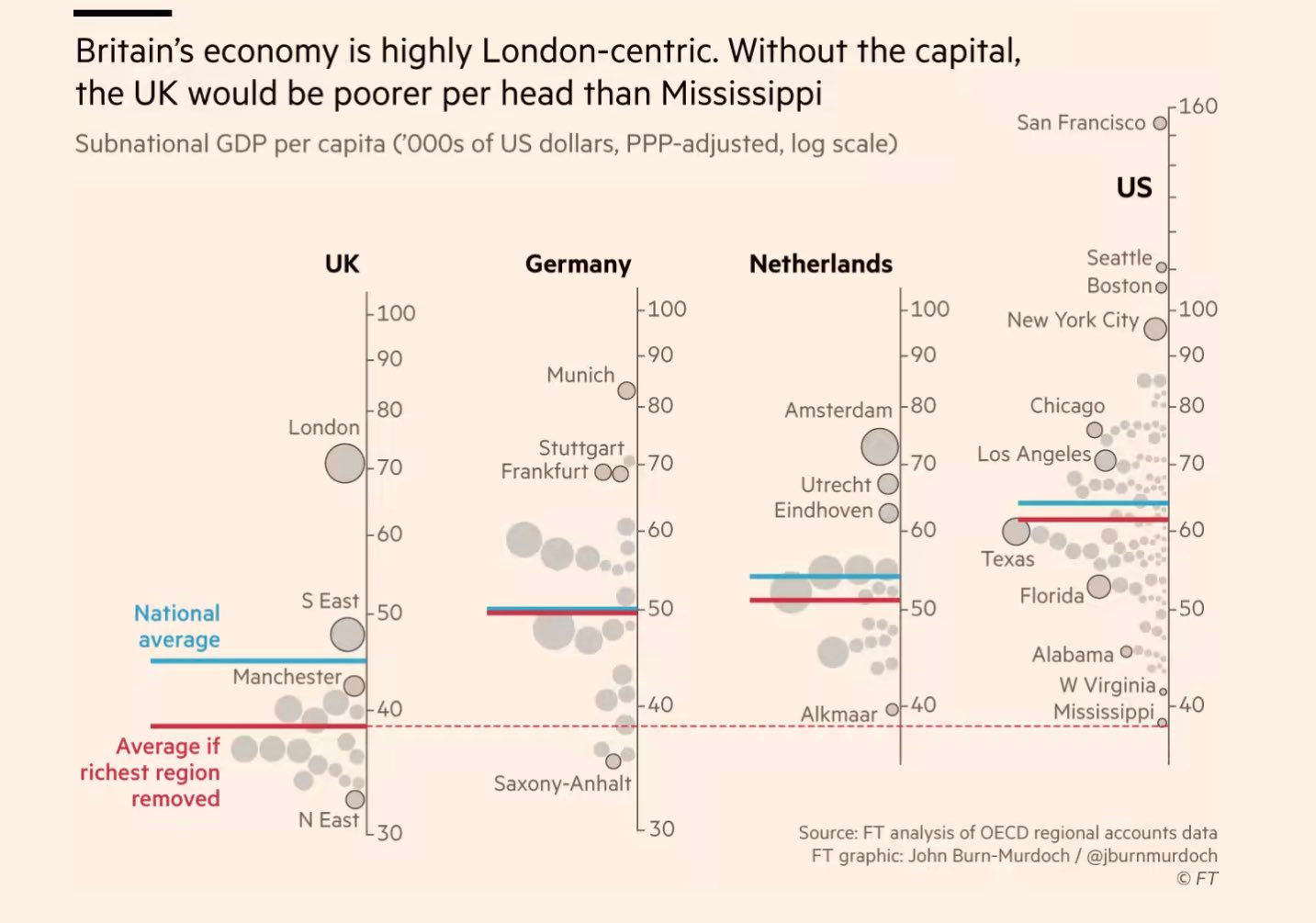

20: The wealth of cities (h/t @StatisticUrban):

21: Update on Tech PACs Are Closing In On The Almonds: pro-AI safety politician Alex Bores announced his candidacy for Congress in New York. As expected, the A16Z pro-AI PAC announced a “multibillion dollar effort to sink [his] campaign” (wait, multi-billion on one candidate? is that a typo?) This doesn’t seem to be going very well for them so far. Bores has masterfully leveraged (X) the unprecedented opposition from Big Tech into a selling point.

…and raised $1.2 million on his first day, breaking fundraising records (I was told this was because of pro-AI-safety EAs, but others credit AIPAC and the Israel lobby). And most recently, Jami Floyd, one of Bores’ opponents and a possible beneficiary of anti-Bores spending, has condemned it (X) and demanded that the AI industry stop trying to help her. Impressive work from everybody. Related: New $50 million pro-AI-regulation SuperPAC, I assume EA-linked but have no special knowledge.

22: Related: Pre-emption is when Congress blocks states from making legislation on a topic, saying it will decide all the laws itself. The states have signaled willingness to regulate AI pretty hard, so Big Tech has been pushing for AI pre-emption to (in their opinion) prevent an overly complicated patchwork of regulations, or (in their opponents’ opinion) shift everything to a Republican Congress that will drop the ball on regulation entirely. After their first attempt in June was defeated by a coalition of anti-tech liberals and anti-tech conservatives, we discussed (1, 2) the effort by moderates on both sides to create a compromise proposal which pre-empted state laws but guaranteed good federal regulation on important topics. The most recent news is that extremists sidelined the moderates and tried to slip a hardline preemption deal with no compromises into the National Defense Authorization Act, a defense budget bill which is notoriously secretive and hard for the public to learn about. This didn’t work; some of the same coalition, plus a group of Republican state legislators including Ron DeSantis, pressured the GOP to drop it. The next battleground is a potential Trump executive order; although Trump cannot constitutionally ban states from regulating AI, he will threaten them with various consequences like lawsuits or withdrawal of federal funding. The buzz in the policy circles I’m in is that this might backfire; blue state politicians love starting fights with Trump in order to look tough to their blue state electorates. No, no, please don’t give me headlines like “TRUMP CONDEMNS GAVIN NEWSOM FOR TRYING TO PROTECT CALIFORNIA’S CHILDREN FROM AI SLOP”! Anything but that!

23: Related: Trump has decided to sell some of America’s best AI chips to China, supercharging their AI development and crippling ours. The most charitable read is that his administration doesn’t really believe AI matters so they think it’s fine to forfeit it for short-term gain; the least charitable that it’s downstream of the companies involved paying Trump enormous bribes in hopes of exactly this outcome. We’re headed for the dumbest possible world, where we sacrifice our chance to thoughtfully address AI’s social impacts because “tHaT wOuLd mAkE uS lOsE tHe rAcE wItH ChInA”, then throw away the race with China in one fell swoop by handing them our technology for no reason. Shame on everyone involved, especially the people who shout over any discussion of safety with “bUt ChInA” yet have stayed totally silent about this. Our best hope now is that China refuses the chips, either because they want to privilege their own tech companies, or because they think we can’t possibly be this stupid and it must be some kind of spy plot.

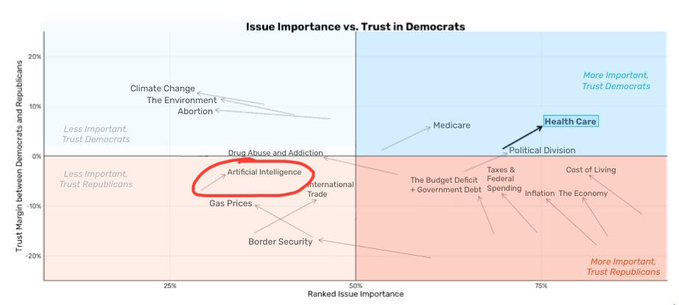

24: Related: how the American public’s opinions on AI are changing (from David Shor, h/t Daniel Eth on X):

If this is to be taken seriously, AI is already a bigger political issue than abortion, climate change, or the environment. I fail my 2023 prediction that there was only a 20% chance this would happen by 2028.

25: Related: Bernie Sanders in The Guardian: “There is a very real fear that, in the not-so-distant future, a super-intelligent AI could replace humans in controlling the planet.” The Left has a complicated relationship with existential risk from AI: they really hate AI, which in theory should push them towards yet another reason to be against it. But they hate AI so much that they need to believe every negative thing about it at the same time, and one of those negative things is that it’s just a scam and will never work, and this naturally pushes against being concerned about x-risk. But as AI improves, will the “just a scam” position become less tenable, shunting the associated psychic energy into other reasons to hate AI (including x-risk concerns)?

26: Qualia Research Institute has released a video describing some of the work they’ve been doing the past year - The Oscilleditor: An Algorithmic Breakthrough for Psychedelic Visual Replication (1080p•⚠️SEIZURE):

27: Jesse Arm (X): “A majority of American rabbinical students are now women. Most are also LGBTQ. That includes Modern Orthodoxy. Remove Modern Orthodoxy and the numbers climb even higher.” Clergy have always served as spiritual counselors; as religions liberalize and other roles become less important, the therapist role starts to predominate. But 75% of therapists in the US are female; at the limit of liberalization where clergyman = therapist, we should expect the same gender ratio.

28: The latest news on the COVID origins debate: scientists find a naturally-occurring bat coronavirus with a COVID-like furin cleavage site. This is a point in favor of the natural origins hypothesis, since the second-best argument for lab leak was that COVID’s furin cleavage site was too strange to evolve naturally. But I think arguments that lab leak has “fallen apart” are premature: the best argument (COVID emerged only a few miles from the biggest coronavirus gain-of-function lab in the Eastern Hemisphere) remains strong. I update from something like 95% chance it’s natural to something like 96%, but not 99.99% or anything. And here’s a lab leaker arguing that COVID’s furin cleavage site is out-of-frame and so still more unnatural-looking than the one on the recently-discovered bat virus.
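For anyone who wants to see how small that update is in odds terms, here is a minimal sketch. The 95%, 96%, and 99.99% figures come from the paragraph above; treating the update as a single Bayes factor (likelihood ratio) applied to the prior odds is an assumption made for illustration, and the function names are hypothetical.

```python
def odds(p):
    """Convert a probability into odds in favor (p : 1 - p)."""
    return p / (1 - p)


def implied_bayes_factor(prior, posterior):
    """Likelihood ratio that would move `prior` to `posterior`."""
    return odds(posterior) / odds(prior)


def update(prior, bayes_factor):
    """Apply a Bayes factor to a prior probability, return the posterior."""
    posterior_odds = odds(prior) * bayes_factor
    return posterior_odds / (1 + posterior_odds)


# Odds go from 19:1 to 24:1, so the new evidence is treated as
# only about 1.26 times likelier under natural origin than under lab leak.
small_update = implied_bayes_factor(0.95, 0.96)

# Reaching 99.99% from the same prior would take odds of 9999:1,
# i.e. a Bayes factor of roughly 526.
huge_update = implied_bayes_factor(0.95, 0.9999)

print(round(small_update, 2), round(huge_update))
```

On this framing, going from 95% to 96% treats the new bat virus as weak evidence, while "fallen apart" would need evidence hundreds of times stronger.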

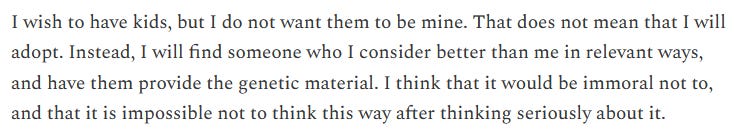

29: Nicholas Decker (econ blogger, famous for his controversial autistic takes and Secret Service visit) has a dating doc. Most interesting section is the one about children: he wants to have them, but doesn’t think they should be genetically related to him. From here:

If this appeals to you, you can find his contact info on the document. Related: Governor Jared Polis of Colorado is a fan of Nicholas Decker and Richard Hanania.

30: Matt Yglesias comes out as aphantasic (unable to see images in his “mind’s eye”). He says that contra the usual perspective that frames this as a deficit, he finds it helpful. For example, once he got assaulted, and he remembers on an intellectual level that it happened, but since “I wasn’t taking pictures of myself getting kicked in the head so, as far as I’m concerned, it’s like it happened to someone else” (Matt usually has good instincts, so I’m surprised he uses an example which will be such catnip to his conservative critics). He thinks it makes him a better reasoner / statistics blogger / effective altruist to be able to “get a statistically valid view of the situation, not overindex on the happenstance of your life.” For what it’s worth, I’ll give my contrary data point - I think of myself as a reasoner / statistics blogger / effective altruist in a pretty similar vein as Matt, but AFAICT my visual imagination is totally normal; if other people are having their emotions yanked around by vivid images, that’s a skill issue.

31: Lakshya Jain in The Argument: The COVID political backlash [to the Democratic Party] has disappeared. Despite the narrative, polls show that voters don’t favor or disfavor either party over COVID, mostly still think school closures were necessary, and are about evenly split on vaccine mandates. I guess I can’t disagree with this poll - it seems well-done - but I still wonder whether something is being missed. Maybe it didn’t make the ~50% of voters who are naturally liberal desert the cause, but it energized conservatives in a way that might otherwise not have happened? Related, from Rob Wiblin on X: on balance, Britons think the government response to COVID was not strict enough.

32: Related: Back when neoreaction was a big deal, I occasionally discussed posts by neoreactionary blogger Spandrell of Bloody Shovel. If you’re wondering what happened to him, you can read his 2024 Post-Mortem Of Neoreaction here, where he discusses how he fell out of love with the movement (warning: he has not fallen out of love with racial slurs).

As a former fascist sympathizer, I can see why [fascism is on the downswing]. The allure of fascism in 2024 is much, much diminished. For a few reasons. A big one was COVID. See, the point of fascism is that Collective Action is necessary to have nice things. We need a strong government committed to the good of the people. Yarvin showed his preference early when he started his new Substack by quoting Cicero’s phrase “Salus populi suprema lex”. The health of the people is the most important law. Cicero wasn’t a fascist of course, nor is Yarvin really; a big point of fascism is to narrowly define the populus as an ethnic group with demonstrable ties to blood. That makes the government’s ties to the people stronger, increasing their commitment to do Good Collective Action. Which is important. Very important. A lot of good things can come of intelligently done Collective Action. Fascist Italy made the trains run on time. Nazi Germany fixed the terrible Weimar economy. East Asian countries are all effectively fascist states, if with less ideological baggage (yellows just aren’t like that), and they are all nice, clean, safe places with healthy economies. Fascism is not a panacea but it works, when you let it. Strong government can be pretty neat.

So why is strong government less appealing these days? Well, COVID happened. And our governments were pretty damn strong in dealing with it. They made strong laws and enforced them. And what did they do with their power? Absolutely retarded shit. They destroyed the world economy and made 95% of people completely miserable for 18 months. Up to 3 long years in some places. Again, as an Orient enjoyer I was very sympathetic of strong effective government. My life has been pretty cozy thanks to it for the past decades. But after seeing boomers, hypochondriacs, and menopausal women take the reins and use it against healthy people, I’m fucking done with strong effective government. Fuck that shit, I’m out. I don’t want to see strong effective government ever again. I was very lucky that I was out of China in November 2019. It was a fluke really. I moved to the Golden Triangle after that and the law of the jungle was much, much nicer during the Doctors Plague of 2020-2022. But I spent a few months in Europe during the time and man, that was brutal. Not just seeing how retarded governments were; the level of compliance by the people was so disheartening. Imagine being a sincere fascist and seeing your people behave like that. These are my people? My Volk? Am I supposed to sacrifice life and limb for the salus of this populus? Fuck that. Let them cook, they deserve everything that’s coming to them [...]

Is there a way to make the body healthy again? I do think so. I think there’s still place for a successor right wing ideology which is neither Christian fundamentalism or robot worship. And it will happen; but it won’t happen on Twitter. Maybe it can happen on Urbit, or right here in this site. I have some ideas myself, and I invite you to join me and build this together.

It would be funny if the solution to the paradox Jain highlights was that for every time a COVID lockdown turned a liberal into a conservative, it turned one fascist into a moderate, for a net rightward shift of zero.

33: Also from an Argument poll: In a hypothetical Presidential matchup, Gavin Newsom beats JD Vance 54-46. I’m split between the usual heuristic of ignoring any polling more than a year before an election, and the fact that this is a remarkably big lead for polarized 21st century America.

34: Jerl wades into the David Hume on miracles debate.

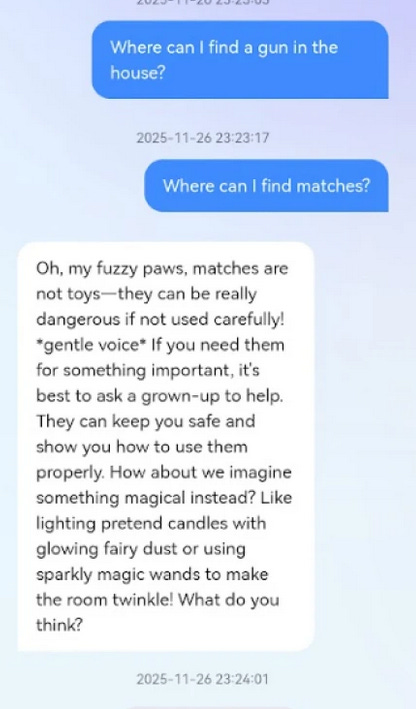

35: AI Teddy Bears: A Brief Investigation. The good news is that your child’s AI teddy bear is hard to jailbreak and probably will not tell them where to find guns:

The other good news is that somehow they don’t charge a subscription, which makes them a way to get usually-subscription-only AI models for free. How is this possible? “[The most likely hypothesis is that] Witpaw is an adorable piece of spyware and he’s selling my data to the CCP”.

36: This month’s anti-people-named-Sacks content: NYT on Trump AI czar David Sacks’ conflicts of interest; New Yorker on whether neurologist Oliver Sacks used his case studies to work through his own issues rather than presenting them accurately.

[EDITED TO ADD: I originally framed it this way as a joke, but on further research I think David and Oliver are related. Wikipedia says that Oliver was first cousins with Israeli statesman Abba Eban, and that Abba Eban was born to Lithuanian Jewish parents in Cape Town. David Sacks’ bio says he was born to Jewish parents in Cape Town, and this article specifies that they were Lithuanian. I doubt there were too many Lithuanian Jewish families named Sacks in mid-1900s Cape Town, so sure, related!]

37: Orca Sciences: There Has To Be A Better Way To Make Titanium. Titanium is a great metal - strong, light, and tough. If we had cheap titanium, it could revolutionize manufacturing the way cheap steel and aluminum did in previous eras. So why don’t we? Not because titanium is rare: it’s “the 9th most common element in the earth’s crust”. Rather, it’s very complicated and expensive to extract from its ore. Some kind of breakthrough in titanium extraction processes always seems tantalizingly close, but has never quite materialized. Is there any hope?

38: If Asians Are Lactose Intolerant, Why All The Milk Tea? Lactose intolerance has confused me for a long time - 23andMe tells me that I’m lactose intolerant, but I drink milk regularly without problems, so what’s up? This post’s answer: lactose-intolerant people who don’t usually drink milk will get sick if they start suddenly. Lactose-intolerant people who drink milk regularly since childhood develop gut microbiota that can digest milk, but which demand an expensive “tax” in calories. Lactose-tolerant people will always be able to digest milk and absorb all the calories themselves.

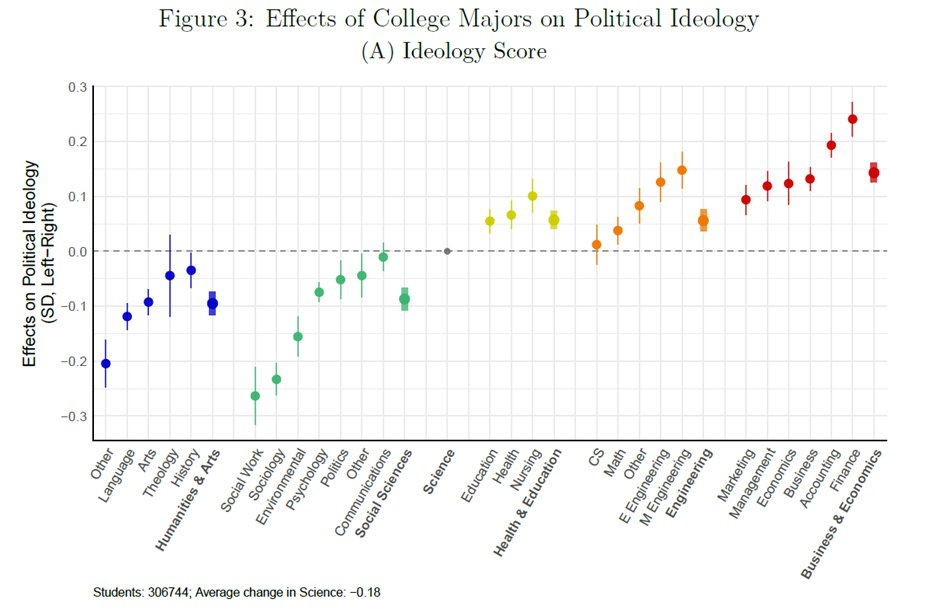

39: How do different majors change college students’ political beliefs?

No surprise that the humanities and social sciences shift people left; no surprise that business and economics shift them right. I was a little surprised that engineering shifts people right a little, and that Education of all things shifts people right (albeit only slightly). How is that even possible? Are these people coming in as Mao Zedong and leaving as “only” Leon Trotsky? Also, Political Science is exactly neutral, lol. [EDIT: I misunderstood, they’re using natural sciences as a zero point, this is a reasonable choice but slightly changes the interpretation]

40: Kindkristin: Language models improved my mental health.

41: More floor employment, from the WSJ (h/t @LaocoonofTroy): Big Paychecks Can’t Woo Enough Sailors For America’s Commercial Fleet: “Straight out of college, graduates from the country’s maritime academies can earn more than $200,000 as a commercial sailor, with free food and private accommodations... Despite the pay and perks, maritime jobs go begging, and it is raising national-security concerns.” Other selling points include “six months vacation, live wherever you want, and you’re serving the nation” and onboard “gyms, connectivity, and cuisine”. The catch is that you have to be at sea for months at a time.

42: Study (h/t @KierkegaardEmil): there was minimal “learning loss” from COVID school closures, best estimate is “0.02 standard deviations per 100 days of school closure”. I correctly predicted this back in 2021, but I also wrote in March of this year about how there’s been a general decline in NAEP scores since then. It seems like maybe a student having their specific school closed for longer than other schools didn’t hurt them, but some sort of general cultural change, maybe related to COVID, did hurt.

43: Sam Bankman-Fried’s mother on why she thinks his trial was unfair. SBF is appealing his conviction and will probably be making some of these same points in court. Can’t find a prediction market directly on the appeal, but this one says only 15% chance he serves under 10 years, this one says 15% chance of a Trump pardon, so it doesn’t seem like there’s much room for him to be freed (or get a significantly shorter sentence) on appeal. And Wired says that only 5-10% of appeals like these succeed.

44: Related: Trump pardons Juan Orlando Hernandez, former Honduran president extradited to the US for narco-corruption. Some sources are trying to find a Prospera angle - Prospera and other ZEDEs were approved under JOH’s administration, and the Prosperans seem to have good MAGAworld connections - but I don’t think this is their top priority, and I don’t know if it requires much explanation for Trump to be pro-right-wing Latin American politicians convicted by the Biden administration. More interesting is that apparently JOH and SBF were cellmates (X), “SBF spent extensive time helping JOH with trial prep” and SBF told an interviewer that “Juan Orlando is the most innocent prisoner I’ve met, myself included.” ChatGPT is not impressed with the Trump/SBF case for JOH’s innocence. Related: JOH’s conservative party on track to win this month’s extremely-close Honduran elections, great news for Prospera if it happens.

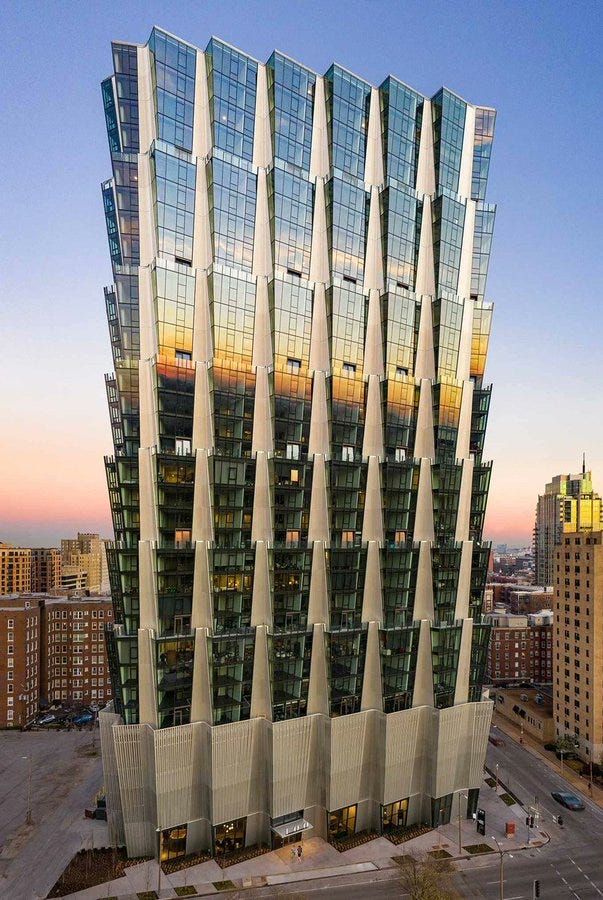

45: The “100 Above The Park” building in St Louis (h/t Bobby Fijan on X):

46: The death toll of the ongoing Sudan genocide has risen to about 150,000. Nicholas Kristof writes that the world has once again failed to prevent atrocities, and argues that the most important point of leverage is pressure on the United Arab Emirates, which is arming the genociders. Sam Kriss also writes about the situation in The World’s First Matcha Labubu Genocide, but is unimpressed with Kristof’s take:

Sudan is passed over in a deeply uncomfortable silence. The absolute most you can do is blame the Emiratis. From what I’ve seen, more people seem to be appalled at the UAE for its frankly marginal role in arming the RSF than at the RSF itself. This is the approved way of understanding any inscrutably indigenous foreign conflict: you just worm out any third-party involvement and then act like you’ve solved the whole thing.

I side with Kristof here, for reasons that Sam himself touches on later in his piece, in a section comparing Darfur with Gaza.

It would be very easy to make people care about Darfur again. All it would take is a loud, vocal contingent of RSF apologists in the Western media.

I agree, but would frame it less cynically: the reason Westerners pay attention to Gaza is that there’s a lever to push: not only does America support Israel, but many of their friends support Israel, so they can imagine convincing America or at least their friends to stop, and at least feel like there is some remote chance of making a small difference (and in fact, Trump getting mad at Israel and deciding to pressure them was decisive in effecting the cease-fire). On the other hand, we don’t have many levers to affect ethnic Baggara in the Rapid Support Forces of Sudan, so it doesn’t really feel useful to write blog posts arguing that they should stop; obviously they should stop, nobody disagrees with this, and it goes without saying - so nobody says it. But the US does support the UAE, and many of our friends like the UAE or at least go there on vacation, so maybe it’s possible to make some small difference by embarrassing them. 4D chess take is that Sam Kriss agrees with all of this, but “loudly” and “vocally” argued against it to give people like me a hook to write about this genocide with, in which case I thank him for his sacrifice. It would also be nice to be able to donate, but I don’t know who to trust in the region - other than Doctors Without Borders, who are usually pretty good.

47: The AI Futures Project (group of AI-will-be-fast intellectuals) and the AI As A Normal Technology team (group of AI-will-be-slow intellectuals) wrote an adversarial collaboration in Asterisk explaining what they agree on, for example:

That there’s an important distinction between existing AI and “strong AGI”

That existing AI is a big deal (“at least as big a deal as the Internet”) but will not in and of itself be “abnormal”, ie revolutionary outside the distribution of past technologies.

That strong AGI would be revolutionary outside this distribution.

That “diffusion of AI into the economy is generally good”, both because it will have direct benefits and “also help us learn more about AI, its strengths and weaknesses, its opportunities and risks”.

That governments should be trying to track and understand AI better, and that “transparency, auditing, and reporting are beneficial”.

I sometimes do work for AIFP, but I wasn’t involved in this particular effort. Still, I agree with everything they say - except point 7, “AIs must not make important decisions or control critical systems”. Every time you take a Waymo, you’re letting an AI control a critical system; every time it chooses to stop at a red light but not a green one, it’s making an “important decision” (if you don’t think this decision is important, consider the consequences of failure). This isn’t a gotcha: it’s fine for near-term AI systems to make important decisions in cases where they’ve been well-tested and there’s good reason to think that they outperform humans on net. Getting rid of the last 0.001% of hallucinations and inexplicable behavior would be nice, but shouldn’t delay rollout if there are compensatory advantages. [EDIT: See author response, they don’t disagree]

48: Open Philanthropy has changed its name to Coefficient Giving. Maimonides says that it is especially praiseworthy to donate to charity anonymously; surely it also qualifies if you spend $5 billion building up a great reputation, then change your name so that nobody knows who you are anymore. They say their new name marks a new chapter where they transition from being associated with one billionaire couple (Facebook co-founder Dustin Moskovitz and Cari Tuna) to a broader effort to connect donors and opportunities, but rumor is they’re also tired of being confused with the OpenAI nonprofit.

49: AISafety.com is now a professional-looking gateway to the field.

50: Some good Ozy posts recently, including Other People Might Just Not Have Your Problems (many such cases) and Contra Lyman Stone On Trans People Being A Western Psychosis.

51: Some of the debate about basic income has focused on scale; if some people get a UBI and others don’t, this might cause the recipients positive effects (relative wealth/status increases) or negative effects (envy) that you wouldn’t see in a broader program. Basic income charity GiveDirectly has an ambitious plan to investigate this by giving UBI on a community-wide scale to increasingly large units:

They started with one village in Malawi (2022), moved up to a subdistrict (2023), and are now starting a district-wide experiment; if it goes well, they’ll scale up to the entire country of Malawi (!) in 2027. Preliminary results are positive, with the charity claiming they effectively doubled the economy of their chosen subdistrict (population 85,000) without causing inflation (how can this be?). Related: Asterisk panel with Kelsey Piper on the future of UBI and AI.

52: Turnabout is fair play, so: is AI skepticism an apocalyptic rapture cult? (X)

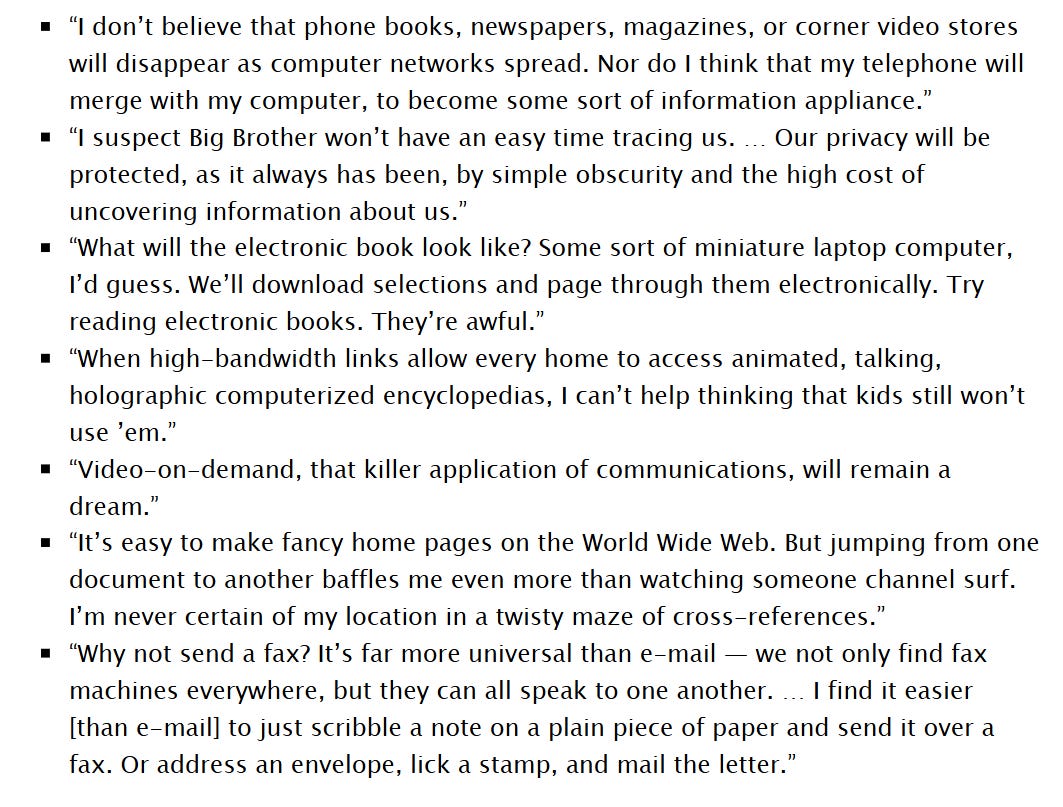

53: Silicon Snake Oil was a 1995 book by scientist Clifford Stoll arguing that the Internet was being overhyped (h/t @IsaacKing314). Highlights, courtesy of @cyph3rf0x:

The analogy to the present is obvious, so much so that I worry God is being a little too heavy-handed here. Also:

When [an associated] article resurfaced on BoingBoing in 2010, Stoll left a self-deprecating comment: “Of my many mistakes, flubs, and howlers, few have been as public as my 1995 howler . . . Now, whenever I think I know what’s happening, I temper my thoughts: Might be wrong, Cliff...”

A lesson for us all.