Your Review: The Synaptic Plasticity and Memory Hypothesis

Finalist #11 in the Review Contest

[This is one of the finalists in the 2025 review contest, written by an ACX reader who will remain anonymous until after voting is done. I’ll be posting about one of these a week for several months. When you’ve read them all, I’ll ask you to vote for a favorite, so remember which ones you liked]

I. THE TASTE OF VICTORY

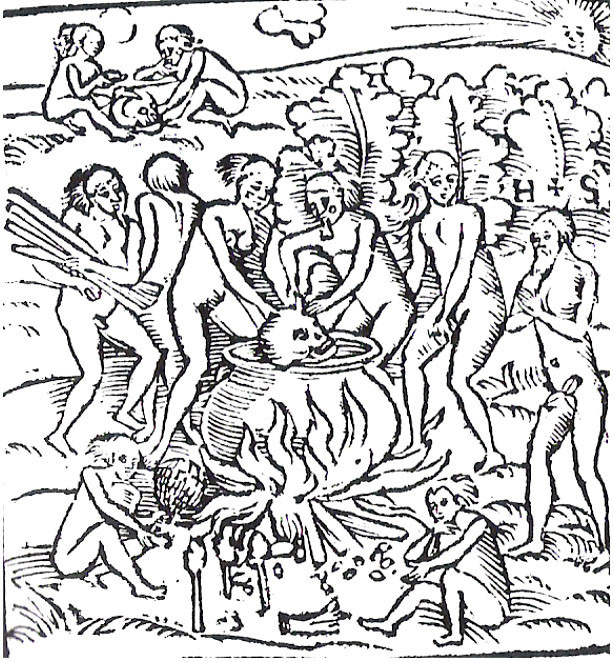

The Tupinambá people ate their enemies. This fact scared Hans Staden, a German explorer who was captured by Tupinambá warriors in 1554, when they caught him by surprise during a hunting expedition. As their prisoner for nearly a year, Staden observed a number of their cannibalism rituals. They were elaborate, public affairs; here’s a description of them from Duffy and Metcalf’s The Return of Hans Staden, an assessment of Staden’s voyage and claims: (Ch. 2, pg. 51-52)

First a rope was placed around the neck of the captive so that he might not escape; at night the rope was tied to the hammock in which the captive slept. Straps that were not removed were placed above and below the knees. The captives were given women, who guarded them and also slept with them. These women were high-status daughters and sisters of chiefs; they were unmarried and sometimes gave birth to the child of a captive. Some of the captives might be held for a period of time until corn was planted and new large clay vessels—for drink and cooking flesh—were made. Guests were invited to the ceremony, and they often arrived eight to fifteen days in advance of it. A special small house was erected, with no walls but with a roof, in which the captives were placed with women and guards two or three days before the ceremony. In the other houses, feathers were prepared for a headdress or for body ornamentation, and inks were made for tattoos. Women and girls prepared fifty to one hundred vats of fermented manioc beer. Then, when all was ready, they painted the victim’s face blue, mounted a headdress of wax covered with feathers on him, and wound a cotton cord around his waist. The guests began to drink in the afternoon and continued all through the night. At dawn, the one who was to do the killing came out with a long, painted wooden club and smashed the captive on the head, splitting it open. The attacker then withdrew for eight to fifteen days of abstinence while the others ate the cooked flesh of the captive and finished all of the drink made for the occasion.

Staden himself was supposed to be eaten, but through a mix of luck and deception managed to convince the Tupinambá that he should not be. Among other reasons, he claimed that their God didn’t like the idea of him being eaten: (Ch. 2, pg. 61)

When the families in Nhaêpepô-oaçú’s hut began to return from Mambucabe, they came sobbing with terrible news. Disease had broken out during the two weeks when Nhaêpepô-oaçú and his people were there rebuilding the long houses that had been burned down by the Tupinikin. … Shocked at the impact of the disease that descended so suddenly and with such devastating results, the Tupinambá struggled to understand the meaning of the outbreak. Staden’s prophecy of the anger of the moon was reinterpreted to foretell the sickness that befell them. Nhaêpepô-oaçú’s brother came to Staden and said: “My brother suspects that your God must be angry.” Staden immediately insisted that this in fact was the case: “I told him yes, my God was angry, because he wanted to eat me.”

His final deception was to talk his way onto a French ship, the Catherine, in late 1554. After four months crossing the Atlantic, Europe was in sight, and he was free. He later wrote a bestselling memoir about his time among the Tupinambá, whose English translation was hilariously titled True History: An Account of Cannibal Captivity in Brazil.

At one point in his book, Staden recounts admonishing a Tupinambá warrior named Cunhambebe about eating human flesh. From Duffy and Metcalf: (Ch. 2, pg. 67)

Staden writes that Cunhambebe held a leg to his mouth and asked him if he wanted to eat it. Staden refused, saying that even animals did not eat their own species. Cunhambebe replied in Tupi, according to Staden: “Jau war sehe [Jauára ichê]”: “I am a tiger” (i.e., the American jaguar). Then he said, Staden writes, “it tastes good.”

Why did they eat their enemies? Partly for revenge, partly because well-cooked people (apparently) taste good, and partly because the associated festivities were fun. But another reason might have been that they could obtain some of their enemy’s strength—their courage and bravery, for example—by eating them. At least according to Wikipedia,

The warriors captured from other Tupi tribes were eaten as it was believed by them that this would lead to their strength being absorbed and digested; thus, in fear of absorbing weakness, they chose only to sacrifice warriors perceived to be strong and brave.

I had a hard time finding support for this claim elsewhere. The closest I could find was some discussion by Neil Whitehead, an anthropologist and one of the translators of a recent English version of Staden’s memoir, regarding possible motives for Tupinambá cannibalism rituals beyond revenge. He writes:

… it is Staden’s testimony in particular that allows latter-day interpreters to escape the sterile vision of Tupi war and cannibalism as merely an intense aspect of a revenge complex. By making the crucial connection between killing and the accumulation of beautiful names, as described by Staden, Viveiros de Castro is able to elaborate the motivations for war and cannibalism beyond the ‘revenge’ model …

Whether or not the Wikipedia claim is true, the idea that you can acquire some of a person’s essence by eating them isn’t unique to the Tupinambá; it’s enough of a meme that it has its own TVTropes page. You’re probably familiar with at least some of the examples listed there.

From a modern vantage point, it’s clear that if groups like the Tupinambá practiced cannibalism for the purpose of acquiring aspects of their enemies, they were misguided. Maybe they could get some protein by doing this, but they certainly couldn’t acquire any of the things that make us fundamentally human, courage and bravery included. You can’t get anything cognitive—personality! memories! identity!—by eating someone’s arm, or leg, or heart. They’re just a bunch of molecules, no longer meaningfully linked to the core features of the former person.

Right?

II. PERSONAL IDENTITY AND HEART TRANSPLANTS

People that have gotten heart transplants sometimes report extremely weird changes afterward. Mitchell Liester, a doctor and Assistant Clinical Professor at the University of Colorado’s School of Medicine, has collected a bunch of examples of this in an article titled “Personality changes following heart transplantation: The role of cellular memory” (Medical Hypotheses, 2020). For example, an avid meat-eater received a heart from a vegetarian, and claimed post-transplant:

“I hate meat now. I can’t stand it. I was McDonald’s biggest money maker, and now meat makes me throw up. Actually, when I even smell it, my heart starts to race.”

Another woman, Claire Sylvia, received a heart-lung transplant from an 18-year-old man and seemed to acquire his taste for beer, green peppers, and chicken nuggets. The donor apparently liked chicken nuggets so much that they were found on him when he died.

But you might think that this isn’t that weird. Getting a heart transplant is a major operation that involves lots of drugs (e.g., for immune suppression and anesthesia) and surgery, and it’s at least plausible that the associated trauma to your body changes what foods taste good to you.

But some of the other accounts are weirder. A lesbian that received a heart from a 19-year-old heterosexual woman reports becoming predominantly sexually attracted to men:

“… I’m engaged to be married now. He’s a great guy and we love each other. The sex is terrific. The problem is, I’m gay. At least, I thought I was. After my transplant, I’m not… I don’t think anyway… I’m sort of semi- or confused gay. Women still seem attractive to me, but my boyfriend turns me on. Women don’t. I have absolutely no desire to be with a woman. I think I got a gender transplant.”

A man who received a heart from a passionate young musician reports suddenly becoming obsessed with classical music, listening to it for hours on end and greatly annoying his wife in the process:

“… he’s driving me nuts with the classical music. He doesn’t know the name of one song and never, never listened to it before. Now, he sits for hours and listens to it. He even whistles classical music songs that he could never know.”

Consider this snippet about a 5-year-old boy who received a heart from a 3-year-old boy:

Some recipients develop aversions after obtaining a new heart. For example, a 5-year-old boy received the heart of a 3-year-old boy but was not told the age or cause of his donor’s death. Still, he offered the following description of his donor following surgery: “He’s just a little kid. He’s a little brother like about half my age. He got hurt bad when he fell down. He likes Power Rangers a lot I think, just like I used to. I don’t like them anymore though”. The donor died after falling from an apartment window while trying to reach a Power Ranger toy that had fallen on the ledge of the window. After receiving his new heart, the recipient would not touch Power Rangers.

Liester includes a number of stories like this: a nine-year-old boy avoids water after getting a heart from a little girl who drowned; a college professor begins to have recurring dreams about a flash of light burning his face after getting a heart from a police officer who died in a shooting during a drug bust; a woman often feels the pain of the car accident that killed her donor. It’s possible that all these people are lying, but the phenomenon is apparently common enough that this seems unlikely. It also doesn’t seem likely that what they report is just due to a surgery-related brain injury. In many cases, organ recipients report information about their donor, including information about their name, or their cause of death, that they didn’t appear to have access to.

Claire Sylvia wrote a whole memoir, playfully called A Change of Heart, about the changes she experienced. Here’s a dream she wrote about, as quoted in a great Psychology Today article:

“I’m in an open outdoor place with grass all around. It’s summer. With me is a young man who is tall, thin, and wiry, with sandy-colored hair. His name is Tim—I think it’s Tim Leighton, but I’m not sure. I think of him as Tim L. We’re in a playful relationship, and we’re good friends.

“It’s time for me to leave, to join a performing group of acrobats. I start to walk away from him, but I suddenly feel that something remains unfinished between us. I turn around and go back to him to say goodbye. Tim is standing there watching me, and he seems happy when I return.

“Then we kiss. And as we kiss, I inhale him into me. It feels like the deepest breath I’ve ever taken, and I know that Tim will be with me forever.”

She inhaled him. That’s almost a bit too on the nose. She didn’t know it at the time, but her donor’s name was Tim Lamirande. Spooky, right?

Is there something special about the heart? In a newer article titled “Personality Changes Associated with Organ Transplants” (Transplantology, 2024) Liester and coauthors claim that other kinds of transplants, like kidney and liver transplants, can produce similar changes. It really seems like it’s the internalization of part of someone else that matters, not the internalization of their heart specifically.

I really encourage you to read these stories. They’re crazy. If even a quarter of them are true, they speak to something deep about the biology of personal identity and memory that we don’t yet understand. How could a heart recipient inherit memories of how their donor died?

Then again, the world is full of kooky stories about inexplicable phenomena, and many of these stories are probably fake, no matter how many people strongly and sincerely believe them. Everyone’s heard about out-of-body experiences and psychic phenomena. Despite the many believers in psi, and despite their many earnest accounts of events seemingly outside the bounds of science as we know it—heck, even despite serious attempts to study some of these phenomena, and secret funding from the U.S. government to do so, in some cases—we probably ought to remain skeptical.

And part of that skepticism comes from our hard-won knowledge about how the physical world works. For example, we probably shouldn’t believe someone that claims to be able to bend spoons with their mind, because the laws of physics don’t provide any plausible ways this can happen. As far as we know, some type of signal has to physically propagate from the body of the spoonbender to the spoon in order for them to bend it. And given our knowledge of possible physical signals, there are only so many ways this can happen. No one has found that spoonbenders’ brains produce any long-range waves, electromagnetic or otherwise, capable of bending a spoon. Since “the laws of physics underlying the phenomena of everyday life are completely known”, and are moreover extremely well-supported, we should be extra skeptical of claimed phenomena that appear to violate them.

(Side note: the firm belief that psi phenomena must occur in accordance with the laws of physics motivated a number of physicists to study whether quantum mechanics could explain things like spoonbending. Physicist and historian David Kaiser writes entertainingly about these physicists in How the Hippies Saved Physics.)

The relevant knowledge for evaluating these heart transplant stories is our knowledge of biology, and how human memory works. And everyone knows memories are stored in the brain, not the heart, so we ought to be highly skeptical.

They are just in the brain, right?

…Right?

…

This leads us to the subject of our review: the synaptic plasticity and memory hypothesis.

III. THE SYNAPTIC PLASTICITY AND MEMORY HYPOTHESIS

As formalized by Martin, Grimwood, and Morris in a 2000 review paper, the synaptic plasticity and memory hypothesis (SPM) claims:

Activity-dependent synaptic plasticity is induced at appropriate synapses during memory formation, and is both necessary and sufficient for the information storage underlying the type of memory mediated by the brain area in which that plasticity is observed.

More simply, it says that learning and memory amount to changes in synaptic weights, the strengths of the connections between neurons. According to this hypothesis, what it physically means to learn something, or store a memory, is to make one or more connections between neurons stronger or weaker.

For most people even vaguely familiar with neuroscience and the brain, this claim rings as trivially true. This idea is so entrenched, in fact, that I'd wager most people don't even know it has a name. (I didn’t, anyway.) The most popular quantitative models of the brain, artificial neural networks (ANNs), assume that the strengths of the connections between neurons, or “weights”, completely determine how networks behave. These are the things that are assumed to change during learning, maybe through an algorithm like backpropagation, or maybe through something that looks more like Hebbian learning. If these models learn something, or store a memory, it has to be through changes in weights.

In AI, too, weights are king. The term “weights” is even used as a synonym for “model parameters”. Weights are the numbers that completely characterize what a state-of-the-art model has learned through expensive training, and these days they can be ferociously protected trade secrets.

The SPM hypothesis is one of the most well-supported theories in the life sciences, and might fairly be called the cornerstone hypothesis of neuroscience. It’s one of the key assumptions of a framework called connectionism, which posits that all of the things that make us human—our ability to talk, think, reason, remember, and so on—follow from networks of interacting neurons, and changes in the strengths of connections between those neurons. It’s a framework that used to be somewhat controversial, but that most neuroscientists accept these days. And they accept it for good reasons, both empirical and philosophical; it’s been extremely successful!

And yet I think it’s wrong, or at least woefully incomplete. In this review, I’ll rant about why.

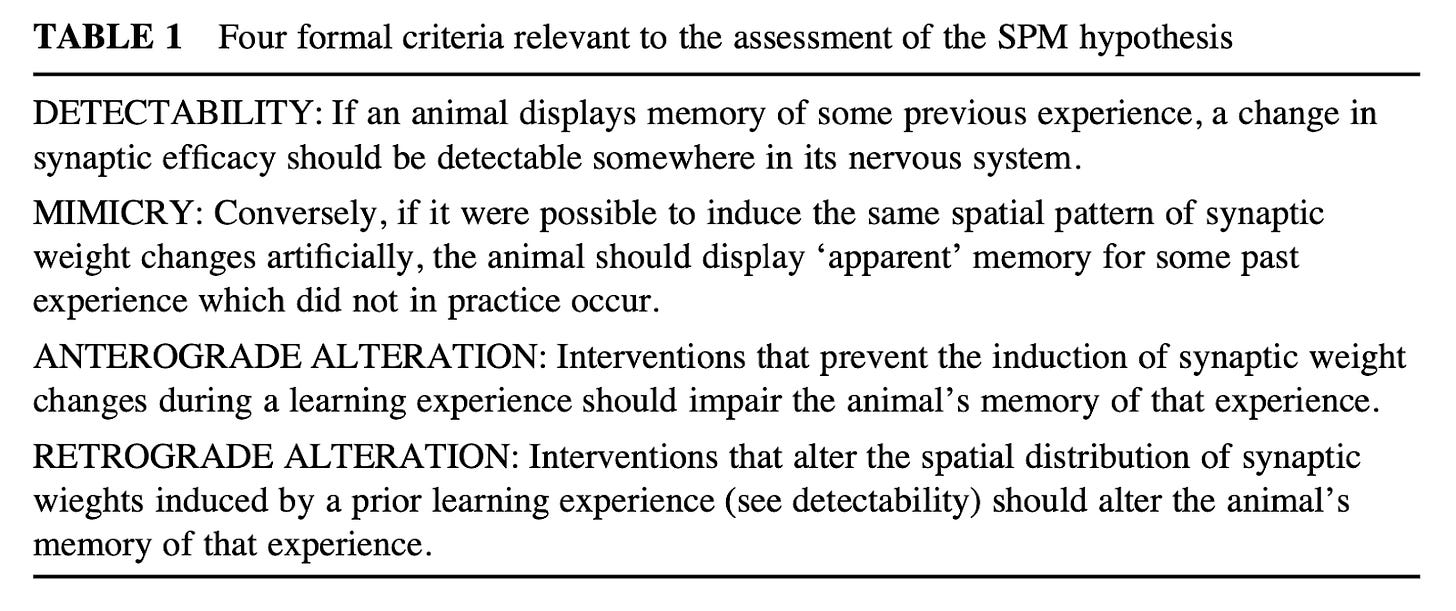

Usefully, Martin et al. lay out a set of criteria for deciding whether the SPM hypothesis is true:

But they really just boil down to necessity and sufficiency. Is a synaptic weight change necessary for an animal to learn or memorize something? If a mouse receives a shock (a common stimulus in fear conditioning experiments), could we in principle look inside its brain and find synaptic weights that encode the memory of that shock? If we found such weights, could we modify the memory, or even generate an entirely new fake memory, by perturbing them?

Is a synaptic weight change sufficient for encoding learning and memory? If we changed some weights, and only some weights, could we change a memory? Could we make a new memory from scratch just by modifying synaptic weights, or would we have to change something else too? Learning and memory happen if and only if synaptic weight changes happen; that’s the SPM hypothesis in a nutshell.
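As a rough shorthand for the two questions above, the claim can be written as a pair of implications. The symbols are illustrative only and do not come from the original paper: M stands for "the animal forms a given memory" and ΔW stands for "the appropriate synaptic weights change".

```latex
% Illustrative notation (not from the SPM paper):
%   M        : the animal forms a given memory
%   \Delta W : the appropriate synaptic weights change
\begin{align*}
\text{Necessity:}   \quad & M \Rightarrow \Delta W \\
\text{Sufficiency:} \quad & \Delta W \Rightarrow M \\
\text{Together:}    \quad & M \Leftrightarrow \Delta W
\end{align*}
```

Read this way, a memory formed with no accompanying weight change would count against the first line, and a weight change of the right kind that never yields a memory would count against the second.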

When I say that the SPM hypothesis is wrong, I mean it’s wrong in the same way that something like Newton’s laws are wrong: it’s useful, but its domain of applicability is limited. In particular, I am not saying that synaptic weight changes are not causally related to learning and memory. They almost certainly are, and this point is so well-established that saying otherwise just seems wrong.

To understand my issue, it’s useful to distinguish between what I’ll call the strong SPM hypothesis and the weak SPM hypothesis. The strong version says that learning/memory is literally physically equivalent to changes in synaptic weights. The weak version says that learning/memory can be stored in changes to synaptic weights, but isn’t only stored in them. I believe the weak version, but not the strong version.

In principle, worrying about this distinction could amount to pedantry. Maybe there are other mechanisms for learning or storing memories, but they’re weird edge cases, and mostly don’t matter for the kinds of learning and memory we typically care about. I don’t think this is true, for reasons that will become clear as we go on.

The crux of my negative review of the SPM hypothesis is this: cells are extraordinarily complex molecular machines, and there’s a lot going on inside of them that the SPM hypothesis implicitly neglects. We often abstract away most of the biophysical complexity of neurons, which are cells. As cells, they take up physical space, and can have weird, complicated shapes. They talk to other (not necessarily neural) cells. Each individual neuron has a complicated (gene regulatory) network inside it, whose complexity parallels that of many of our models of entire neural circuits. Do we really think that none of this complexity is involved in processes as complicated and multiscale as learning and memory?

You might respond: sure, neurons are cells and cells are complicated, but we can abstract away those details, and imagine that whatever is going on inside cells ultimately just serves the modification of synaptic weights. I also don’t think this is true, and will try to argue against it.

How should we proceed? First I’ll say a little bit about the history of the SPM hypothesis, so we know how we got here, and what competing ideas fell by the wayside. Then I’ll talk about necessity and sufficiency. Finally, I’ll talk about an alternative hypothesis that I think is more promising, and that can, among other things, potentially explain the weird heart transplant stories discussed above.

IV. A BRIEF HISTORY OF THE HYPOTHESIS

Especially since the early 2010s, when rapid success in AI started to drive significant progress in neuroscience (and led to many articles like “A deep learning framework for neuroscience”), the SPM hypothesis has become something of a dogma. Like I said above, the most popular models of real neural networks are artificial ones, which learn features and behaviors entirely through changes in synaptic weights. By design, this hypothesis class excludes other interesting possibilities.

But the SPM hypothesis wasn’t always the only game in town. Fortuitously, I found a chapter in a 1976 neuroscience textbook by Nobel Prize winner Eric Kandel (Cellular Basis of Behavior) that recounts some of the history of ideas regarding neuronal plasticity. It’s a pretty surreal experience reading this chapter now, many decades later, since the relevant paradigms have changed so much.

In Kandel’s telling, there are two main hypotheses for explaining why and how behavior changes—and hence, learning and memory—are possible. He calls them the “dynamic change hypothesis” and “plastic change hypothesis”.

The “dynamic change hypothesis” suggests that learning and memory are due to persistent activity in neural circuits: if you see a picture of a cat, and the associated photons impinge on your retina and travel through your visual system, ripples associated with this activity linger for a while. The fact that they linger causes the system to behave differently, and we can identify the differences in system behavior due to the ripples with learning and memory. Kandel attributes this idea to the physiologist Alexander Forbes (1922) and Lorente de Nó (1938). Nowadays, persistent activity is thought to play a role in short-term memory, but isn’t taken seriously as a hypothesis for universally explaining learning and memory. Kandel doesn’t really take it seriously for this purpose either.
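A toy sketch can make the "lingering ripples" idea concrete. The model below is an assumption made for illustration, not anything from Kandel's chapter: a single unit feeds its own activity back to itself through a fixed connection (the hypothetical `recurrent_weight`), and nothing about the connection ever changes.

```python
def simulate(stimulus, recurrent_weight=0.9):
    """Toy reverberating unit: activity feeds back on itself with a fixed weight.

    The weight never changes; only the activity does.
    """
    activity = 0.0
    trace = []
    for s in stimulus:
        # New activity = decayed echo of the old activity + the current input.
        activity = recurrent_weight * activity + s
        trace.append(activity)
    return trace


# One brief input, followed by silence.
trace = simulate([1.0, 0.0, 0.0, 0.0])
```

The activity stays above zero for several steps after the input is gone, so the system's state carries a short-lived record of the stimulus even though no connection was modified. The record also fades, which fits the view that this kind of mechanism suits short-term memory better than long-term storage.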

According to Kandel, (Cellular Basis of Behavior, Ch. 11, pg. 476)

The plastic change hypothesis states that learning involves a functional or plastic change in the properties of neurons or in their interconnections.

Note that he says “in the properties of neurons”; he’s referring not just to the connections between neurons (i.e., synaptic weights), but also to their intrinsic properties, like their degree of electrical excitability. This makes the “plastic change hypothesis” somewhat more inclusive than the SPM hypothesis, which only concerns connection strengths.
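The difference between the two kinds of change can be shown with a deliberately crude model. This is a sketch under strong assumptions: the neuron is a simple threshold unit, and "excitability" is reduced to a single hypothetical `threshold` parameter.

```python
def fires(inputs, weights, threshold):
    """Toy threshold neuron: fire if the weighted input sum reaches the threshold."""
    drive = sum(w * x for w, x in zip(weights, inputs))
    return drive >= threshold


inputs = [1.0, 1.0]
weights = [0.3, 0.4]  # synaptic weights, held fixed throughout

# Same inputs, same weights; only the cell's intrinsic excitability differs.
before = fires(inputs, weights, threshold=1.0)  # less excitable cell
after = fires(inputs, weights, threshold=0.5)   # more excitable cell
```

Here `before` is `False` and `after` is `True`: the neuron's response to identical input changed without any synaptic weight changing. A change like this falls under the plastic change hypothesis but sits outside the SPM hypothesis as stated.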

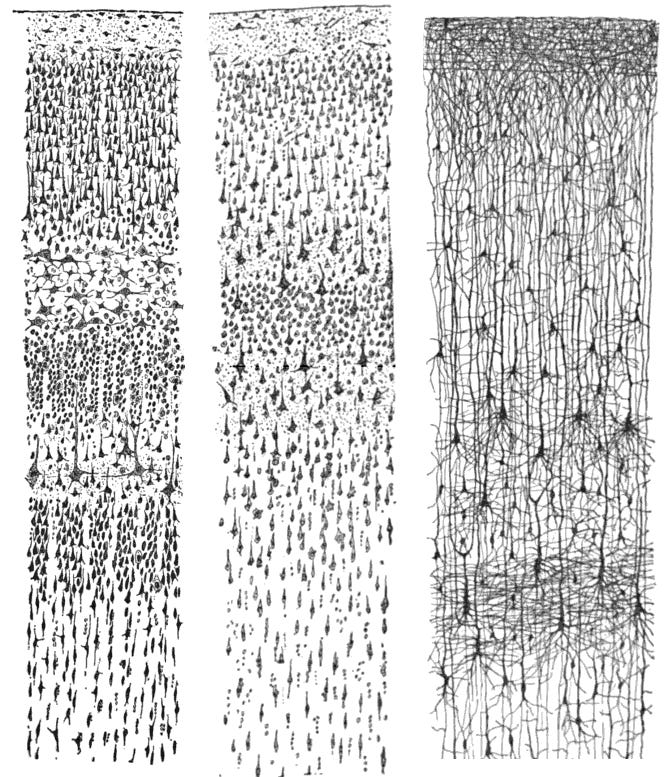

He primarily attributes the plastic change hypothesis to Santiago Ramón y Cajal, a luminary to whom many other ideas foundational to neuroscience are usually (and probably correctly) attributed. In the mid-1890s, Ramón y Cajal synthesized nascent ideas about the nervous system by other workers, like Lugaro and Tanzi. In a classic 1909 textbook (Histologie du système nerveux de l'homme & des vertébrés), he wrote:

The extension, the growth, and the multiplication of the appendages of the neuron do not stop at birth; they continue and nothing is more striking than the difference between the length and the number of the cellular ramifications, of the second and third order, in a newborn and an adult man.

The new cellular extensions do not grow at random; they have to orient themselves according to major neural currents or according to intracellular associations which are the object of repeated action of will. We think that formation of those new branches is followed by an increased blood flow which brings necessary nutrition. The mechanisms are probably chemo-tactile like the ones we observed during histogenesis of the spinal cord.

The ability of the neurons to grow in an adult and their power to create new associations can explain learning and the fact that man can change his ideological systems. Our hypothesis can even explain the conservation of very old memories such as memories from youth in an old man and in an amnesiac or in a mental patient, because the association pathways that have existed for a long time and have been exercised for many years are probably very powerful and were formed at the time when the plasticity of the neuron was at its maximum.

Three Ramón y Cajal drawings. The left two depict parts of the adult human cortex; the rightmost depicts part of the cortex of a 1.5 month old infant. Note the vast differences between infant and adult structure.

Later, people like Jerzy Konorski (1948) and Donald Hebb (1949) substantially expanded on the idea. Hebb’s ideas were particularly influential. In Hebb’s famous Organization of Behavior, he wrote:

Let us assume that the persistence or repetition of a reverberatory activity (or “trace”) tends to induce lasting cellular changes that add to its stability. The assumption can be precisely stated as follows: When an axon of cell A is near enough to excite cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells so that A’s efficiency as one of the cells firing B, is increased.

Hebb’s idea is now usually paraphrased as “neurons that fire together, wire together”.
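In models, Hebb's postulate is often reduced to a one-line update rule in which a weight grows in proportion to the product of presynaptic and postsynaptic activity. The sketch below is that common textbook simplification, not Hebb's own formulation; the function name and the `learning_rate` value are arbitrary choices made for illustration.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """One step of the simplest Hebbian rule: dw = learning_rate * pre * post."""
    return weight + learning_rate * pre * post


# Cell A (pre) and cell B (post) are active together for five steps.
weight = 0.5
for _ in range(5):
    weight = hebbian_update(weight, pre=1.0, post=1.0)
strengthened = weight

# If A is silent while B fires, this rule leaves the weight where it was.
unchanged = hebbian_update(0.5, pre=0.0, post=1.0)
```

Repeated co-activation pushes the weight up from 0.5 to about 1.0, while activity in B alone changes nothing. Note that this bare rule can only strengthen connections when activities are non-negative; practical variants add decay or normalization to keep weights bounded.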

But when did the plastic change hypothesis become the SPM hypothesis? When did we mostly forget about changes to intrinsic excitability, or changes in other properties of single neurons? (I say “mostly” because people know that intrinsic excitability can change, and you sometimes see studies that acknowledge its existence and say something about it, but the idea remains niche. It seems to be in the category of things that one could pay attention to, but that one doesn’t need to pay attention to in practice. Synaptic weights are thought to be way more important.)

Kandel doesn’t say, probably because he wrote his textbook (remember: 1976!) before the SPM hypothesis supplanted the more general plastic change hypothesis. But Martin, Grimwood, and Morris do comment on this in the introduction to their paper. They reference long-term potentiation (LTP), the phenomenon in which increases in synaptic weights can be maintained on a time scale of minutes to months, which was only discovered in mammals in the late 1960s and early 1970s. LTP was given its modern name in 1975, just one year before Kandel’s textbook was published.

Martin, Grimwood, and Morris wrote:

In short, thinking about LTP’s putative role in memory has moved on from a relatively simple hypothesis (Hebb 1949) to a set of more specific ideas about activity-dependent synaptic plasticity and the multiple types of memory that we now know to exist (Kandel & Schwartz 1982, Lynch & Baudry 1984, McNaughton & Morris 1987, Morris & Frey 1997). These distinct hypotheses do, however, share a common core, which we will call the synaptic plasticity and memory (SPM) hypothesis …

They acknowledge the existence of changes to neuron-intrinsic properties like excitability, but view synaptic weight changes as more important, and as most probably the locus of learning and memory storage. Apart from LTP and long-term depression (LTD), which refers to a persistent decrease in synaptic weights,

There are other forms of activity-dependent neuronal plasticity, such as excitatory postsynaptic potential (EPSP)-spike potentiation and changes in membrane properties (e.g. after-hyper-polarization); these are not, in general, input specific. We recognize, but also exclude from detailed discussion, experience-dependent alterations in neurogenesis or cell survival (Kempermann et al 1997, Gould et al 1999, van Praag et al 1999). These may reflect the nervous system creating the neural space for subsequent learning rather than the on-line encoding of the specific experiences that trigger this change.

Interestingly, they also reference the idea (“experience-dependent alterations in neurogenesis or cell survival”) that learning and memory could happen through introducing new neurons to circuits, or removing old neurons, but implicitly claim that such changes aren’t important compared to synaptic weight changes.

In a follow-up review paper from 2014, where Morris is again the last author, Morris essentially sticks to his guns: (Takeuchi, Duszkiewicz, and Morris, Phil. Trans. R. Soc. B, 2014)

Martin et al. laid out a framework for testing rigorously the widely held notion that synaptic potentiation and depression are key players in mediating the creation of memory traces or engrams. That framework has stood the test of time, with exciting new approaches using contemporary techniques exploring the idea further with respect to detectability, anterograde and retrograde alteration. … Critical experiments remain to be done, but the neuroscience community can justifiably feel tantalizingly close to having tested one of the great ideas of modern neuroscience. Forty years on, LTP continues to excite us all as it slowly gives up its mechanistic secrets and reveals its important functional role in learning and memory.

The rough history of the SPM hypothesis, then, goes something like this. Around 1900, there was still much we didn’t know about the nervous system (or biology, for that matter; remember that molecular biology really only got started in the 1950s), but even early workers like Ramón y Cajal recognized that connections between neurons could offer a useful substrate for modulating circuit behavior, and hence for learning and memory. This led to the “plastic change hypothesis”, which took a more modern form around 1950 with the work of Hebb et al. When LTP and LTD were discovered in the 1970s, and appeared to successfully account for a variety of longer-term forms of learning, the stock of synaptic weight changes rose, and the stock of other known forms of plasticity (e.g., plasticity in single-neuron properties, and in rates of neuron creation and death) fell. By 2000, when the SPM hypothesis paper was published, workers had good reason to believe LTP and LTD explained most learning and memory phenomena of interest. Other forms of synaptic plasticity were discovered after LTP and LTD, like homeostatic plasticity and spike-timing-dependent plasticity, but the SPM hypothesis is general enough to accommodate them. After ANNs started succeeding at pretty much every task we threw at them in the early 2010s, and people started to focus on ANN-based models of the brain, the SPM hypothesis became a dogma.

It’s worth pointing out that there are other ideas about potential biological substrates of memory that don’t fit neatly within this linear narrative. We’ll discuss one such idea a bit later.

V. THE NECESSITY OF SYNAPTIC WEIGHT CHANGES FOR MEMORY

The SPM hypothesis says that synaptic weight changes are necessary for learning and memory. Is that true? I think the answer is a strong no, for a few different types of reasons. (And it goes without saying that this will impact my final rating of the SPM hypothesis.)

First, it’s uncontroversial that there are other forms of information storage, even in humans. There’s immune memory, which involves the production of antibodies and changes in T and B cells, all of which help immune systems respond more quickly and effectively to previously encountered pathogens. The existence of this type of memory is a major reason why many of us are not constantly hobbled by sickness.

Apparently there’s some debate over whether immune memory really ‘counts’ as memory, or at least as memory anything like the cognitive forms of memory psychologists study. A paper by philosopher David Colaço (“On Consistently Assessing Alleged Mnemonic Systems (or, why isn’t Immune Memory “really” Memory?)”), says that there are two common arguments against ‘counting’ immune memory as a cognitive-like form of memory:

The first is that the immune system does not exhibit the errors exemplified in human memory, which I call the Error Argument. The second is that the immune system can be described and explained in causal terms alone, which I call the Mere Causal Argument.

The “Error Argument” is about the fact that there are characteristic ways humans misremember things, or can be manipulated to misremember things, for example by prompting. (If you’ve taken an intro psychology course, maybe you’ve heard of the Loftus and Palmer car crash stuff.) The “Mere Causal Argument” is apparently about the requirement that memory, like other cognitive things, ought to involve some latent variables, and not be totally input-dependent. Colaço elaborates:

Many things that might seem cognitive (or specifically mnemonic) can be described and explained in causal terms alone, this argument continues. For instance, Adams and Garrison claim that the behavior of bacteria might initially seem cognitive, but “on closer inspection there are complete explanations (none of which constitute cognitive processing)” (Adams and Garrison 2013, p. 341). They conclude that “there are non-representational explanations of why they do what they do,” such as chemical or physical explanations that are causal, while “the explanation of cognitive behavior includes the representational content of the internal states” (Adams and Garrison 2013, p. 346).

I can’t convey how much I hate this kind of argument and find it stupid. Either you believe everything is ultimately elementary particles obeying the laws of physics, as physicalism posits, or you don’t. If you don’t, fair enough, but I’m not sure how you’d falsify your position. If you do believe this, then thoughts, feelings, and so on are just useful ways of talking about a complex physical system. One way of talking involves molecules, while another way involves things like thoughts and feelings. Just because you can describe the molecular interactions relevant for some memory system, doesn’t mean it’s not a memory system, or not cognitive. Most neuroscientists would agree that an SPM-hypothesis-like account of memory can ultimately be described in terms of interactions between molecules, and moreover would deny that this means it doesn’t count as memory.

There are other known forms of non-synaptic memory, too, with epigenetics being a cool (but not totally well understood) form. One interesting example of it in action: people who were still in the womb during a famine experienced additional health problems later in life, including higher rates of obesity. And in some cases, so did their kids, and their kids’ kids. Their bodies remembered the famine, and passed on that memory to multiple generations of offspring. How? People think that epigenetic changes like DNA methylation are responsible, since these changes can be fairly stable, and can be heritable.

Next, experiments have shown examples of non-synaptic memory storage. A study from the lab of UCLA neurobiologist David Glanzman (“Reinstatement of long-term memory following erasure of its behavioral and synaptic expression in Aplysia”) showed that you can erase synaptic weight changes in sea slugs, and that this doesn’t totally erase the associated memory. The memory could be reinstated—that is, they found you can ‘jog’ the memory of the slugs—which seems to imply that memory was stored somewhere other than in synaptic weights.

A dramatic followup experiment by Glanzman’s group suggested that the memory was at least partly stored as RNA. Their experiment involved sensitizing one slug to touch, by shocking it, extracting RNA from that slug, and then injecting that RNA into a different slug. They found that the second slug became sensitive to touch, as if it had been shocked. In other words, it seems like the ‘memory’ of the shock got transferred! See their 2018 paper (“RNA from Trained Aplysia Can Induce an Epigenetic Engram for Long-Term Sensitization in Untrained Aplysia”), and a related 2019 review article (“Is plasticity of synapses the mechanism of long-term memory storage?”) for more discussion.

Finally, forget about synaptic weight changes, and forget about synapses altogether. Even single cells can learn and store memories! To me, this is the most persuasive argument against the necessity of synapses. Single-celled organisms, by definition, are just one cell, and hence have no nervous system or synapses to speak of. Yet it appears that they can learn and store (ethologically relevant) memories. The memories in question are certainly much simpler than what you might have in mind when you imagine human memory; a paramecium isn’t waxing nostalgic over its most recent birthday party. But one can argue that they are memories nonetheless.

What kinds of memories do single cells possess? The full gamut of possibilities is unclear, but a simple and well-studied kind of memory is habituation. The idea is that you take a single cell, and repeatedly subject it to some stimulus, like a physical poke or light flash. Often, cells repeatedly subjected to a stimulus like this will eventually either decide to escape, or become desensitized to the stimulus. If you poke a cell too much, but it comes to ‘think’ that your pokes don’t matter, it starts ignoring them. “You’re just messing with me,” it might say if it could talk.

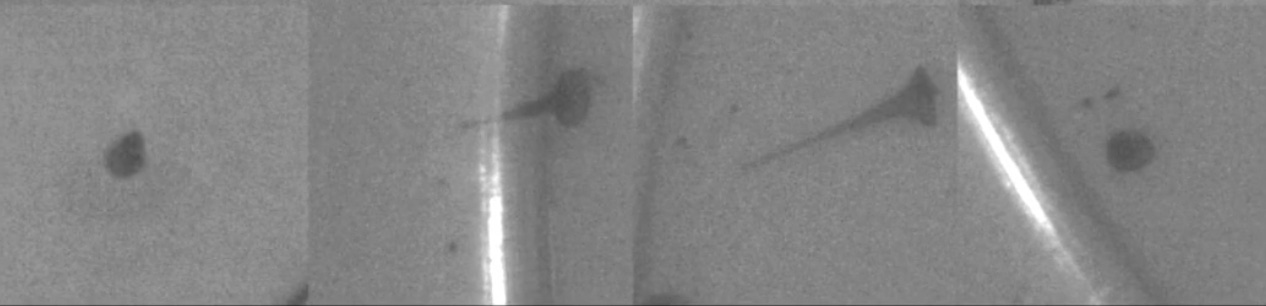

The habituation behavior of the ciliate Stentor coeruleus is a canonical example. It’s shaped like a tiny trumpet, or rice grain. When it gets physically poked, it contracts. When you poke it enough, it stops responding, and maintains its long tube shape despite your pokes.

Habituation is admittedly extremely simple, but even a behavior as simple as this remains mysterious in some ways. Consider this: if you wait a while after Stentor stops responding, it will ‘recover’. That is, it will respond if you start poking it again. Keep poking it for long enough a second time, and it will stop responding a second time. Here’s the interesting part: in the second ‘round’, it will stop responding to your pokes faster than it did the first time. This implies that it ‘remembers’ that it got poked a bunch sometime in the recent past. In other words, there is the shorter-term memory of the recent pokes (‘the current pokes are not dangerous, so I should stop responding’) and a longer-term memory of less-recent pokes (‘the current pokes are like the previous pokes, which were not dangerous, so I should learn faster this time’). How does this work? There are ideas out there, but the truth is that no one really knows! Obviously, the mechanism for this type of multiple-time-scale memory can’t rely on synapses.
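To make the two-time-scale idea concrete, here is a toy sketch in Python. It is emphatically not a model of how Stentor actually works (as noted above, no one really knows). The two traces, their names, and every number in it are assumptions made up for illustration: a "fast" trace that builds with each poke and fades quickly at rest, and a "slow" trace that fades very slowly and speeds up how quickly the fast trace builds.

```python
def poke_until_ignored(state, threshold=1.0, base_rate=0.1, slow_rate=0.05, max_pokes=1000):
    """Poke repeatedly; return how many pokes it takes before the cell stops responding."""
    for n in range(1, max_pokes + 1):
        # Each poke builds the fast trace; a larger slow trace makes it build faster.
        state["fast"] += base_rate * (1.0 + state["slow"])
        # Each poke also leaves a small, long-lasting mark on the slow trace.
        state["slow"] += slow_rate
        if state["fast"] >= threshold:
            return n  # habituated: pokes are now ignored
    return max_pokes


def rest(state, steps, fast_decay=0.9, slow_decay=0.999):
    """No pokes: the fast trace fades quickly, the slow trace barely at all."""
    for _ in range(steps):
        state["fast"] *= fast_decay
        state["slow"] *= slow_decay


# Hypothetical cell with both traces starting at zero.
cell = {"fast": 0.0, "slow": 0.0}
first_round = poke_until_ignored(cell)
rest(cell, steps=100)  # long enough for the fast trace to recover
second_round = poke_until_ignored(cell)
print("pokes needed in round one:", first_round)
print("pokes needed in round two:", second_round)
```

With these made-up parameters, the fast trace is essentially gone after the rest (so the cell responds again), but the leftover slow trace means the second round takes fewer pokes. The sketch only shows that two decaying variables are enough to produce the behavior; it says nothing about what those variables would be inside a real cell.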

Gershman, Balbi, Gallistel, and Gunawardena discuss some of the history of single-cell learning and related controversies (for example, does this really ‘count’ as learning and memory?) in a recent and readable review paper titled “Reconsidering the evidence for learning in single cells”. Although times are changing, in the mid-twentieth century there was serious resistance to the idea that single cells could learn and store memories. Beatrice Gelber was a pioneering scientist who studied learning in protozoa like paramecia. As Gershman et al. recount, she encountered substantial resistance to the idea that paramecia could exhibit sophisticated learning, even from other psychologists working on paramecia. Donald Jensen, one such psychologist, wrote:

Gelber freely applies to Protozoa concepts (reinforcement and approach response) and situations (food presentation) developed with higher metazoan animals. I feel that such application overestimates the sensory and motor capabilities of this organism… If analogies are necessary, a more apt one might be that of an earthworm which crawls and eats its way through the earth, blundering onto food-rich soil and avoiding light, heat, and dryness. Gelber’s assertion loses its force when the blind, filter-feeding mode of life of Paramecia is considered.

As Gershman et al. note, this is probably a bad analogy, since worms have been found to exhibit fairly sophisticated learning and memory! This was clear even in 1957, when Jensen wrote.

If you buy that single cells can learn and store memories, you should probably also believe that the associated mechanisms, which must be based on molecules internal to the cell rather than synaptic weights, are probably widely conserved across evolution. After all, evolution is a tinkerer, and something that previously worked is extremely likely to be used again. This is especially true for things that are really important, like developmental mechanisms—so why not for memory, something similarly basic and important?

Relatedly, it seems unlikely that no organism could learn or remember anything before synapses existed. There had to be some other, simpler mechanism that worked before large-scale, synapse-based neural networks were common. But if this is true, why wouldn’t it continue to be used in one form or another after modifications to synaptic weights began to play a role in learning and memory?

(On the other hand, it’s certainly true that one mechanism can strongly supplant another. Consider that lesions to the motor cortex, a relatively new brain structure, are devastating to humans, but not necessarily that serious for other mammals. The motor cortex is just one brain area involved in motor control, but is particularly dominant in humans. How to think about this isn’t completely clear.)

Before I end this section, I want to go back to one of the arguments Colaço discussed. It seems like a lot of our argument hinges on what we mean by learning and memory. If we accept that the simple changes in behavior exhibited by single cells or sea slugs reflect some form of ‘learning’ or ‘memory’, then it really does seem like one has to take a broader view, and (for example) engage with non-synaptic mechanisms. Some people might just not think these behavioral changes are worth associating with the rich phenomenology of human memory.

How should we define memory, then? I think it’s worth taking a permissive view, since otherwise—essentially by definition—we’re excluding non-human animals, or non-human complex systems more generally, from being able to learn and store memories. Biology is complicated, and it’s plausible to me that something as basic as memory is a matter of degree rather than kind. Maybe single cells don’t exhibit all of the rich phenomenology associated with human memories, but there are still important enough similarities that we should use some of the same words.

A minimal definition of learning and memory is something like “the capacity to store and retrieve information” on some time scale. This is a bit impoverished, but I think it does the job.

Did we go too far? If you kick a rock, so that a piece of it breaks off, does this mean that the rock now ‘stores’ information about you kicking it, and hence ‘memorizes’ something about your kick? I’d bite the bullet and say yes. But a rock can’t use the absence of that piece to inform adaptive behavior in the same way that a living organism can, or equivalently its retrieval capabilities are extremely limited. It also has more limited storage capabilities: your friend will remember a whole temporal sequence of you kicking them (and will probably not be too happy), whereas the rock can probably only ‘store’ the final result. In other words, maybe there’s a sense in which non-living physical systems like rocks have memory, but it isn’t very good.

VI. THE SUFFICIENCY OF SYNAPTIC WEIGHT CHANGES FOR MEMORY

The SPM hypothesis says that synaptic weight changes are sufficient for learning and memory. Is that true? I think the answer is again a strong no.

We’ve already encountered Glanzman et al.’s experiments, which suggest (at least in sea slugs) that synaptic weights are not the only format for information storage. These experiments directly falsify one of Martin, Grimwood, and Morris’ proposed tests: you can destroy a bunch of synaptic weights, but not destroy the associated memory.

There’s a more dramatic example of large-scale synaptic weight destruction in nature whose phenomenology parallels that finding. In the process of becoming a butterfly, a caterpillar’s entire body—including its brain—undergoes major structural changes. These changes involve a vast array of synapses being pruned, remodeled, and generally reorganized. And despite all of this, it seems like it’s at least possible that a butterfly can retain memories of its life as a caterpillar. More generally, this seems to be true of holometabolous insects, which have distinct larval and adult stages.

Blackiston, Shomrat, and Levin discuss more examples like this in a 2015 article titled “The stability of memories during brain remodeling: A perspective”:

Holometabolous insects reorganize their brains during pupation in the transition from larva to adult, with many neurons of the central nervous system pruning to the cell body before the generation of adult specific structures. Planarian species are capable of regenerating their entire brain from a tail fragment in the event of fission or amputation, with new tissue arising from a neoblast stem cell population. Arctic ground squirrels demonstrate a drastic reduction in brain volume during hibernation at near freezing temperatures, which is corrected within hours of arousal. In all 3 of these animal groups, learned behaviors have been observed to survive the striking reorganization of the brain.

Sam Gershman, in a magisterial 2023 review (“The molecular memory code and synaptic plasticity: A synthesis”), elaborates on regeneration and planaria learning:

… if repeatedly shocked after presentation of a light, a planarian will learn to avoid the light (Thompson and McConnell, 1955). Now suppose you cut off a planarian’s head after it has learned to avoid light. Within a week, the head will have regrown. The critical question is: will the new head remember to avoid light? Remarkably, a number of experiments, using light-shock conditioning and other learning tasks, suggested (albeit controversially) that the answer is yes (McConnell et al., 1959; Corning and John, 1961; Shomrat and Levin, 2013). What kind of memory storage mechanism can withstand utter destruction of brain tissue?

It’s worth pointing out that some of these examples are controversial, or at least not nearly as well-studied as more mainstream memory-related phenomena. But if any of these examples is at least kind of true, the SPM hypothesis doesn’t come out looking great. These examples also speak against the possibility that non-synaptic memory mechanisms are just ‘edge cases’, since the behaviors involved (metamorphosis, regeneration, and hibernation) are pretty varied, and also fairly important for the animals that perform them.

And there are other reasons to think synapses are insufficient as a learning and memory storage mechanism. Synaptic weights naturally ‘decay’ and ‘turn over’ on a time scale of hours to weeks. In that sense, they are not completely stable places to store information. In the aforementioned 2023 review, Gershman points this out as a serious problem with a synaptic-weight-based account of learning and memory:

Excitatory synapses are typically contained in small dendritic protrusions, called spines, which grow after induction of LTP (Engert and Bonhoeffer, 1999). Spine sizes are in constant flux (see Mongillo et al., 2017, for a review). Over the course of 3 weeks, most dendritic spines in auditory cortex will grow or shrink by a factor of 2 or more (Loewenstein et al., 2011). In barrel cortex, spine size changes are smaller but still substantial (Zuo et al., 2005). Spines are also constantly being eliminated and replaced, to the extent that most spines in auditory cortex are replaced entirely over the same period of time (Loewenstein et al., 2015). In the hippocampus, the lifetime of spines is even shorter—approximately 1–2 weeks (Attardo et al., 2015). Importantly, much of the variance in dendritic spine structure is independent of plasticity pathways and ongoing neural activity (Minerbi et al., 2009; Dvorkin and Ziv, 2016; Quinn et al., 2019; Yasumatsu et al., 2008), indicating that these fluctuations are likely not generated by the covert operation of classical plasticity mechanisms. Collectively, these observations paint a picture of profound synaptic instability.

How can we remember things for years with synapses that turn over on a time scale of weeks or less? Maybe we can imagine neural circuits playing an elaborate game of hot potato, and constantly moving information around to prevent synaptic turnover from destroying it. But this explanation seems problematic given the orders of magnitude that separate hours and weeks from decades.

At this point, I think it’s helpful to recall a point we made earlier: persistent activity, reverberations of neural activity due to some previously presented stimulus, is probably a reasonable mechanism for short-term memory, but not long-term memory. Maybe there are tricks one can play to get it to hold onto information for a little longer, but these will only go so far—it faces a fundamental limitation. But this isn’t the end of the world, because it can couple to a longer-term memory mechanism so that one gets the best of both worlds. (Persistent activity can’t hold onto information as long as a synaptic-weight-based mechanism, but its faster dynamics make it more responsive to stimuli, which is good for inputting a quickly-changing signal.) The idea that persistent activity and longer-term storage mechanisms interact is popular, and is sometimes called the “dual-trace” theory.

Maybe it’s turtles all the way down? If synaptic weights turn over on a time scale of weeks, maybe there’s something slower that turns over on a time scale of months. And maybe there’s something else that’s slower than that. Why just one ‘fast’ and one ‘slow’ mechanism? Why not ‘fast’, and ‘slow’, and ‘even slower’, and ‘even slower than that’, and so on?
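A quick back-of-the-envelope calculation shows why the gap between weeks and decades matters so much, and why a stack of slower and slower mechanisms is appealing. The sketch below assumes each storage mechanism loses information by simple exponential decay, which is a simplification, and the half-lives are hypothetical values picked only to span the time scales discussed above, not measurements.

```python
def fraction_remaining(elapsed_days, half_life_days):
    """Fraction of a trace left after elapsed_days, assuming simple exponential decay."""
    return 0.5 ** (elapsed_days / half_life_days)


# Hypothetical half-lives, chosen only to span hours, weeks, months, and years.
HALF_LIVES_DAYS = {
    "fast (hours)": 0.25,
    "slow (weeks)": 14.0,
    "slower (months)": 90.0,
    "slowest (years)": 3650.0,
}

DECADE_DAYS = 3650.0
for name, half_life in HALF_LIVES_DAYS.items():
    left = fraction_remaining(DECADE_DAYS, half_life)
    print(f"{name}: {left:.3g} of the trace left after a decade")
```

Under these assumptions, anything with a half-life of weeks is, for all practical purposes, completely gone after a decade, while a trace whose half-life is itself on the order of a decade still retains half of its content. Each slower layer buys a longer horizon, which is the intuition behind asking for 'even slower' mechanisms.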

This line of thinking leads us to an alternative to the SPM hypothesis, which I discuss next.

VII. THE CELLULAR PROCESSES AND MEMORY HYPOTHESIS

Earlier, I referenced experiments from the Glanzman lab that appeared to show that injecting an untrained sea slug with RNA from a trained slug ‘transferred’ a training-associated memory. It turns out this kind of experiment has a long history.

In parallel with ideas due to Hebb and others that learning and memory might be associated with the connections between neurons, a different idea floated around: maybe memory was stored via molecules like proteins or RNA. In the 1950s and 1960s, when molecular biology was just getting started and people began to get excited about the possibilities associated with molecules like DNA, this was a natural idea. If a lot of biology could ultimately be described in molecular terms, why not learning and memory too? Were there memory molecules? If so, what were they like, and how did they work? See a (pessimistic) 1976 article by Gaito (“Molecular psychobiology of memory: Its appearance, contributions, and decline”), one of the early workers in this area, for a sense of how people thought.

James McConnell was a psychologist interested in learning and memory, among other things. Inspired by the idea that learning and memory might ultimately be stored in molecules, in 1962 he performed a simple experiment with worms: if you train a worm to be afraid of a light stimulus (by associating it with a shock), and then grind it up and feed it to another worm, does the second worm acquire the fear? Said differently, do you acquire the fears of the animals you eat? In an article hilariously titled “Memory transfer through cannibalism in planarians”, McConnell showed that the answer seemed to be yes!

An article in the Monitor notes that

The cannibalism studies, both startling and vivid in their imagery, and McConnell, never one to shy away from the media, caught the public eye. At a time when scientists remained sequestered in their labs, McConnell appeared with his cannibalistic worms on television (i.e., “The Way Out Men,” “Mr. Wizard” and “The Steve Allen Show”), while articles profiling his work appeared in Time, Newsweek, Life, Esquire and Fortune. Eminently quotable, McConnell referred to his work as confirming the Mau Mau hypothesis, and the “McCannibal” moniker didn’t bother him one bit. He made grand pronouncements about the future of “memory pills” and “memory injections,” promising more than he and others working in the area could actually deliver.

Maybe unsurprisingly, the subsequent history of this line of work is muddled. (Otherwise, we probably would’ve heard about “memory pills” and “memory injections”.) It didn’t help that McConnell first published these results in a journal he ran called Worm Runner’s Digest, which mixed science with satire. Also, people had a hard time reproducing McConnell’s result, especially in animals more sophisticated than worms. On this point, Gershman writes that

The first studies of memory transfer between rodents were reported by four separate laboratories in 1965 (Babich et al., 1965b,a; Fjerdingstad et al., 1965; Reinis, 1965; Ungar and Oceguera-Navarro, 1965). By the mid-1970s, hundreds of rodent memory transfer studies had been carried out. According to one tally (Dyal, 1971), approximately half of the studies found a positive transfer effect. This unreliability, combined with uncertainty about the underlying molecular substrate and issues with inadequate experimental controls, were enough to discredit the whole line of research in the eyes of the neuroscience community (Setlow, 1997).

For this and a variety of other reasons—including the discovery of LTP, which boosted the idea that synaptic weights were causally related to learning and memory—by the late 1970s, researchers became generally pessimistic about the prospects of the “biochemical” memory hypothesis.

But people never totally gave it up.

Over the years, various researchers have made apparently unrelated suggestions that this or that molecule might provide a potential memory storage mechanism. Francis Crick, of Watson and Crick fame, suggested that post-translational modifications of proteins (i.e., you take a protein and you glue something to it) are an appealing potential memory storage format. Robin Holliday suggested that epigenetic mechanisms like DNA methylation might play a role in long-term memory. Various people suggested that specific molecules like CaMKII or CREB transcription factors could store memory, or at the very least were causally involved in memory formation in an important way.

Over the years, there were a number of reviews and perspectives on these somewhat heterodox approaches to learning and memory. And over the past ten years, they’ve grown increasingly common. Consider these titles:

“Time to rethink the neural mechanisms of learning and memory”

Gallistel and Balsam, Neurobiology of Learning and Memory (2014)

“The Demise of the Synapse As the Locus of Memory: A Looming Paradigm Shift?”

Trettenbrein, Front. Syst. Neurosci. (2016)

“Is plasticity of synapses the mechanism of long-term memory storage?”

Abraham, Jones, and Glanzman, npj Science of Learning (2019)

“Locating the engram: Should we look for plastic synapses or information-storing molecules?”

Langille and Gallistel, Neurobiology of Learning and Memory (2020)

“Reconsidering the evidence for learning in single cells”

Gershman, Balbi, Gallistel, and Gunawardena, eLife (2021)

“The central importance of nuclear mechanisms in the storage of memory”

Gold and Glanzman, Biochemical and Biophysical Research Communications (2021)

“Memory: Synaptic or Cellular, That Is the Question”

Arshavsky, The Neuroscientist (2023)

All right, all right, we get it! Synapses aren’t enough!

But despite the pleading of these titles, in 2025 this still very much remains a minority view, or at the very least something most neuroscientists don’t think about regularly. (Maybe if you asked them to really think about it, they’d agree that there’s potentially something interesting going on.) It’s also apparently hard to get mainstream funding to study possible non-synaptic, molecular mechanisms for memory. I heard secondhand that Glanzman has had trouble getting funding since he published those studies. I’m not sure whether this anecdote is true, but if it is true, it’s surprising. You can give someone a memory by having them eat someone else with that memory? Seriously? That is objectively insanely cool, and I don’t know why more people aren’t excited about it.

Where does all of this leave us? Maybe at this point you’re on board, and think that synaptic weight changes are neither necessary nor sufficient for learning and memory. So what’s the alternative? Is it just the SPM hypothesis plus plus, where we take synaptic mechanisms and tack on a bunch of additional mechanisms? Kind of, but we can do a little better than that.

As an alternative to the SPM, I want to put forward the cellular processes and memory hypothesis (CPM). In the spirit of Martin, Grimwood, and Morris’ 2000 review article, I’ll define the CPM hypothesis as the claim that:

The formation, consolidation, and retrieval of learning and memory in biological systems often involves stimulus-dependent, non-synaptic molecular and intracellular processes. These processes do not just serve synaptic-weight-based mechanisms, but provide complementary mechanisms. They are necessary for making and keeping long-term memories, but not sufficient, and interact with synaptic-weight-based mechanisms in nontrivial ways.

(Just to be clear, this idea definitely isn’t original to me. I’m just the only one calling it this.)

What are these molecular and intracellular processes I allude to? Is learning and memory stored in RNA, as McConnell thought and Glanzman et al.’s experiments suggest? Is it stored in post-translational modifications to proteins, like Crick thought? Is it stored via epigenetic mechanisms, or in the stable states of gene regulatory networks, or in the action of transcription factors?

The truth is that no one really knows. But I think there’s evidence for all of these, and in general a good rule of thumb is to believe that biology embraces a multiplicity of solutions when more than one solution exists. I’d bet that all of these things contribute, and work together with synaptic-weight-based mechanisms to store long-term memories.

Two final comments here, because now we’re getting (even more) into wild speculation and topics of ongoing research. First, most neurobiologists would agree that molecular mechanisms are involved in learning and memory; this claim is definitely not controversial. What’s controversial is whether these processes store information independently of, or in a fashion complementary to, synaptic-weight-based mechanisms. If they didn’t, and merely supported synaptic storage, then molecular mechanisms would certainly be there and play a role, but might not be that interesting from a higher-level computational or systems perspective. They’d be details that we can just abstract away.

I don’t think this is the case, since otherwise the memory transfer experiments and single-cell learning experiments (among others) would be hard to explain. There isn’t any good reason to believe that RNA molecules track synaptic weight changes, and moreover with a fidelity high enough to encode them well, and moreover that other cells (in other animals!) somehow ‘know’ to decode some aspect of those RNA in order to decode something about synaptic weight changes. And if you do believe this, this is basically just saying that RNA molecules encode memory (albeit, memory possibly originally stored in synaptic weight changes), but using more words. The memory transfer experiments, which suggest that molecules like RNA are sufficient for transferring a memory, are consistent with the CPM hypothesis but inconsistent with the SPM hypothesis.

Lastly, you might wonder: if there are perfectly good synaptic-weight-based mechanisms for learning and memory, why would you need these other mechanisms? I think the answer to this puzzle relates to the relatively short time scale on which synapses turn over, an observation I mentioned earlier. You’d really like a mechanism that’s slower, since slower processes tend to hold onto information for longer. Intracellular processes like signaling cascades, gene regulatory dynamics, epigenetic dynamics, and so on all operate on time scales much slower than that of synaptic weight changes. It’s possible that these intracellular mechanisms are better for storing long-term, but probably not short-term, memories.

VIII. THE TERRA INCOGNITA OF CELLULAR MEMORY

A few years ago, when I first heard about some of these ideas, I was heavily inspired by a review article I mentioned above (“The molecular memory code and synaptic plasticity: A synthesis”) by Sam Gershman, a neuroscientist and psychologist at Harvard. It makes many of the same points I’ve made here, and in more detail. I really suggest you read it if you find any of this interesting. Actually, it would be more accurate to say that I learned these points from that article, and spent part of this review just regurgitating them.

The end of that review is so beautifully written that I can’t resist quoting it in full here:

Why can so little be firmly asserted, despite decades of intensive research? One reason is that the associative-synaptic conceptualization of memory is so deeply embedded in the thinking of neurobiologists that it is routinely viewed as an axiom rather than as a hypothesis.

Consequently, the most decisive experiments have yet to be carried out. Looking at the history of research on nuclear mechanisms of memory is instructive because it has long been clear to neurobiologists that DNA methylation and histone modification play an important role. However, the prevailing view has been that these mechanisms support long-term storage at synapses, mainly by controlling gene transcription necessary for the production of plasticity-related proteins (Zovkic et al., 2013). The notion that these nuclear mechanisms might themselves be storage sites effectively became invisible, despite many early suggestions, because such a notion was incompatible with the associative-synaptic conceptualization. It was not the case that a non-associative hypothesis was considered and then rejected; it was never considered at all, presumably because no one could imagine what that would look like. This story testifies to the power of theory, even when implicit, to determine how we interpret experimental data and ultimately what experiments we do (Gershman, 2021).

We need new beginnings. Are we prepared to take a step off the terra firma of synaptic plasticity and venture into the terra incognita of a molecular memory code?

It isn’t an exaggeration to say that the SPM hypothesis is a central, foundational element of how most neuroscientists think, whether or not they’ve heard the term “SPM hypothesis”. It’s been extremely productive, and will no doubt continue to be productive for those who cling to it, but a new world awaits those courageous enough to let go. We ought to embrace a broader conception of how learning and memory might be possible.

Consider the heart transplant stories we started with. These stories are all extremely weird. Can it really be true that one person can remember someone else’s first-person experience of death, or acquire someone else’s sexuality, or know the name of a person they’ve never met or heard about? According to the CPM hypothesis, these phenomena are at least biologically plausible, and hence worth taking seriously. If memories aren’t just stored in the connections between cells, but in the cells themselves (as molecules, or perhaps as dynamic processes involving molecules), then there’s no reason to think that memories are particular to the brain. Maybe your memory of your fifth birthday party is in your arm, or leg, or heart. And if it’s potentially in these weird other places, and if the format in which information is stored is similar from person to person, then why couldn’t transplanting an organ transplant the memories stored in it?

If the CPM hypothesis is even partially true, the implications are stunning. Can you forget something about your fifth birthday party if someone chops your arm off? Does eating the liver of an animal give you some of its memories? (Does it depend on the animal, or the body part?) Is cannibalism actually good, at least in the sense that you can acquire nontrivial capabilities of the person you eat? Could we treat a memory-related disease by intervening on someone’s heart? Could we manipulate memories, or inject new ones, by intervening on a non-brain part of the body? To all of these: maybe. It’s a brand new world! Terra incognita!

The SPM hypothesis is useful and well-supported, but I hope I’ve convinced you that it’s wrong.

IX. THE VERDICT

Three out of five stars: good for its time, but doesn’t hold up to modern scrutiny. Like a human leg, a bit tough to swallow.