I.

My ex-girlfriend has a weird relationship to reality. Her actions ripple out into the world more heavily than other people's. She finds herself at the center of events more often than makes sense. One time someone asked her to explain the whole “AI risk” thing to a State Senator. She hadn’t realized states had senators, but it sounded important, so she gave it a try, figuring out her exact pitch on the car ride to his office.

A few months later, she was informed that the Senator had really taken her words to heart, and he'd been thinking hard about how he could help. This is part of the story behind SB 1047 - specifically, the only part I have any personal connection to. The rest of this post comes from anonymous sources in the pro-1047 community who wanted to tell their side of the story.

(In case you’re just joining us: SB 1047 is a California bill, recently passed by the legislature but vetoed by the governor, that would have forced AI companies to take some steps to reduce the risk of AI-caused existential catastrophes. See here for more on the content of the bill and the arguments for and against; this post will limit itself to the political fight.)

State Senator Scott Wiener, unusually for California politicians, is a thoughtful person with a personality and a willingness to think outside the box. When there’s a really interesting bill that makes people wake up and pay attention, it’s usually one of his. Some of his efforts I can get behind - he’s a big YIMBY champion, and gets credit for the law letting homeowners build ADUs. Others seem crazy to me - like a law which lowered penalties for knowingly exposing someone to HIV (you can read his argument for why he thought this was a good idea here)[1]. Still, love him or hate him, he feels like a live player in ways that other Californian politicians don't.

The New York Times says he got the idea for the bill after:

…[going to] “A.I. salons” held in San Francisco. Last year, Mr. Wiener attended a series of those salons, where young researchers, entrepreneurs, activists and amateur philosophers discussed the future of artificial intelligence.

...so I guess it wasn’t entirely due to my ex. But the bill owes much of its specifics and support to its three co-sponsors - Center for AI Safety, Encode Justice, and Economic Security Project Action.

Center for AI Safety is the one I already knew about. An AI researcher named Dan Hendrycks got really into AI safety and apparently works 70-hour days; I try to follow this space closely and am boggled by the number of projects he has going at any given time - several lobbying efforts, a bunch of research projects, various showcases of model capabilities, and being Elon Musk's safety advisor at X.AI. Hendrycks has gotten a reputation for being incorruptible (he gave away ~$20 million in AI company equity[2] after trolls tried to turn it into a "conflict of interest" and use it to discredit his lobbying) and intense (I don't think it's a coincidence that he gets along with Elon so well). At some point he founded CAIS to keep track of all his efforts; I'm not surprised to see them involved here too, and I imagine they provided some much-needed technical expertise.

Encode Justice needs to be snapped up by some documentary-maker as an inspiring human interest story. Some teenagers decided the world needed to hear the voice of the youth on AI and founded an "intergenerational call for global AI action". Their leader is already being called "the Greta Thunberg of AI". Over four years, they’ve scaled up to “a mass movement of a thousand young people across every inhabited continent". I cannot even imagine how good all of this is going to look on their college applications.

Economic Security Project Action isn’t part of our conspiracy. They’re a generic progressive nonprofit that wants the benefits of AI to be shared broadly, and came up with the idea of CalCompute - a “state compute cluster” that academics and other sympathetic actors can use to train socially valuable AI models without needing to beg VCs for big bucks. Apparently someone made some kind of deal and their wish list was combined with ours into a single bill.

II.

Along the way SB 1047 picked up an impressive number of endorsements.

Elon Musk was the one I was most excited about. The bill's Silicon Valley opponents tried to frame it as nanny-state Democrats trying to crush progress. But Elon Musk has impeccable credentials for being pro-progress and anti-nanny-state-Democrats, so he was the perfect person to puncture this objection. He's always been interested in AI safety, but I imagine Hendrycks gets a lot of the credit for him weighing in on this particular issue.

Another major endorsement came from SAG-AFTRA (formerly Screen Actors Guild), a politically influential union of Hollywood creatives. The union’s letter to the governor makes it sound like they're against AI copying their voices and stealing their jobs, and willing to support basically any anti-AI legislation no matter how distantly related to their specific concern. But a later open letter showed more specific interest in existential risks, and a few people in show business have been consistent allies. Joseph Gordon-Levitt is a long-time effective altruist (and married to Tasha McCauley, one of the OpenAI board members who voted out Sam Altman last November). And I was also moved by support from Adam McKay, who directed Don’t Look Up (a film about people ignoring an impending asteroid strike, which AI safety advocates praised as a good intentional or unintentional metaphor for the current landscape).

The big AI companies split among themselves. OpenAI, Meta, and Google opposed the bill, X.AI supported, and Anthropic dithered on an earlier version but ultimately came out in support after their feedback was taken into account. Many opponents claimed that the bill was a Trojan Horse attempt at regulatory capture by the big AI companies, so it was fun watching three of the biggest AI companies come out against it and prove them exactly wrong. I don’t think any opponents ever changed their minds, admitted they’d made a mistake, or even stopped arguing that it was a big AI company plot - but hopefully enough people were paying attention that it discredited them a little for the next fight.

The list of opponents included some tech investors, AI trade groups, and Nancy Pelosi.

Nancy’s involvement was the biggest surprise. The boring explanation is that she represents San Francisco and has a lot of tech investor friends and donors, but you can get more conspiratorial. For example, Pelosi (current net worth $240 million) is known to get impossibly good investing returns, so much so that there are ETFs that try to replicate her stock picks; everyone assumes she must be doing some kind of scummy-but-legal insider trading. Her portfolio is currently weighted towards AI - did that influence her decision? Even more conspiratorial, insiders say Pelosi is waging a “shadow campaign” against Scott Wiener, the only San Francisco politician popular enough to challenge her daughter and hand-picked successor Christine Pelosi for SF’s House seat after she retires. Is she trying to deny Wiener a victory?

The other opponent everyone talks about is Marc Andreessen and his Andreessen Horowitz (“A16Z”) venture capital firm. Their campaign was especially public-facing, confrontational, and dishonest, and got most of the Twitter buzz. But in the end they were only one of several major players, and maybe not the one with the most political clout.

III.

The most important supporter was, of course, The Average Californian.

Opponents tried to confuse people by releasing a biased poll - basically “do you support this HORRIBLE BILL which will DEVASTATE INNOVATION by creating a GIANT BUREAUCRACY?” (exact wording here, story on the scandal here).

Supporters responded with an adversarial collaboration between both sides to come up with a fair wording; with this version, Californians were 62-25 in favor, with strong support among Democrats, Republicans, youth, elders, tech workers, minorities, etc.

IV.

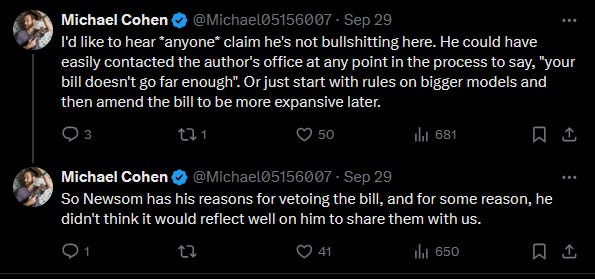

Based on considerations like these, the bill made it through California’s Assembly and Senate relatively smoothly, passing the State Assembly 49-15 and the State Senate 29-9. It then went to Governor Gavin Newsom for signature/veto. He sat on it until almost the last possible moment, then vetoed it September 29.

His letter explaining his veto is - sorry to impugn a state official this way, but everyone who read it agrees - bullsh*t. He says he loves regulating AI and is very concerned about safety, but rejects the bill because it doesn’t go far enough. In particular:

By focusing only on the most expensive and large-scale models, SB 1047 establishes a regulatory framework that could give the public a false sense of security about controlling this fast-moving technology. Smaller, specialized models may emerge as equally or even more dangerous than the models targeted by SB 1047 - at the potential expense of curtailing the very innovation that fuels advancement in favor of the public good.

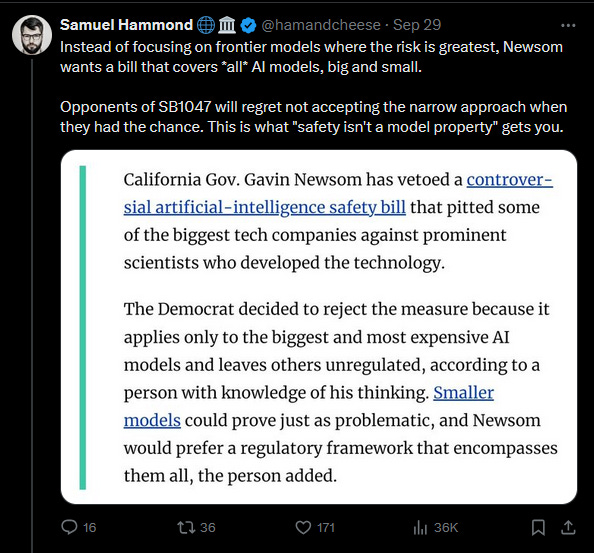

I’m not sure there’s a single person in the world who actually holds this opinion. Opponents of the bill worried that it could potentially crush innovation by regulating smaller models that weren’t dangerous, and demanded guarantees that this would only apply to the big companies that were able to pay the regulatory costs. Supporters accepted this was a risk and offered those guarantees gladly. The constituency for rejecting SB 1047 because it didn’t go far enough in regulating small models is zero.

Newsom obviously has no plan to come up with a stricter bill that also regulates the small models, so on the basis of “this doesn’t regulate enough” he is ensuring that nothing gets regulated at all.

One of my sources generously interprets Newsom to mean something like “don’t regulate the models, regulate the end applications”. I.e., if OpenAI trains GPT-5, and then LegalCo fine-tunes it to do paralegal work, leave most of the safety responsibility on LegalCo, not OpenAI. This fails to engage with the motivations behind the bill, which are things like “what if someone uses AI for bioterrorism”? If Meta trains LLaMa-4, and al-Qaeda fine-tunes it for terrorism, instead of regulating it at the Meta level, we should regulate al-Qaeda? Are we sure al-Qaeda will comply with California regulations? Our side is not sure that even this generous interpretation has been thought through very well.

But I prefer an ungenerous interpretation: Gavin Newsom is bad.

On some level, I don’t mind having a bad governor. I actually have a perverse sort of fondness for Newsom. He reminds me of the Simpsons’ Mayor Quimby, a sort of old-school politician’s politician from the good old days when people were too busy pandering to special interests to talk about Jewish space lasers. California is a state full of very sincere but frequently insane people. We’re constantly coming up with clever ideas like “let’s make free organic BIPOC-owned cannabis cafes for undocumented immigrants a human right” or whatever. California’s representatives are very earnest and will happily go to bat for these kinds of ideas. Then whoever would be on the losing end hands Governor Newsom a manila envelope full of unmarked bills, and he vetoes it. In a world of dangerous ideological zealots, there’s something reassuring about having a governor too dull and venal to be corrupted by the siren song of “being a good person and trying to improve the world”.

But sometimes you’re the group trying to do the right thing and improve the world, and then it sucks.

Which particular special interests and cronies influenced Newsom, and how come they could get more money and favors than we could?

Ron Conway is one of Newsom’s closest allies and biggest donors. In 2021, after Newsom broke his own COVID rules to go to a fancy dinner, some Californians tried to have him recalled (i.e., gathered enough petition signatures to force a special election on removing the governor). Conway (net worth $1.5 billion) helped coordinate Big Tech around opposing the recall and personally donated $200K to the anti-recall campaign; he apparently lobbied against the bill, and plausibly leads the list of people the Governor owes favors to.

I don’t fully understand where his SB 1047 opposition comes from. He has some AI investments, but no more than a lot of other people, and he also shows some signs of personal pro-innovation opinions, so I could go either way here. Still, it seems important to him for some reason, and the governor owes him a lot of favors.

Something vaguely similar probably applies to Reid Hoffman and Garry Tan, two of the bill’s other influential Silicon Valley billionaire opponents. Politico has more of the story here.

I think these people beat us because they’ve been optimizing for political clout for decades, and our side hasn’t even existed that long, plus we care about too many other things to focus on gubernatorial recall elections.

Newsom is good at politics, so he’s covering his tracks. To counterbalance his SB 1047 veto and appear strong on AI, he signed several less important anti-AI bills, including a ban on deepfakes which was immediately struck down as unconstitutional. And with all the ferocity of OJ vowing to find the real killer, he’s set up a committee to come up with better AI safety regulation. He’s named a few committee members already, most notably Fei-Fei Li:

[Opponents of SB 1047] had a big assist from “godmother of AI” and Stanford professor Fei-Fei Li, who published an op-ed in Fortune falsely claiming that SB 1047’s “kill switch” would effectively destroy the open-source AI community. Li’s op-ed was prominently cited in the congressional letter and Pelosi’s statement, where the former Speaker said that Li is “viewed as California’s top AI academic and researcher and one of the top AI thinkers globally.”

Nowhere in Fortune or these congressional statements was it mentioned that Li founded a billion-dollar AI startup backed by Andreessen Horowitz, the venture fund behind a scorched-earth smear campaign against the bill.

The same day he vetoed SB 1047, Newsom announced a new board of advisers on AI governance for the state. Li is the first name mentioned.

We’ll see whether Newsom gets his better regulation before or after OJ completes his manhunt.

(yes, I realize OJ is dead)

V.

Some opponents were gracious in victory:

Others were less so. Martin Casado (needless to say, of Andreessen Horowitz):

While supporters’ “concession speeches” ranged from defiant:

…to maybe slightly threatening. A frequent theme was that some form of AI regulation was inevitable. SB 1047 - a light-touch bill designed by Silicon-Valley-friendly moderates - was the best deal that Big Tech was ever going to get, and they went full scorched-earth to oppose it. Next time, the deal will be designed by anti-tech socialists, it’ll be much worse, and nobody will feel sorry for them.

Dean Ball wrote:

In response to the veto, some SB 1047 proponents seem to be threatening a kind of revenge arc. They failed to get a “light-touch” bill passed, the reasoning seems to be, so instead of trying again, perhaps they should team up with unions, tech “ethics” activists, disinformation “experts,” and other, more ambiently anti-technology actors for a much broader legislative effort. Get ready, they seem to be warning, for “use-based” regulation of epic proportions. As Rob Wiblin, one of the hosts of the Effective Altruist-aligned 80,000 Hours podcast put it on X:

» “Having failed to get up a narrow bill focused on frontier models, should AI x-risk folks join a popular front for an Omnibus AI Bill that includes SB1047 but adds regulations to tackle union concerns, actor concerns, disinformation, AI ethics, current safety, etc?”

This is one plausible strategic response the safety community—to the extent it is a monolith—could pursue. We even saw inklings of this in the final innings of the SB 1047 debate, after bill co-sponsor Encode Justice recruited more than one hundred members of the actors’ union SAG-AFTRA to the cause. These actors (literal actors) did not know much about catastrophic risk from AI—some of them even dismiss the possibility and supported SB 1047 anyway! Instead, they have a more generalized dislike of technology in general and AI in particular. This group likes anything that “hurts AI,” not because they care about catastrophic risk, but because they do not like AI.

The AI safety movement could easily transition from being a quirky, heterodox, “extremely online” movement to being just another generic left-wing cause. It could even work.

But I hope they do not. As I have written consistently, I believe that the AI safety movement, on the whole, is a long-term friend of anyone who wants to see positive technological transformation in the coming decades. Though they have their concerns about AI, in general this is a group that is pro-science, techno-optimist, anti-stagnation, and skeptical of massive state interventions in the economy (if I may be forgiven for speaking broadly about a diverse intellectual community).

I hope that we can work together, as a broadly techno-optimist community, toward some sort of consensus. One solution might be to break SB 1047 into smaller, more manageable pieces. Should we have audits for “frontier” AI models? Should we have whistleblower protections for employees at frontier labs? Should there be transparency requirements of some kind on the labs? I bet if the community put legitimate effort into any one of these issues, something sensible would emerge.

The cynical, and perhaps easier, path would be to form an unholy alliance with the unions and the misinformation crusaders and all the rest. AI safety can become the “anti-AI” movement it is often accused of being by its opponents, if it wishes. Given public sentiment about AI, and the eagerness of politicians to flex their regulatory biceps, this may well be the path of least resistance.

The harder, but ultimately more rewarding, path would be to embrace classical motifs of American civics: compromise, virtue, and restraint.

I believe we can all pursue the second, narrow path. I believe we can be friends. Time will tell whether I, myself, am hopelessly naïve.

Last year, I would have told Dean not to worry about us allying with the Left - the Left would never accept an alliance with the likes of us anyway. But I was surprised by how fairly socialist media covered the SB 1047 fight. For example, from Jacobin[3]:

The debate playing out in the public square may lead you to believe that we have to choose between addressing AI’s immediate harms and its inherently speculative existential risks. And there are certainly trade-offs that require careful consideration.

But when you look at the material forces at play, a different picture emerges: in one corner are trillion-dollar companies trying to make AI models more powerful and profitable; in another, you find civil society groups trying to make AI reflect values that routinely clash with profit maximization.

In short, it’s capitalism versus humanity.

Current Affairs, another socialist magazine, also had a good article, Surely AI Safety Legislation Is A No-Brainer. The magazine’s editor, Nathan Robinson[4], openly talked about how his opinion had shifted:

One thing I’ve changed some of my opinions about in the last few years is AI. I used to think that most of the claims made about its radically socially disruptive potential (both positive and negative) were hype. That was in part because they often came from the same people who made massively overstated claims about cryptocurrency. Some also resembled science fiction stories, and I think we should prioritize things we know to be problems in the here and now (climate catastrophe, nuclear weapons, pandemics) than purely speculative potential disasters. Given that Silicon Valley companies are constantly promising new revolutions, I try to always remember that there is a tendency for those with strong financial incentives to spin modest improvements, or even total frauds, as epochal breakthroughs.

But as I’ve actually used some of the various technologies lumped together as “artificial intelligence,” over and over my reaction has been: “Jesus, this stuff is actually very powerful… and this is only the beginning.” I think many of my fellow leftists tend to have a dismissive attitude toward AI’s capabilities, delighting in its failures (ChatGPT’s basic math errors and “hallucinations,” the ugliness of much AI-generated “art,” badly made hands from image generators, etc.). There is even a certain desire for AI to be bad at what it does, because nobody likes to think that so much of what we do on a day-to-day basis is capable of being automated. But if we are being honest, the kinds of technological breakthroughs we are seeing are shocking. If I’m training to debate someone, I can ask ChatGPT to play the role of my opponent, and it will deliver a virtually flawless performance. I remember not too many years ago when chatbots were so laughably inept that it was easy to believe one would never be able to pass a Turing Test. Now, ChatGPT not only aces the test but is better at being “human” than most humans. And, again, this is only the start.

The ability to replicate more and more of the functions of human intelligence on a machine is both very exciting and incredibly risky. Personally I am deeply alarmed by military applications of AI in an age of great power competition. The autonomous weapons arms race strikes me as one of the most dangerous things happening in the world today, and it’s virtually undiscussed in the press. The conceivable harms from AI are endless. If a computer can replicate the capacities of a human scientist, it will be easy for rogue actors to engineer viruses that could cause pandemics far worse than COVID. They could build bombs. They could execute massive cyberattacks. From deepfake porn to the empowerment of authoritarian governments to the possibility that badly-programmed AI will inflict some catastrophic new harm we haven’t even considered, the rapid advancement of these technologies is clearly hugely risky. That means that we are being put at risk by institutions over which we have no control.

I don’t want to gloss this as “socialists finally admit we were right all along”. I think the change has been bi-directional. Back in 2010, when we had no idea what AI would look like, the rationalists and EAs focused on the only risk big enough to see from such a distance: runaway unaligned superintelligence. Now that we know more specifics, “smaller” existential risks have also come into focus, like AI-fueled bioterrorism, AI-fueled great power conflict, and - yes - AI-fueled inequality. At some point, without either side entirely abandoning their position, the very-near-term-risk people and the very-long-term-risk people have started to meet in the middle.

But I think an equally big change is that SB 1047 has proven that AI doomers are willing to stand up to Big Tech. Socialists previously accused us of being tech company stooges, harping on the dangers of AI as a sneaky way of hyping it up. I admit I dismissed those accusations as part of a strategy of slinging every possible insult at us to see which ones stuck. But maybe they actually believed it. Maybe it was their real barrier to working with us, and maybe - now that we’ve proven we can (grudgingly, tentatively, when absolutely forced) oppose (some) Silicon Valley billionaires, they’ll be willing to at least treat us as potential allies of convenience.

Dean Ball calls this strategy “an unholy alliance with the unions and the misinformation crusaders and all the rest”, and equates it to selling our souls. I admit we have many cultural and ethical differences with socialists, that I don’t want to become them, that I can’t fully endorse them, and that I’m sure they feel the same way about me. But coalition politics doesn’t require perfect agreement. The US and its European allies were willing to form an “unholy alliance” with some unsavory socialists in order to defeat the Nazis, they did defeat the Nazis, and they kept their own commitments to capitalism and democracy intact.

As a wise man once said, politics is the art of the deal. We should see how good a deal we’re getting from Dean, and how good a deal we’re getting from the socialists, then take whichever one is better.

Dean says maybe he and his allies in Big Tech would support a weaker compromise proposal that broke SB 1047 into small parts. But I feel like we watered down SB 1047 pretty hard already, and Big Tech just ignored the concessions, lied about the contents, and told everyone it would destroy California forever. Is there some hidden group of opponents who were against it this time, but would get on board if only we watered it down slightly more? I think the burden of proof is on him to demonstrate that there are.

I respect Dean’s spirit of cooperation and offer of compromise. But the socialists have a saying - “That already was the compromise” - and I’m starting to respect them too.

VI.

A final interesting response to the SB 1047 veto came from the stock market. When Newsom nixed the bill - which was supposed to devastate the AI industry and destroy California’s technological competitiveness - AI stocks responded by doing absolutely nothing (source):

You can’t see it in the screenshot, but the first stock is NVIDIA, the second TSMC, the third Alphabet, and the fourth Microsoft. On average they went up about 0.5%, on a day when the NASDAQ as a whole also went up about 0.5%.

Some people objected that maybe it was “priced in”. But the day before SB 1047 got vetoed, the prediction markets gave it a 33% chance of passing:

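If the market had already priced in a one-in-three chance of the bill becoming law, the veto should have removed that discount, so we should expect the stock market shift to equal about 1/3 of the total damage to AI companies that the bill would have caused. But the stock market shift was indistinguishable from zero.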

Should we have expected a single California law to have an effect visible in the markets? According to Daniel and @GroundHogStrat, past history says yes: when California passed a proposition backing down from its attempt to crack down on Uber over gig workers, Uber’s stock went up 35%. If SB 1047 was going to be as bad for AI as the anti-gig-worker rules were for Uber, we would expect a similar jump. Even if the bill would be fine for incumbents but only hurt small startups, we would have expected a hit for NVIDIA, who sells chips to those small startups. But NVIDIA went up less than the NASDAQ overall.
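The “priced in” argument is just expected-value arithmetic, so here is a minimal Python sketch of it. The assumptions are mine, not the post’s: abnormal return is measured as the stock’s move minus the NASDAQ’s move (effectively assuming each stock tracks the index one-for-one), and “damage” is a hypothetical fraction of company value the bill would have destroyed had it become law. The function names and the 30% damage figure are illustrative only; the 1/3 odds and the ~0.5% moves come from the text above.

```python
# Sketch of the veto-day "priced in" arithmetic (illustrative assumptions only).
# damage: hypothetical fraction of firm value the bill would destroy if enacted.
# p_pass: probability the market assigned to the bill becoming law.


def abnormal_return(stock_return: float, market_return: float) -> float:
    """Market-adjusted abnormal return: the part of the move the index doesn't explain."""
    return stock_return - market_return


def expected_veto_return(damage: float, p_pass: float) -> float:
    """Veto-day return if the pre-veto price already discounted p_pass * damage.

    Pre-veto price  = V * (1 - p_pass * damage)
    Post-veto price = V  (the regulatory risk is gone)
    """
    return 1.0 / (1.0 - p_pass * damage) - 1.0


def implied_damage(observed_return: float, p_pass: float) -> float:
    """Invert expected_veto_return: what damage does an observed move imply?"""
    return observed_return / (p_pass * (1.0 + observed_return))


p = 1 / 3  # prediction-market odds the day before the veto
ar = abnormal_return(0.005, 0.005)  # ~0.5% for the AI stocks vs ~0.5% for the NASDAQ
print(implied_damage(ar, p))  # 0.0

# A hypothetical bill that would destroy 30% of value should have produced
# a veto-day jump of roughly 11%:
print(round(expected_veto_return(0.30, p), 3))  # 0.111
```

Inverting the formula is the point: as long as the market gave the bill any real chance of passing, a veto-day abnormal return indistinguishable from zero implies expected damage indistinguishable from zero.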

VII.

Some people tell me they wish they’d gotten involved in AI early. But it’s still early! AI is less than 1% of the economy! In a few years, we’re going to look back on these days the way we look back now on punch-card computers.

Even very early, it’s possible to do good object-level work. But the earlier you go, the less important object-level work is compared to shaping possibilities, coalitions, and expectations for the future. So here are some reasons for optimism.

First, we proved we can stand up to (the bad parts of) Big Tech. Without sacrificing our principles or adopting any rhetoric we considered dishonest, we earned some respect from leftists and got some leads on potential new friends.

Second, we registered our beliefs (AI will soon be powerful and potentially dangerous) loudly enough to get the attention of the political class and the general public. And we forced our opponents to register theirs (AI isn’t scary and doesn’t require regulation) with equal volume. In a few years, when the real impact of advanced AI starts to come into focus, nobody will be able to lie about which side of the battle lines they were on.

Third, we learned - partly to our own surprise - that we have the support of ~65% of Californians and an even higher proportion of the state legislature. It’s still unbelievably, fantastically early, comparable to people trying to build an airplane safety coalition when da Vinci was doodling pictures of guys with wings - and we already have the support of 65% of Californians and the legislature. So one specific governor vetoed one specific bill. So what? This year we got ourselves the high ground / eternal glory / social capital of being early to the fight. Next year we’ll get the actual policy victory. Or if not next year, the year after, or the year after that. “Instead of planning a path to victory, plan so that all paths lead to victory”. We have strategies available that people from lesser states can’t even imagine!

[1] Trying to be maximally charitable, I think he’s saying that the penalty for infecting someone with HIV was much more severe than the penalty for infecting people with other diseases, that this was a relic of the age of mass panic over AIDS, and that now that we’re panicking less we should bring the penalties back into line. But his argument style actively alienates me, focusing as it does on “reducing stigma” against AIDS patients. This brings back too many bad memories of the days when we weren’t allowed to try to prevent COVID from reaching the US, or prepare for it when it did, because that might “cause stigma” against Chinese people. I’m now permanently soured on all stigma-based arguments, and the current issue under discussion - declaring that it’s not such a big deal to intentionally give people AIDS, because if we admitted it was a big deal then that might cause “stigma” - is a perfect example of why this turns me off. I’d be more comfortable if he’d ignored the “stigma” angle and just tried to argue that the penalties were out of line with equally dangerous diseases (which I haven’t yet seen evidence about).

[2] I got some pushback on this claim and agree it’s confusing. Hendrycks divested from his equity in a safety company called Gray Swan; this wasn’t worth anywhere near that amount. But he also says he turned down $20 million in equity in Elon Musk’s X.AI.

[3] I don’t want to overplay this. Garrison Lovely, author of the Jacobin article, is himself an effective altruist, one of our few really committed socialists. I’ve clashed with him on his socialist opinions in the past, but I’m still grateful to have him as an ally, and happy that our common cause can rally support from across the political spectrum. I also think that regardless of Garrison’s personal opinions, the fact that Jacobin would publish his story suggests a broader sea change within the socialist movement.

[4] I’ve also clashed with Robinson before, sometimes pretty seriously, but I can at least offer him the same praise I offered Scott Wiener - in a world full of hacks and lizardmen, he’s a real person with a personality and principles.
