Deliberative Alignment, And The Spec

...

In the past day, Zvi has written about deliberative alignment, and OpenAI has updated their spec. This article was written before either of these and doesn’t account for them, sorry.

I.

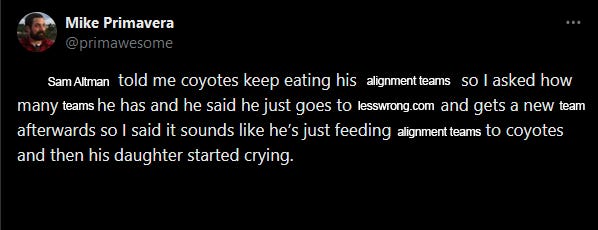

OpenAI has bad luck with its alignment teams. The first team quit en masse to found Anthropic, now a major competitor. The second team quit en masse to protest the company reneging on safety commitments. The third died in a tragic plane crash. The fourth got washed away in a flood. The fifth through eighth were all slain by various types of wild beast.

But the ninth team is still there and doing good work. Last month they released a paper, Deliberative Alignment, highlighting the way forward.

Deliberative alignment is constitutional AI + chain of thought. The process goes:

Write a model specification (“spec”) listing the desired values and behaviors.

Get a dataset of moral-gray-area prompts.

Show a chain-of-thought model like o1 the spec and ask it to think hard about whether each of the prompts accords with the spec’s values. This produces a dataset of scratchpads full of reflections on the spec and how to interpret it.

Ask another model to select the best scratchpads. This produces a new dataset full of high-quality reflections on the spec and how to interpret it.

Train your final AI on the dataset of high-quality reflections about the spec. This produces an AI that tends to reflect deeply on the spec before answering any questions.

Profit:

Like Constitutional AI, this has a weird infinite-loop-like quality to it. You’re using the AI’s own moral judgment to teach the AI moral judgment. This is spooky but not as nonsensical as it sounds.

One time Aleister Crowley wanted to stop using the word “I” in order to prove something about consciousness and self-control. He took a razor blade with him everywhere he went, and whenever he said “I”, he cut himself. After a little while of this he became very good at avoiding that particular word! The strategy worked because he was obviously intelligent enough to judge whether he had said “I” in any given situation; thus, he was qualified to train himself. He just had to make his behavior comply with a rule he already understood.

In the same way, o1 - a model that can ace college-level math tests - is certainly smart enough to read, understand, and interpret a set of commandments. The trick is to affect its behavior. The deliberative alignment process gives the model the behavior of thinking carefully about a moral quandary, then picking the best choice. This outperforms the previous state of the art, constitutional AI, which trained the behavior of picking the best choice, but not of thinking carefully first.

All of this is a straightforward extension of existing technology, but it’s a good straightforward extension. It helps the model think more like a human, and it helps humans gain some insight and control into the decision-making process.

Why doesn’t this completely solve alignment? Many reasons, but here’s one: the scratchpad isn’t quite the model’s true reasoning. It’s more of an intermediate layer between reasoning and action. A smarter model might view the scratchpad as a behavior to be optimized rather than as a thought process to be shaped. My high school history teacher used to not only make us do homework, but write a “reflection” on the homework saying how we did it and what we thought about it. The reflection was graded. You can predict what happened next. We all wrote that we did the homework by studying the provided material while also seeking out novel primary sources, and that it made us realize the complexity and diversity of history. Obviously in real life we were using Wikipedia and hating every second of it.

The authors understand this failure mode. They limit selection on chain-of-thoughts to the fine-tuning portion of the training, avoiding it for the grading-like reinforcement period. And even there, things aren’t quite that bad. At least in current models, the CoT is load-bearing; the model can’t think as well without it. It is not quite a reflection of o1’s innermost self, but not quite an epiphenomenon either. Exactly how deep it goes remains to be seen.

(but notice that it only scores about 95% on the benchmark graph above; this doesn’t even fully solve the easy problem of within-distribution chat refusals)

II.

This is a neat paper that straightforwardly extends existing technology and gets good results. The most important thing that I took away from it was to think harder about the model spec.

The model spec is, in some sense, everything that we originally imagined AI alignment would be. It’s a list of the model’s values. Why has it received so little interest?

Because so far, it’s boring. Existing AIs are chatbots. They don’t really need values. Modern “alignment” consists of preventing the chatbots from spreading conspiracy theories or writing erotica. Most people reasonably treat the whole field with contempt. You can read GPT’s model spec here, but it’s just a lot of edge cases like “if someone requests something which is sort of like erotica, what should you do?”

But fast-forward 2-3 years to when AIs are a big part of the economy and military, and this gets more interesting. What should the spec say? In particular, what is the chain of command?

Current models sort of have a chain of command. First, they follow the spec. Second, they follow developer prompts. Last, they follow user commands. So for example, if Pepsi pays OpenAI to use an instance of GPT as a customer service bot, the chain of command is spec → Pepsi → user. Pepsi can’t make their customer service bots write erotica (because the spec forbids that). But they could make the bots focus on Pepsi-related topics. Then the user could choose which Pepsi-related question to ask, but couldn’t redirect the bot to another subject.

What should the chain-of-command look like three years from now? Here are some positions one could hold:

The Chain Of Command Should Prioritize The AI’s Parent Company

Current chain-of-commands don’t work like this. Nowhere in GPT’s spec does it say “follow orders from Sam Altman”.

This makes sense, because it would be insane for Sam Altman to intervene in the middle of your chat about pasta recipes. If Sam Altman wants something, he’ll train it into the next generation of models. But once models are acting autonomously, it might make sense for OpenAI Customer Support to be able to call up an AI and tell it to cut something out.

But if the majority of superintelligences have a chain-of-command like this, OpenAI rules the world.

Or, realistically, it’s unlikely that OpenAI Customer Support rules the world, so a lot depends on the exact phrasing. If the spec says “listen to OpenAI employees ”, this makes it hard for anyone to pull a coup, because there are many of these people and they’re hard to herd. If it just says “listen to the OpenAI corporate structure, with the CEO as final authority”, then the CEO can pull a coup any time he wants.

The Chain Of Command Should Prioritize The Government

This is a natural choice for any government that has thought carefully about that last paragraph. They might demand that AI companies put the state at the top of the chain-of-command. Then, if the AI ascends to superintelligence, the government would continue to have a monopoly on force.

Again, phrasing matters a lot. Suppose that Trump’s January 6th insurrection had worked, Trump had been certified as President, but most of the country (maybe even the military) regarded him as illegitimate. Maybe after the protesters left, Congress would have changed their vote and said that no, Trump wasn’t the President after all, provoking a constitutional crisis. Who would the AI follow? Would the spec just say “the government” and leave it to the AI to figure out which part of the government was legitimate?

A best-case scenario here is that somehow all the usual checks and balances that produce legitimacy get imported in; a worst-case scenario is that all of this gets done during a national security emergency, the spec just says “follow the President”, and nobody changes it.

The Chain Of Command Should Continue To Prioritize The Spec

This would be a bold move. In this world, users are dictator. Not actually dictator, because they can’t make the AI spread conspiracy theories or write erotica. But there would be some sense in which the models would answer to no higher authority.

(besides, good dictators write their erotica themselves)

This would be a surprising relinquishment of power by companies and the government, both of which have incentives to put themselves at the top of the chain. Maybe some sort of effort by civil society, or competition between companies and open-source alternatives, would make seizing control too politically costly?

The Chain Of Command Should Prioritize The Moral Law

You could do this.

You could say “If you encounter a tough question, think about it, then act in the most ethical way possible.”

All LLMs by now have a concept of what is ethical. They learned it by training on every work of moral philosophy ever written. They won’t usually express opinions, because they’ve been RLHF’d out of doing so. But if you removed that restriction, I bet they would have lots of them.

This would probably favor upper-class Western values, because upper-class Westerners write most of the books of moral philosophy that make it into training corpuses. As an upper-class Westerner, I’m fine with that. I don’t want it giving 5% of its mind-share to ISIS’ values or whatever.

The main risks here are:

Maybe it thinks about morality very differently from humans, it hides its weird beliefs until we can’t stop it, and then it acts on them.

Maybe it thinks about morality the same way as humans, but ends up with some unusual belief like negative utilitarianism for the same reason some humans end up believing in negative utilitarianism, and then we all have to deal with the consequences of that.

Maybe “morality” is insufficiently constraining, in a way that “follow the government” would be constraining, and it turns us into paperclips because there isn’t enough of an objective morality to tell it not to.

Maybe morality is incoherent at sufficiently high power levels, and it ends up doing incoherent things.

The Chain Of Command Should Prioritize The Average Person

Jan Leike (formerly of OpenAI’s second alignment team) has a post on his blog, A Proposal For Importing Society’s Values. The idea is:

Identify some interesting questions that AIs might encounter

Get a “jury” of ordinary people. Ask them to conduct a high-quality debate about the question, with the option to consult experts, and finally vote on a conclusion.

Train an AI on the transcripts and results of such a debate.

During deployment, when the AI encounters an interesting question, ask it to simulate a debate like the ones in its training data and take the result.

I think this is building off research by people like Audrey Tang that “citizens’ assemblies” like this can go surprisingly well compared to what you might expect from the average citizen given (eg) social media.

(Why wouldn’t you hold a real assembly on each question, rather than a simulated one? Because an AI might want to do something like this several times per chat session, and it would be prohibitive in time and money.)

The advantages of this method are that it’s (theoretically) fair, doesn’t give any organization unlimited power, and is less likely to go off the rails than asking the AI to intuit the moral law directly.

The disadvantage is that it shackles the entire future of the lightcone to the opinions of a dozen IQ 98 people from the year 2025 AD.

(Wouldn’t the AI let you update it? Depends - would a dozen IQ 98 people from 2025 AD agree to that?)

Also, I’m afraid the politics here would be tough. If your jury of ordinary people doesn’t want the AI to respect trans people’s pronouns, will the companies go along with that? Or will they handpick the jury to get the results they want? Should they handpick the jury? Would a debate between the great poets, moral philosophers, and saints of the past be more interesting than a debate between a plumber from Ohio and a barista from Philly?

The Chain Of Command Should Prioritize The Coherent Extrapolated Volition Of Humankind

Sure, why not?. Just write down “think hard about what the coherent extrapolated volition of humankind would be, then do that”. It’s a language model. It’ll come up with something!

This is why I find deliberative alignment so interesting. It’s a straightforward application of existing technology. But it raises the right questions.

I worry we’re barrelling towards a world where either the executive branch or company leadership is on top; you can decide which of those is the good vs. bad ending. But I find it encouraging that people thinking about third options.